Challenges to Database DevOps: Dealing With Drift

Sometimes, people start their DevOps journey by getting their database into source control, but then, they don’t deploy from source control. Instead, they continue their manual deployments to production. Quickly they find that the database code in their production instances and the database code in their source control system don’t match. Alternatively, you may have a full-blown, full-automated deployment process from source control, but, last night at 3AM (it’s always at 3AM), someone introduced a change directly to production as part of dealing with an outage. If this isn’t dealt with, again, we arrive at a place where what’s in source control and what’s on production don’t match. This mismatch is frequently referred to as drift.

The question then is, how do you deal with database drift?

While it’s possible for a number of different causes for drift, I’m going to focus on two. First, we’ll talk about what happens when you start in source control, but for whatever reason, you don’t maintain it. Second, we’ll talk about how to deal with those 3AM changes that could occur. Finally, we’ll also talk about ways to try to mitigate and identify drift, before it causes problems.

Source Control Drift

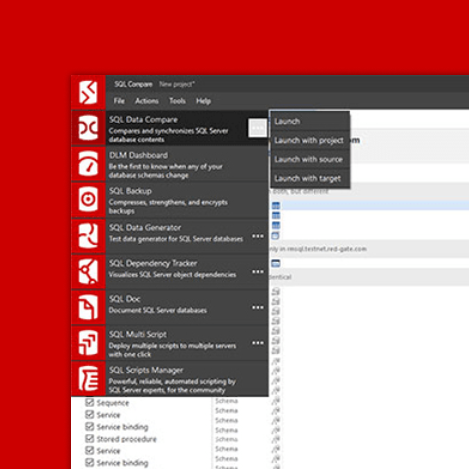

It’s actually very easy to arrive at a place, almost immediately, where source control and production don’t match. Let’s assume for a moment that you’re just starting with source control and database deployment automation. Let’s also assume we’re dealing with a brown field project, an existing database that we’re going to get into source control to begin automating. First step is simple. Reverse engineer your production database into source control. There are tools that can do this (Redgate might have one or two), or you can do it manually. How ever you get it done, you’ve got a copy of production in your source control system. Immediately a developer modifies a piece of code and merges it into source control. You’re already looking at drift.

Let’s start with branches. A branch is a way to create a copy of your source code that allows you to make changes, without affecting the code in other branches. There is a merge process that lets you incorporate those two different sets of changes together. When you first create that reverse engineer of source code, you should immediately start working with branching. Either, the production code is your main branch, or, you create a branch immediately that represents production. Then, any and all changes to production go through that branch. Fine.

However, what happens if we started the concept of automating our systems, but production and source control are already way off?

Again, let’s start with branches. You’ve got two sources of truth, source control and production. We need a single, matching, source of truth. Create a branch of your code. Then, again, using a tool is easier, compare this branch to production. Identify all the changes, and get them into this branch, so that you have a source of truth in source control that matches production. From there, even if you’re not yet automating deployments from source control to production, ensure that you regularly synchronize the two.

Next, you’ve got to deal with the fact that development is taking place on code that isn’t matching production. This means either one giant merge, wherein we move in all the changes at once, or, a series of smaller merges, moving in smaller sets of changes over time. The giant merge is almost guaranteed to cause immediate pain to the development process as we find all sorts of breaking changes to our code. However, like pulling off the bandage quickly, the pain is focused and over. The series of smaller merges can be planned out and controlled over time in order to minimize the impact to the development process. However, the longer process does mean it’s more likely that problematic code can find it’s way out of development and onto your production servers. After all, you’re developing and testing on structures that don’t match production.

If I had to choose, I’d go with the series of smaller merges, but I’d try to do them on as accelerated a cycle as possible. You really want to get production and your source control production branch to match in order to be able to make your non-production instances look like production for testing purposes.

Production Drift

Speaking for myself, but I’m sure others will back me up, at 3AM, I’m going to do what I need to in order to get the servers back online, or finish the data load, or whatever problem it is that woke me up at 3AM. If this means adding an index, I’m adding that index.

To deal with production drift, we just have to make it a part of the process. Assume that the 3AM event is going to happen and then build it into our plans. Make the change, but capture the script. The next day, get that change into source control. Done!

OK, not done because you still should immediately begin the process of merging this change into the other source control branches. Further, you’ll need to plan some deployments across your environments to ensure that, once again, they match production. It’s a constant struggle to ensure that all the non-production environments look as much like production as possible. This ensures that testing done on those environments will match the results from the ultimate deployment to production.

Mitigating Drift

Start with one core premise:

If it’s not in source control, it doesn’t exist.

This premise will help you to eliminate drift for the most part. If the only source for deployments is source control, you’re much less likely to see drift on your systems. It’s that easy.

Next, assume that some drift is likely. Build the concept of drift into your process. Ensure that you educate everyone who can make changes to production how to deal with those changes after the fact. Further, experiment with your system to create a fast track for changes where you start in source control and then run through a minimum set of changes. Then, you won’t always have to simply make the 3AM change on production. If I know I can spend about five minutes and have greater confidence that the script I’m about to run won’t break things, I’m all for it.

Next, incorporate drift detection into your deployment automation. Have a way to compare what’s in production currently with what your production branch of source control thinks should be there. Here again, tools will help. If you’re likely to hit a problem with drift, you can stop the deployment before the problem occurs. Here's an example using Redgate Monitor as a way to alert when an unauthorized user makes a structural change in your database.

Conclusion

Because it’s possible, even easy, for changes to occur to a database completely separately from your deployment automation process, you must assume within that process that these changes can happen. Then, you simply build infrastructure and processes around the drift. The one word of caution I would have though is that drift should be exceptional. Yes, it’s possible, but if every change, or even the majority of changes, are occurring through drift, through out-of-band, out of process, means, then we still have a problem. Drift should be acknowledged, accepted, dealt with and very rare.