A DBA’s Journey towards Database DevOps

John Morehouse describes how a quest to help solve his organization's "deployment bottleneck" led them to a place where they could both deliver application and database changes into the wild at a pace to satisfy customer demand, and achieve a better work-life balance for the team, both of which are well worth having.

Several years ago, I was working as a database administrator for an organization in Omaha, Nebraska. I was one of four production DBAs and, as well as keeping all the servers running sweetly, we supported, between us, ten application development teams, comprising a total of around a hundred developers. These teams were sending new releases our way more and more frequently, with improvements, fixes, new features and so on. Of course, the management wanted all this in production as quickly as possible, because the faster we could release cool new features, the happier the end users were, and the more widgets we sold.

The problem was that the DBA team weren’t getting any faster at deploying these releases to production. Our part in the process was painfully manual and time consuming. Work would back up, and we’d constantly be juggling four or five deployments at any one time. Any problem with a release, and there were always problems, would cause deadlines to be missed, working hours to extend, often into weekends, and we’d have management breathing down our necks. Frustration levels would rise, and tempers fray. We knew we could save time by scripting processes, and by putting in place systems such as issue tracking and automated checks, but these take undistracted time to achieve, and we had no time and plenty of distractions.

Does any of this sound familiar to you, as a DBA? If so, are you part of the problem? As in any relationship that hits a rough patch, admitting your own part in its failings is difficult. I’ve been there, and for a long time didn’t see myself, or the way I worked, as the issue.

I’ll explain what changed my mind, and what we did to fix things by introducing better collaboration, automation and test procedures. The tools we used to help us were mostly Redgate tools, but I believe the overall lessons are universal. The biggest challenge is not so much with the tools and technology, but in getting the team processes working well. A tool will help, of course, but only if the processes and relationships are working efficiently.

Denial

Our application development teams were finding faster and better ways to deploy code, while the processes that we felt obliged to use to deploy database changes weren’t evolving as quickly (well, frankly, not at all). Why? We never found time to come up for air from the sea of detail and work out an effective strategy to do the job.

At deployment time, the DBAs still reviewed manually any part of the application that might touch our Production servers, or any scripts to make the required database changes. For what was often a 50-script deployment process, this was a daunting task. The deployment itself was also manual, and therefore prone to human error. With four DBAs trying to support a hundred or so developers, it seemed little wonder we were always considered to be the bottleneck!

Sometimes, we had to say “no” to certain changes due to legitimate concerns about performance, security or stability. Of course, this would cause delays while we fixed the problems, but it was our job to protect these servers! If we didn’t do these reviews, well we knew that bad things would usually happen, and because we were responsible for the servers, we’d be the ones who would have to cope with the consequences.

I know that many DBAs are in a similar or worse situation. In a recent presentation, I asked a mainly-DBA audience how many developers they supported. The highest number was 300. “How many database administrators do you have on staff?” I asked. Four. If you have four DBAs supporting a development staff of 300 then it’s inevitable that the DBAs will be bottleneck, right? It’s hardly our fault. I had always thought like that, but I was wrong, as it turns out, because there is hardly a system anywhere that can’t be improved by working smartly, in a good team.

Seeking Help

One of the most important steps I ever took was to get involved with the SQL Server community, and start speaking. Through those efforts I became friends with Steve Jones, who is the Editor of SQLServerCentral.com, and an evangelist with Redgate. I explained to Steve the sort of problems we faced and asked for his advice. He looked at me pityingly, paused for a moment, and then gave me the frank truth.

Although I knew things had to change, I was still to a large degree in “selfish DBA” mode. I wanted the solution to be all about our DBA team, and the databases. I wanted automated database deployments to make my life easier. I wanted to stop being the bottleneck all the time. And yet, I was dead set against anything DevOps-related because I was that guy who thought DevOps was just a synonym for NoOps, and no way in all things holy was I going to allow tools to deploy anything to Production without my express consent! After all, I had servers and databases to protect!

Nevertheless, talking to Steve proved pivotal, and various conversations followed, which led to Redgate agreeing to send over a few people to see if they could help us out. So, my now good friends Alex Yates and Sean Newnham made the journey from the UK to Omaha, and we got down to business.

Steps towards Database DevOps

Now, this wasn’t just the three of us sequestered in a conference room somewhere; that would have been our first big mistake. Any project that will cause big changes for lots of people cannot afford to omit any team from the process. Anybody who is affected by the changes should have a voice in determining the best solution.

Our team included a lead developer, a database developer, a QA engineer, a production DBA (myself), and others. Essentially, we involved everybody that would have a touch point in the new process to ensure we covered the bases. We remained in a conference room for about three days, and we worked out a DevOps strategy which would embrace the database as well as the application.

As the meetings progressed, I slowly started to see the benefits of having the database involved with the continuous delivery of the applications. Any way of making it quicker to deliver better business applications would bring value to the organization. By making deployment of databases changes much simpler and more frequent, it would allow the application developers to deliver alterations to the applications they supported much faster and more consistently. Also, the DBAs would achieve a better work life balance, and lead less stressful lives.

In that short time span, I completely shifted my perspective from “this will never work” to “we have to make this work“. So, what did we agree to do? As the production DBA, there really was a lot I wanted to fix about our manual deployment processes. However, before we could automate our processes, we needed to improve them and introduce them earlier in the pipeline.

Get the database into source control

We were good about putting SSIS packages into source control, but not so good at the source of the databases themselves. I’ve heard arguments that a backup is all that is needed for database source control. This is not true. Source control is not about keeping the database safe, but about teamwork. How can a set of backups give you the narrative of changes? How would you use a backup to find out the ‘who, when, where and what’ of every change? How could you do code reviews and cooperate on scripting? How could you promote effective and standard ways of doing database operations?

Any database, regardless of whether the goal is a continuous delivery process, had to be in source control. Just like developers, we needed to commit unit-tested changes to source control. From there, we could incorporate them into an automated build process, test the whole application, check it over for compliance and security, and eventually package up the release and deploy it. In short, source control is the basis for all subsequent change and deployment processes. It allows a group of professionals to work as a single team when the task requires it.

Once you’ve taken the decision to put the database code into source control, and to build from this source, there are plenty of ways of do it. No single way is the best: it depends very much on the team involved, the requirements of the business and the architecture of the applications.

There are, for example, different ways of building the database, and this may affect how you version control the database. In the state-based model, the source of any database object is the DDL script to CREATE the required version of it. We then use a tool such as SQL Compare to compare the static definition of the database (i.e. the complete set of object DDL scripts for a database version) with a target database, and derive a deployment script that will change the state of the target database to match what’s in source control. Building from object state works best if you mainly use the database code modules (views, stored procedures and so on) that define the database interface, and only rarely mess around with the internal organization or structure of tables. You’ll still need to hand-craft the migration scripts required for any table changes, to ensure all data is preserved correctly.

In the migration-based model, the source for each object is the initial creation script for each object along with a sequence of immutable change, or migration scripts, that move it to the required version. You can use a tool, such as SQL Change Automation, to piece together the database deployment script from the series of migration scripts for each object. If you need to make frequent table changes, or are working on a complex database system, with dependencies between databases, linked servers and so on, then you may find a migration-driven approach easier.

The two approaches are not mutually exclusive and both are valid means to manage database changes. However, the tools that facilitate these two models are often different and should be evaluated carefully, although hybrid tools, such as SQL Change Automation, support both. Find a proof of concept project in which you could test both models and compare the outcome.

We’ve mainly used a state-based tool, Red Gate’s SQL Source Control. It suited our team, because it plugs into SQL Server Management Studio (SSMS), which the DBAs use, and happened to be where our developers did database work.

Introduce Automated Integration and Testing

With the database in source control, we needed to decide how to automate database builds and integrations, and to introduce some automated testing, and quality checks. Our current lack of automated database testing was arguably our biggest barrier to more frequent and automated, but also more reliable, releases.

On average, data professionals do not do a good job of testing and validating database changes, or even the database itself. Do you load test your changes? Do you check for orphaned records? Do you sniff for security holes? Do you test the code for adherence to coding standards? Like many teams, our intentions were good, but our time was limited.

You can move in the right direction by injecting testing mechanisms, as well as better documentation, into the database build and integration portions of the process. To do this requires the provisioning capability to set up database test instances that can run the different tests in parallel, before tearing down the existing database copies and putting in place a set of fresh databases, ready for the next set of tests. You might try setting this up in Azure SQL Database, and it’s a function that SQL Clone performs very well.

We had access to a unit-testing tool, SQL Test, which is in SQL Toolbelt. It is based on the tSQLt testing framework. However, our developers aren’t familiar with it, and they are the ones who would be required write and automate the test suites, which requires a substantial time commitment.

Be prepared to take small steps here. Our first one has been for the DBA team to introduce automated code quality checks into the build process. These checks look for worrying patterns and known issues in the SQL change scripts. SQL Prompt offers interactive code analysis features, which flag issues during development, but you also need a way to incorporate these tests into an automated build process, so they are never missed. These automated code quality checks allowed the DBAs to control the rules that were used to look for the ‘bad patterns’ but without imposing a ton of additional work on the developers.

Release Management and Scheduled Deployments

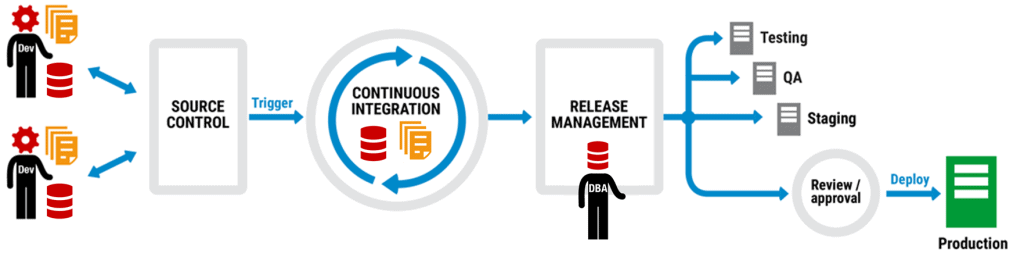

Having introduced automated builds and integrations, and whatever testing you can along the way, the final step is a Release Management tool that works with the build and CI tools and deploys the changes to the right environment, at the right time, and automatically.

With a non-automated deployment process, the risk of human error is huge. I’ve seen deployments that involved a dizzying array of steps and processes, most of them manual. I’ve seen a deployment that had hundreds of individual scripts, some of which contained thousands of lines of code. This is not an enjoyable experience for any DBA.

Nevertheless, moving away from a slow, manual process over which we had granular control, to an automated process in which a tool did all the work was, in the end, a leap of faith. After all, a lot was at stake. The databases that my team supported weren’t ‘widget’ databases; they handled large financial transactions. It’s a daunting step, and needs thorough testing, because it requires trust not only in the tools but also in the process. We used the SQL Compare (and Data Compare) CLIs to ensure that a rollback script was generated for every set of changes. In the event of a failure, and it will happen eventually, this provided a quick way to roll back the changes, if that was the best course of action. In most cases, we would roll forward rather than roll back but knowing roll back scripts were already available was comforting and helped establish the team’s confidence in using an automated process.

The rewards are worth it. For me, as a DBA, scheduled deployment is probably the single largest benefit of Database DevOps. Once you’ve tested everything thoroughly, and established trust, you get to do “set it and forget it” deployments. This is a huge step forward, and the one that does the most to facilitate an improved work-life balance for all those involved in deployments.

Training

Introducing database continuous delivery is certainly not as simple as choosing a few tools and persuading the organization to cut a check. You’ll need this investment in tools, of course, but also in training and re-education, because you are going to be shaking the foundation of the organization’s code release processes.

We knew that we had to re-educate the entire development staff and, speaking personally, the DBAs especially. Let’s face it. We can be a difficult bunch, resistant to change. Even fundamental steps such as putting databases into source control will be foreign to some DBAs and they will need time to adjust.

Introducing “DevOps” is a process of continuous communication, not just between development and operational teams but also with the management team, from whom you need financial and executive support. Without that, you’re going nowhere.

Summary

If you are a DBA in an organization where the ratio of developers to DBAs is high, and the release rate low, take some time to consider to what extent your team is a bottleneck. Are there always teams waiting for your code reviews? Do you have to be there, physically, for every deployment? Do you say “no” a lot because you are stretched thin? If you answer “yes” to any of these questions, you are the bottleneck.

It’s OK. I’ve been there and I know what that is like. It took some long meetings trapped in a conference room with the right people to convince me that “DevOps for Databases” was a good path to take. After those meetings, it took months of discussions and experimentation to see what tools and processes would fit the best for the organization. However, at the end, the efforts of the teams, along with the investments by the company, were rewarded. It put us on the path where we could deliver application and database changes to the wild at a pace to satisfy customer demands and restored a better work-life balance for the team, both of which are well worth having.