How to release value to customers faster, safer, easier

It’s a constant challenge for many businesses: where and how to focus investment in technology to remain competitive and stay current with the latest trends and development practices, while still meeting changing customer demands.

That’s even more relevant now because, in a tough economic climate, global digital transformation spending is still forecast to reach $3.4 trillion in 2026 with a five-year compound annual growth rate (CAGR) of 16.3%, according to IDC’s 2022 Worldwide Digital Transformation Spending Guide.

It may seem contradictory but as Craig Simpson, senior research manager with IDC's Data & Analytics Group stated when the guide was published in October 2022: “Despite strong headwinds from global supply chain constraints, soaring inflation, political uncertainty, and an impending recession, investment in digital transformation is expected to remain robust.”

The will is there to modernize and strengthen IT infrastructures so that they are competitive, resilient and future-ready. The key is to ensure the investment delivers the best possible return, as McKinsey’s 2022 Global Survey on digital investments and transformations, Three new mandates for capturing a digital transformation’s full value, points out:

“If a company wants to differentiate itself through better customer engagement and innovation, it needs to have several core tech capabilities in place … Top performers are more aggressive than their peers in adopting automated processes to test and deploy new tech, as well as agile and DevOps practices that enable faster innovation and execution while keeping costs down. Top performers are also significantly ahead of their peers in their adoption of the public cloud.”

Redgate is no different here. We constantly research how enterprises are adopting and using technology to release value to their users sooner. As well as desk research, we hold research calls with organizations, companies and enterprises around the world to find out what their challenges are across different sectors and even different roles within enterprises.

We have to. We build products and solutions that resolve particularly hard parts of the database development process in what we like to call an ingeniously simple way. We need to keep those tools aligned with the way IT teams work, the changing development environments they like to work in, and the challenges they face when releasing value from the development pipeline.

Three common themes emerged in our conversations over the last year or so that have enabled us to plan our product roadmaps and tie in our releases and new product launches with the way digital transformations are changing – and succeeding:

- Making teamwork really work

- Optimizing cloud adoption

- Focusing on code quality as well as speed

Making teamwork really work

Teamwork is at the heart of DevOps, and always has been. When asked to describe what DevOps is, I like to use the answer from Microsoft’s Donovan Brown:

“DevOps is the union of people, process, and products to enable continuous delivery of value to our end users.”

Note that he puts people first, because it starts with cooperation, collaboration and soft people skills, not software skills: in other words, teamwork. That’s not just the teamwork between developers and operations people that brings down internal walls and enables the collaboration that DevOps requires. It’s also the teamwork within and across different development teams.

This is where we’ve seen a lot of changes over the last couple of years, in terms of the work that developers do, where they do that work, and how teamwork threatens to be compromised.

Firstly, as revealed in the Stack Overflow Developer Survey 2022, the majority of respondents consider themselves to be more than one type of developer. While full-stack, back-end, front-end, and desktop developers account for the majority of job titles, they typically have multiple roles, and are responsible for developing both application code and database code, for example.

Secondly, as revealed in the same survey, they’re now using an average of 2.7 databases, the most popular being PostgreSQL, MySQL, SQLite, and SQL Server. They're also coding in a range of programming, scripting and markup languages like JavaScript, HTML/CSS, SQL, Python and TypeScript, with developers moving between them during the working day.

Thirdly, and a direct result of those first two changes, is that teamwork is more complicated than ever. Developers are expected to do more, with more technologies and more types of database, and processes are becoming tangled. What works one way with one type of database works another way with a second, or third. Teams become siloed with different release processes. Teamwork, the bedrock of DevOps, is being compromised.

As a result, cross-database products like Flyway, the open source database migrations tool, are being adopted more widely. By standardizing migrations across more than 20 different databases, Flyway encourages and enables teamwork, and simplifies what would otherwise be complicated release processes.

I like the way the IT team at Desjardins, the leading financial cooperative in North America, put it when I talked to them about how introducing Flyway resolved the challenge of standardizing migrations across teams and multiple database types: “So many people were deploying to so many databases, it was hard to keep track of who was deploying what and when.”

And after they introduced Flyway? The team now release changes up to 40 times per day and, even though they are deploying changes faster, they have a complete picture of which schema versions are in use in every environment.
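To illustrate why a shared migration convention gives teams a consistent picture of schema versions, here is a minimal sketch that orders migration scripts by version number. It follows the spirit of Flyway's versioned migration naming (`V<version>__<description>.sql`), but the file names and the `parse_version` and `order_migrations` helpers are hypothetical, and this is not how Flyway itself is implemented.

```python
import re

# Assumed naming pattern, modeled on Flyway-style versioned migrations:
# "V", a version such as 2 or 2.1, a double underscore, a description, ".sql".
MIGRATION_PATTERN = re.compile(r"^V(\d+(?:[._]\d+)*)__(.+)\.sql$")


def parse_version(filename):
    """Return the version in a migration file name as a tuple of integers."""
    match = MIGRATION_PATTERN.match(filename)
    if match is None:
        raise ValueError(f"Not a versioned migration: {filename}")
    # Split "2.1" or "2_1" into (2, 1) so versions compare numerically.
    return tuple(int(part) for part in re.split(r"[._]", match.group(1)))


def order_migrations(filenames):
    """Sort migration scripts in the order they should be applied."""
    return sorted(filenames, key=parse_version)


# Hypothetical scripts, as they might be committed by different teams.
scripts = [
    "V2__add_orders.sql",
    "V10__add_index.sql",
    "V1__create_customers.sql",
    "V2.1__orders_status.sql",
]

ordered = order_migrations(scripts)
for name in ordered:
    print(name)
```

Comparing versions as tuples of integers, rather than as strings, keeps `V10` after `V2`, which is the kind of detail that becomes hard to track when every team uses its own convention.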

Key takeaway

With developers having multiple roles and now working across different databases as well as applications, the standardization of processes to encourage teamwork is more important than ever. That way, when developers move from JavaScript to SQL, PostgreSQL to SQL Server, they can work in the same, familiar fashion, enabling teamwork both within and across teams.

To ease workflows across database types, Redgate has been adding to the capabilities of Flyway, the most popular open source database migration tool which automates database deployments across teams and technologies.

Advanced database management capabilities have already been added for PostgreSQL, along with deployment checks to improve the use of Flyway in CI/CD pipelines, and static data versioning to capture all database changes in version control. Work is now underway to provide the same advanced capabilities for the development of MySQL databases.

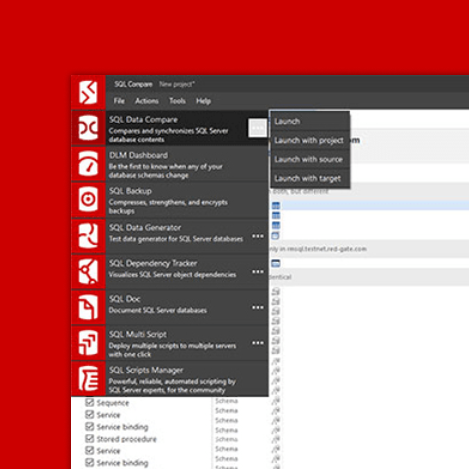

To help teams that work largely with SQL Server, Redgate has also been looking at ways to standardize development further. Features like SQL History in SQL Prompt now enable users to keep track of and search past queries, while shared styles and snippets save time, standardize code, and improve code quality. The development team are now looking at team sharing of styles, bulk formatting, auto-fixes and the automating of database documentation.

Optimizing cloud adoption

The biggest and most important change in enterprise technology in recent years has been the rise in cloud adoption. Flexera’s 2023 State of the Cloud Report, for example, shows that 87% of businesses now use multiple cloud providers, 11% use a single cloud provider, and 2% use a single private cloud environment.

Perhaps unsurprisingly, the leading providers continue to be AWS, Azure and Google Cloud Platform, with AWS and Azure fighting for top spot and Google coming a distant third, particularly among SMBs. Whichever the provider, the advantages being sought are typically cost savings, scalability and flexibility.

That isn’t the whole story, however, because while the attractions of the cloud are broadly the same, there are different usage patterns. While enterprises, for example, have 50% of their workloads and 48% of their data in the public cloud, among SMBs it rises to 67% of their workloads and 63% of their data. That’s presumably because they have fewer dependencies on large and complex legacy infrastructures.

The top cloud initiatives also change when comparing light and heavy users. Heavy users are focusing on optimizing existing cloud usage, implementing automated policies for cloud governance, and improving financial reporting on cloud costs. For light users, the focus shifts to migrating more workloads to the cloud, followed by the optimization of cloud usage and then moving from on-premises to SaaS versions of applications.

Across all organizations, however, the three top challenges are the same: managing cloud spend, security, and a lack of resources/expertise in terms of managing cloud environments. Concerns over spend and security have been consistent in past reports, but the lack of resources/expertise only entered the top three in 2022, and is probably a reflection of just how complex hybrid database estates have become.

Organizations of every size are now collecting, processing and storing data on-premises and in VMs, as well as in AWS, Azure and Google. As estates grow, both vertically in size and horizontally in their breadth across different platforms, they become more difficult to manage and monitor.

This is where tools like Redgate Monitor are increasingly being taken up. They offer organizations the opportunity to view every SQL Server instance, database, availability group, cluster, and VM in one central web-based interface, regardless of location.

If anything needs attention on any platform, whether on-premises or in the cloud, users can quickly drill down for detailed performance statistics. A focused set of performance metrics then helps pinpoint the cause of any issues quickly, while intelligent baselines can aid in finding the root cause, not just the symptom.

It also helps in deciding which databases to move to the cloud, which has now become an important consideration, as Flexera points out in its report: “The old ‘lift and shift’ mentality is becoming more antiquated as organizations factor in cost and performance when finding the most appropriate place for workloads in the cloud.”

One notable financial institution, for example, uses Redgate Monitor to see what applications can be migrated to the cloud based on their size, and especially their input and output in terms of how much data is going between them. The IT team can look at which databases would benefit from being hosted in the cloud, and then use the insights from Redgate Monitor to identify how big the databases are and what kind of infrastructure would be needed to stand them up in the cloud. This reduces the discovery process to a few minutes, rather than hours, and simplifies migration decision-making.

Key takeaway

The hybrid nature of database estates now makes it difficult to manage their complexity and monitor for performance issues both on-premises and in the various flavors of cloud. Hence the rise in the adoption of third-party monitoring tools which simplify the management of database estates and enable DBAs and development teams to instantly spot any issue, wherever it is.

To keep pace with the changing nature of database estates, Redgate added further features to Redgate Monitor in 2022, including a PowerShell API to add, remove and manage tags on monitored instances, Amazon RDS SQL Server and Azure Managed Instances; enhanced query recommendations; and stored procedure monitoring.

Support for SQL Server on Linux has also been added to Redgate Monitor, giving organizations the opportunity to monitor their databases, whether on Windows or Linux, using one tool with a single view of their database estate.

And to help teams that work with both SQL Server and PostgreSQL, PostgreSQL monitoring is now supported by Redgate Monitor to improve performance and reduce downtime. PostgreSQL instances are managed right alongside SQL Server instances in the same UI, offering a single pane of glass across the entire environment. Redgate Monitor provides in-depth diagnostics for query tuning and PostgreSQL performance issues, along with dedicated PostgreSQL metrics, and can monitor PostgreSQL instances running on either Linux or Amazon RDS.

Focusing on code quality as well as speed

Speed is often the benchmark used when measuring developer productivity. If you can release features faster and get value into the hands of users sooner, that’s good for business, right?

Unfortunately, that’s not often the case, as pointed out by Tony Maddonna, Microsoft Platform Lead, Enterprise Architect & Operations Manager at BMW. In a recent webinar, Accelerating Digital Transformation: The role of DevOps and Data, he said:

“I’m so tired of hearing that DevOps equals speed. A lot of people are enamored with speed, whether it’s speed of commit, deployment or feature delivery, but they forget about the quality aspect. If I’ve delivered faster, but it’s bad quality, unstable, unreliable or inefficient, that’s a failure.”

Counterintuitively, perhaps, the key to releasing code faster is to focus on developing quality code first because, by doing so, there are fewer failed deployments and less time spent reworking code. This in turn frees up developer time to focus on … developing quality code.

A great example of this is the notable research paper on developer productivity, The Developer Coefficient, published by Stripe in 2018. Stripe partnered with The Harris Poll to survey over 2,000 developers, technical leaders and C-level executives about their organizations’ business challenges, software development practices, and future investments. They wanted to determine the role that developer productivity plays in their success and the growth of worldwide GDP.

The research found that the average developer spends 3.8 hours a week correcting bad code, 13.5 hours dealing with technical debt by debugging, refactoring and modifying existing code, and 23.8 hours developing new code. It doesn’t sound too bad until you plot the time out visually.
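The split can be worked out from the three figures quoted above. The short sketch below is only an illustration of that arithmetic, with variable names chosen for this example rather than taken from Stripe's paper.

```python
# Weekly hours per activity, as quoted from The Developer Coefficient.
hours_per_week = {
    "correcting bad code": 3.8,
    "technical debt": 13.5,
    "developing new code": 23.8,
}

total_hours = sum(hours_per_week.values())

# Time that does not go into new code: bad code plus technical debt.
rework_hours = (
    hours_per_week["correcting bad code"] + hours_per_week["technical debt"]
)
rework_share = rework_hours / total_hours

for activity, hours in hours_per_week.items():
    print(f"{activity}: {hours:.1f} h ({hours / total_hours:.0%})")

print(f"Total: {total_hours:.1f} h per week")
print(f"Rework share: {rework_share:.0%}")
```

On these numbers, roughly 17 of the 41 hours in the working week, or about 42%, go on fixing and maintaining existing code rather than writing new code.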

If this were a pie being made for customers, just under half wouldn’t make it to the table. Perhaps unsurprisingly, 59% of respondents in Stripe’s research paper agreed with the statement: “The amount of time developers at my company spend on bad code is excessive.” So excessive that Stripe estimated it adds up to roughly $85 billion of global GDP being lost annually.

That sounds a bit on the high side, but a good point is being made. The 42% slice of the pie was also examined in greater depth in Code Red: The Business Impact of Code Quality, a white paper first presented at the 2022 International Conference on Technical Debt. It found that code quality has a dramatic impact on time-to-market as well as the external quality of products, with high quality code resulting in:

- 15x fewer bugs

- The ability to implement features twice as fast

- A 9x lower uncertainty in completion time

One of the keys to gaining those advantages is having a Test Data Management approach in place, where code is tested for quality as early in the development process as possible. This is particularly important when releasing database changes because they often become a bottleneck during deployments and the reason for failed deployments.

If the proposed changes are tested against a copy of the production database that isn’t representative of the size, distribution characteristics or referential integrity of the original, the quality of the code cannot be guaranteed.

This is where a tool like Redgate Clone comes into play. It takes advantage of database virtualization technologies to create small and light copies, or clones, of production databases, which can be provisioned in seconds for use in testing and development.

Teams working with SQL Server, PostgreSQL, MySQL and Oracle can use Redgate Clone to branch, reset and version copies of database instances at application-code speed as they develop, with zero risk of affecting other team members.

Key takeaway

Releasing features and improvements faster increases the frequency of delivering value. However, speed often comes with the compromise of quality. As a result, more and more IT teams are adopting Test Data Management to ‘shift left’ the testing of database changes against a realistic, but compliant, copy of the production database. This practice flags any potential errors or problems earlier in the pipeline, at a point when they can be corrected quickly and efficiently.

Using a tool like Redgate Clone, developers can self-serve production-like clones of database instances in seconds, and validate database updates with an advanced set of tests, checks and reports, which can be run as part of an automated process.

Released in early 2023, Redgate Clone already works with SQL Server, PostgreSQL, MySQL and Oracle databases. Development is now underway to enhance Redgate's data masking and cataloging capabilities to ease the provisioning of sanitized data.
