Static Data and Database Builds

Whichever way you wish to ensure that a database, when built, has all the data that will enable it to function properly, there are reasonably simple ways of doing it. Phil Factor explains the alternatives.

Often, we can’t build a fully functional SQL Server database just from the DDL code. Most databases also require what is often referred to as ‘static’, or ‘reference’ data, which will include such things as error messages, names of geographical locations, currency names or tax information. This data must be in place before the database can be used in any effective way. The static data needs to be in source control because, like the code, if it changes you need to know why and when.

The idea of ‘static data’ or ‘reference data’ is rather an alien concept for databases (it comes more from languages like C with their idea of enumerations). Relational databases do not distinguish between static and dynamic data, and all that is required to make data static is access control. Nevertheless, if this data is essential for a functioning database, then it needs to be part of the build, and this article will discuss ways to include it.

If the required data is a list of major cities, or world currencies, then it is safe to call it static, within the lifetime of a database release. However, there are occasions when the data is more volatile, yet must be there for the database to function. This is likely to require more complicated handling, which we’ll discuss later.

If the volume of static data is small it is easy just to include it in the object-level build script for that table, with an INSERT statement, so we’ll start there. Next, we’ll discuss a couple of alternative techniques, such as using a view of table-value function, in place of any table that requires static data. Some database developers prefer a special post-build stage to insert static data into the tables that need it. This technique is better for larger volumes of static data, where you’ll be importing it using bulk copy program (bcp), so we’ll cover that approach too.

Generating schema and data scripts

Both SSMS and SMO allow you to generate scripts that include both schema and data for tables. They are intended only where the table has fewer than a thousand rows.

In SSMS, go to the browser pane, right-click on the database, and from the context menu select Tasks, followed by Generate Scripts and then choose either all the tables, or specific tables. Then, on the Set Scripting Options screen, select Advanced, find the Types of data to script option, and change it from Schema only to Schema and Data (or to Data only if you want to script the data separately from the schema). This technique uses single row INSERT statements so is certainly only suitable for small volumes.

Alternatively, I have included in my article Scripting out several databases on a server a way of doing this in PowerShell.

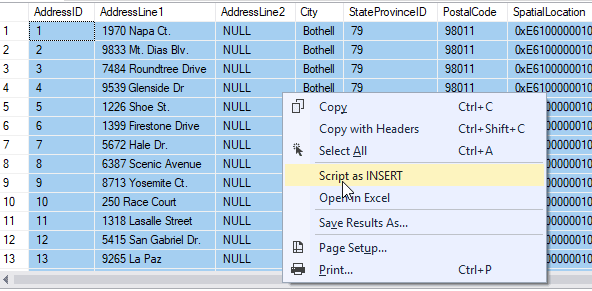

Sometimes, you’ll want to create a copy of a table, including data, by scripting the results of a query into a multi-row VALUES clause. This is faster than inserting the individual rows. If you have SQL Prompt, you can generate these data insertion scripts from a grid result, using its Script as INSERT feature. You will get a new query pane with a creation script for a temporary table, a set of insertion scripts and a delete table statement at the end. You then edit this into the form you need.

Including static data in the object-level build scripts

A CREATE TABLE statement cannot determine what data is placed in a table, but it is perfectly easy to insert the data required to ‘initialize’ a table, using DML statements, directly after the DDL statement that builds the table. If the subsequent table must be read-only, then we deny INSERT, UPDATE and DELETE access to the table. This doesn’t affect the initial INSERT statement, because this will be done by a login that has System Administrator or Database Owner privileges.

Therefore, if a table such as TypeOfPhone, Honorifics or Salutation needs to be filled in with small amounts of reference data, then you could do it using a multi-row INSERT statement, or an INSERT from a UNION query. If the volume of required data is significant, then all relational databases also have a ‘Bulk Import’ device (BCP in Sybase and SQL Server) to deal with this.

AdventureWorks is typical in that it has several tables that aren’t going to alter very often, such as Production.ProductCategory. An object-level build script for this table would be as simple as that shown in Listing 1 (leaving out comments, extended properties and so on).

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 |

CREATE SCHEMA production CREATE TABLE Production.ProductCategory ( ProductCategoryID INT IDENTITY(1, 1) NOT NULL, Name NVARCHAR(50) NOT NULL, rowguid UNIQUEIDENTIFIER ROWGUIDCOL NOT NULL, ModifiedDate DATETIME NOT NULL, CONSTRAINT PK_ProductCategory_ProductCategoryID PRIMARY KEY CLUSTERED (ProductCategoryID ASC) -- WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON ) ON [PRIMARY] ) ON [PRIMARY]; GO ALTER TABLE Production.ProductCategory ADD CONSTRAINT DF_ProductCategory_rowguid DEFAULT (NewId()) FOR rowguid; ALTER TABLE Production.ProductCategory ADD CONSTRAINT DF_ProductCategory_ModifiedDate DEFAULT (GetDate()) FOR ModifiedDate; GO SET IDENTITY_INSERT Production.ProductCategory on INSERT INTO Production.ProductCategory (ProductCategoryID, Name, rowguid, ModifiedDate) VALUES (1, N'Bikes', '{cfbda25c-df71-47a7-b81b-64ee161aa37c}', N'2008-04-30T00:00:00'), (2, N'Components', '{c657828d-d808-4aba-91a3-af2ce02300e9}', N'2008-04-30T00:00:00'), (3, N'Clothing', '{10a7c342-ca82-48d4-8a38-46a2eb089b74}', N'2008-04-30T00:00:00'), (4, N'Accessories', '{2be3be36-d9a2-4eee-b593-ed895d97c2a6}', N'2008-04-30T00:00:00'); SET IDENTITY_INSERT Production.ProductCategory off |

Listing 1

Even though a build script like this allows you to do any post-build initialisation you want, most object-level scripts don’t generally seem to include either unit tests or initialization. This is for no good technical reason, but purely by convention, and because any change in data is considered a DDL change.

This unwillingness to integrate other types of SQL statements into a DDL script could have started out as a security issue, to prevent developers creating accounts with greater permissions than the one that the DBA has assigned them. It is, however, easy to check for the presence of DCL (Data Control Language) statements. It is sometimes said that you shouldn’t mix logic and data. This emerges as a superstition that one must not mix DML and DDL in the same script, but the reason for this seems to have been lost. Certainly, it doesn’t impact migration-style build scripts that do precisely that.

Building a view or table-valued function

If your build system doesn’t, for some reason, allow you to include initialization routines in the object-level scripts, then you can build a view instead, based on a derived table, instead of using a table. We can do this using either a UNION or the multi-line VALUES clause. Not much is lost through doing this, for a reasonably small table, but such a view cannot be referenced by a foreign key. Also, the resulting view, in either case, cannot be indexed since both the UNION technique and using a derived table precludes it.

Listing 2 shows the classic UNION ALL method of creating static data tables and is fine for anything less than 8000 rows.

|

1 2 3 4 5 6 7 8 9 10 |

CREATE VIEW Production.ProductCategory WITH SCHEMABINDING AS SELECT 1 AS ProductCategoryID, N'Bikes' AS NAME, '{cfbda25c-df71-47a7-b81b-64ee161aa37c}' AS rowguid, N'2008-04-30T00:00:00' AS ModifiedDate UNION ALL SELECT 2, N'Components', '{c657828d-d808-4aba-91a3-af2ce02300e9}', N'2008-04-30T00:00:00' UNION ALL SELECT 3, N'Clothing', '{10a7c342-ca82-48d4-8a38-46a2eb089b74}', N'2008-04-30T00:00:00' UNION ALL SELECT 4, N'Accessories', '{2be3be36-d9a2-4eee-b593-ed895d97c2a6}', N'2008-04-30T00:00:00' |

Listing 2

Nowadays, we would more likely use the neater multirow VALUES clause, as shown in Listing 3, but again, an index cannot be created for a view containing a derived table.

|

1 2 3 4 5 6 7 |

CREATE VIEW Production.ProductCategory WITH SCHEMABINDING AS SELECT ProductCategoryID, Name, rowguid, ModifiedDate FROM (VALUES (1, N'Bikes', '{cfbda25c-df71-47a7-b81b-64ee161aa37c}', N'2008-04-30T00:00:00'), (2, N'Components', '{c657828d-d808-4aba-91a3-af2ce02300e9}', N'2008-04-30T00:00:00'), (3, N'Clothing', '{10a7c342-ca82-48d4-8a38-46a2eb089b74}', N'2008-04-30T00:00:00'), (4, N'Accessories', '{2be3be36-d9a2-4eee-b593-ed895d97c2a6}', N'2008-04-30T00:00:00') )categories(ProductCategoryID, Name, rowguid, ModifiedDate) |

Listing 3

If you need the resulting table to be indexed, you can use a schema-bound multi-statement table-valued function instead of a view. However, it only gives an advantage in certain unusual circumstances. For most purposes, a view containing either a UNION or a derived table wouldn’t perform much better even if it was an index. In my testing, I joined a view, based on a derived table with 1480 rows, to a two-million row table. The query performance was only 40% -50% slower than when using an indexed table.

Using sp_ProcOption

As an alternative, it is possible to specify a stored procedure in 'master' for execution on start-up of SQL Server. This obviously cannot have parameters, but you can use it to check all tables that require ‘static data’ and insert it if necessary. There are several ways of doing this. All the data can be stored within the stored procedure, in SELECT…VALUES statements.

Although it is effective, it wastes resources since the stored procedure should only be needed once. It is also frowned-on by many DBAs because it is easy to forget about the presence of such a stored procedure. You can easily check on them with…

|

1 2 3 4 |

SELECT [name] FROM sysobjects WHERE type = 'P' AND OBJECTPROPERTY(id, 'ExecIsStartUp') = 1; |

Listing 4

It also is a server-based solution rather than a database solution, and so may require a separate installation script. However, you’ll probably need these anyway for Agent jobs, so this is usually just a minor complication.

Using a post-installation script

Almost all database build and release systems allow post-installation scripts. Where a database table is large, it is far better to store the data as native-format BCP and install this after the database itself is built. I have published several ways of doing this, even including using JSON-format scripts to do represent the data rather than native BCP..

If this post-installation script is run with elevated permissions that allow insertion into a static table, then this becomes very easy. The problem is that the data must either copied to a local directory on the server that is hosting the instance on which your database is being built, or it must be on a network location that is accessible to the target server via a UNC.

Every database developer has their own favored way of doing this. I use a directory that has native BCP files in it. The name of the file represents the name of the table. The name of the directory represents the database. If you need to put initial data into a table, you just put a BCP file in a directory. The post-build PowerShell script looks in the directory, picks up each file and puts it into the appropriate table. The order of insertion doesn’t matter, because a native BCP will disable check constraints by default, but you will just need to ensure that CHECK constraints are enabled afterwards otherwise SQL Server will mark the table as ‘untrusted’, and therefore will be unable to use CHECK constraints for helping to create effective query plans.

If your build server doesn’t allow PowerShell scripts than you can do a similar operation in SQL, but you need a different way of specifying the tables that need data, and the order of insertion. I use a manifest that is based in a view, or a simple list.

Dealing with more volatile data

A build is just a matter of executing the code to create the database and all the objects within it, and then adding any required data to it. If data is truly static (such as a list of days of the week) then it is simply inserted in a post-build script, using data stored in source control, as described previously. If you’re updating a database, rather than doing a fresh build, that has truly static data, and you aren’t using the ‘view of derived table’ technique, then you ought to over-write the target database’s static data with that which is appropriate to the version.

What if your static data isn’t static, but ‘slow-moving’? In which case, you must ensure that the ‘static’ slow-moving data contains all the values in the dataset stored in source control as well as any other data in the target ‘slow-moving’ table that has ‘slowly’ been inserted in the meantime. How do you deal with legitimately changed data? This will most likely need to be changed in source control before the build process. If, for example, an exchange rate changes, then that should be updated immediately in production by a privileged user and reflected immediately in an update of the development static data in source control. If, however, static data is personal or private, then this isn’t possible. Instead, the production values of the static data must be preserved only in production and must be represented in test and development by anonymized or simulated data. This means that under these circumstances only the schema can be synchronized when a new version is delivered to staging or Production databases.

Conclusions

Whichever way you wish to ensure that a database, when built, has all the data that will enable it to function properly, there are reasonably simple ways of doing it. When there are no team-based constraints to the contrary, I personally prefer any table source with static data as a view with a derived table. The insertion of data as part of the object-level build is a relatively safe method just so long as any error stops the build. You can even insert all the data after the schema is built.

Matters can get confusing if two different development efforts are working with different data. Testers, for example, often want, as a deliverable of the build process, a clean schema with no table data. They can then insert different data sets according to the type of test. With a development team that communicates well, this sort of confusion is easily ironed out, especially where there are so many options in ensuring that static data can be put in the build in a number of ways.