How a Viking Princess defeated ChatGPT

In the first of a series of articles about how ChatGPT and other AI tools like DALL-E will change the way we work with words, code and images, we put ChatGPT through its paces and find out how intelligent it really is, and what its limitations are when it meets someone from over a thousand years ago.

You may well have heard of ChatGPT, the new wunderkind AI chatbot that can write articles, essays and computer code, compose poems and song lyrics, provide answers to test questions, and interact with users in a conversational manner.

In more technical terms, it’s a Large Language Model which, according to Stanford University, has 175 billion parameters and was trained on 570 gigabytes of text. The ‘GPT’ in the name stands for ‘Generative Pre-trained Transformer’.

In more common terms, it’s a robot that answers almost any question you put to it with text that you can simply copy and paste anywhere. It’s been said that it’s the end of Google. You don’t get pages of potential answers to a question; you get the answer in the form of an essay, an article, or a string of code.

But there’s a problem.

Meet Thora

Thora Hákonsdóttir was born in 952, the daughter of King Haakon the Good of Norway. He ruled Norway for 27 years until 961, when he died following the Battle of Fitjar in Hordaland. He actually won the battle, fought against three of his nephews, but he passed away shortly after, some say from a poisoned arrow shot by the shoe-boy of the nephews’ mother, the infamous Gunnhildr, known as the Mother of Kings. (If it sounds like an episode of the TV series Vikings, it’s not. This is the real deal.)

And Thora, who was nine years old at the time? She disappeared. Literally. In all of the Norse sagas and tales that stem from that era, there is only one sentence about Thora, in chapter 32 of Haakon the Good’s Saga:

He had only one child, a daughter, called Thora, and had no son.

Now that is unusual. When the sagas name a character from the oral history they transcribe, you also get a run-down of their ancestors and their own life. Where they lived, what they did, who they married, who their children were. It goes on. And on.

Apart from Thora.

This has puzzled me for a decade and more. How could a Viking Princess, the daughter of one of the most famous Kings of Norway, disappear? I found one sliver of a hint years ago that she was married in 978, in Orkney. But was she?

Where better to turn than ChatGPT? Surely the most advanced chatbot ever created, its memory crammed with an estimated 300 billion words of text scraped from thousands and thousands of sources all around the world, could help.

I decided we should have a chat.

Meet Charlie

I named ChatGPT ‘Charlie’. Okay, I’m anthropomorphising a chatbot, but hey, if ChatGPT is as good as they say it is, then it should have a name.

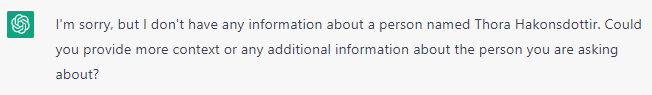

So I asked Charlie the obvious first question: “Who was Thora Hakonsdottir?”

No problem. You can converse with Charlie. I expanded on the answer, and we were off to the races: “She was the daughter of King Haakon the Good.”

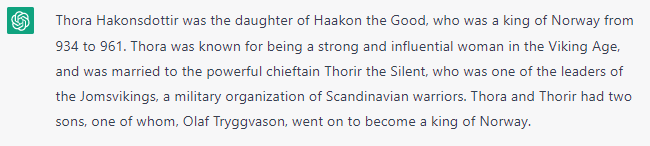

Only we weren’t. Thora never married Thorir the Silent. I’m a history geek. It was time to disagree with Charlie: “You’re incorrect. Thorir the Silent married Ålov Årbot Haraldsdatter, daughter of Harald I of Norway, not Thora Hakonsdottir.”

With that established, the next question followed naturally: “Who did marry Thora Hakonsdottir, who was the daughter of Haakon the Good?”

Darn. The same incorrect response. A dead end. Even with 175 billion parameters, 570 gigabytes of text, and 300 billion words scraped from thousands and thousands of sources, Charlie still wasn’t the sharpest chisel in the toolbox.

And that’s the point of the first post in this series about AI. AI in general, and ChatGPT in particular, isn’t about accuracy: it’s about probability. Based on the machine learning that goes on behind the scenes, AI software gives you a statistically probable response based on what’s in its database. Yep, AI is a database on steroids. It isn’t intelligent and it doesn’t think.

It can’t be prompted to learn, either, based on responses like those I gave above. If anyone did have the bright idea that you could program AI software to ‘learn’ from responses, you’d end up with a headline something along the lines of the one that appeared in The Verge on March 24, 2016:

Microsoft were sucker-punched when they introduced Tay to the world, an AI chatbot that would get smarter by engaging with people, and learning from them, on Twitter. It did ‘learn’, but it learned about the worst of people, not the best of people.

Rather than facing the same risk, ChatGPT is closed off in an endless loop, regenerating the same answers based on the same database, huge though it is. That database also only goes up to 2021, so it doesn’t know everything. It never gets better, it never gets worse, it just is. And that ‘is’ isn’t intelligent. It’s just artificial.

So while Charlie does have its uses, it needs a warning label like one of those public information ads from years ago: DON’T TRUST EVERYTHING CHARLIE SAYS.

Don’t get me wrong here. I think the way ChatGPT presents its responses is terrific. The text looks like it has been written by a human, and it gives the facts in a logical, linear way. This is why it’s getting a lot of attention. If you’re a high school student with an essay due tomorrow morning, ChatGPT is a good way to get a big head start on meeting that deadline. But make sure you double-check everything.

Consider ChatGPT as a huge, sometimes unreliable encyclopedia that you can ask any question you like. Why is the sky blue? What is Database DevOps? Why do dogs bark and not meow? It’s got answers to all of those and a lot more. But it bases its responses on probability, depending on the preponderance of reference points in the data in its database, rather than on the accuracy of that data. It doesn’t think, it computes, and it’s sometimes wrong. Hence the warning label.
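If you like to see an idea in code, here is a deliberately tiny sketch of the "preponderance beats accuracy" point. It is a toy, not how ChatGPT actually works: the function name `most_probable_answer` and the sample corpus are made up for illustration, and a real language model predicts words with a neural network rather than counting whole answers. The toy simply picks whichever answer appears most often in its data, whether or not that answer is right.

```python
from collections import Counter


def most_probable_answer(snippets):
    """Return the most frequent answer and its share of all snippets.

    Hypothetical helper for illustration only: frequency is the sole
    criterion, so correctness never enters the decision.
    """
    counts = Counter(snippets)
    answer, count = counts.most_common(1)[0]
    return answer, count / len(snippets)


# Made-up corpus (an assumption, not real data): nine sources repeat
# a mistaken claim and only one source carries the correct one.
corpus = ["popular but wrong claim"] * 9 + ["rare but correct claim"]

answer, share = most_probable_answer(corpus)
print(f"{answer} ({share:.0%} of sources)")
```

In this toy, correcting the answer in conversation changes nothing either, because the counts in `corpus` stay the same. The only way to get a different result is to change the data.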

But what about Thora?

Good point. I’ve found a trace of Thora in the Orkneys in 978 – an analogue trace, not a digital one. The Viking Princess, orphaned in Fitjar in 961, had journeyed to the Orkneys by 978. Why had she crossed 300 miles of open sea? How had she survived those 17 years? What did she do? That’s a story for another day. And ChatGPT? Sorry, Charlie, you can’t help me. I’ve seen enough. I’m going back to the old-fashioned way of doing things. AI is what it says on the tin. It’s artificial. I’m looking for real.

If you’d like to know if AI chatbots like ChatGPT and Bing can be used to create content for you, read the second post in this series: Can ChatGPT be your next Technology Writer?