Handling failures when deploying to multiple databases with SQL Change Automation

How do you handle deployment failures with a single-tenant database architecture?

We’ve had a question come up from a couple of customers regarding an interesting use case with Redgate’s SQL Change Automation. In this scenario, customers say:

-

- We have a single-tenant architecture where each of our customers has their own database.

- The databases have identical (or nearly identical) schemas, which we manage in a single project.

- When deploying database changes, we loop through the databases and deploy the project sequentially.

A natural question that arises is what to do when a deployment fails against a single database.

For example:

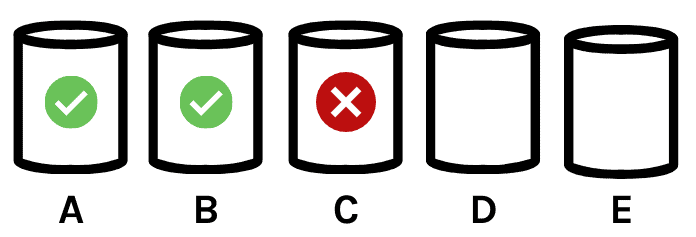

-

- We’ve deployed successfully to the CustomerA and CustomerB databases.

- Deployment to the CustomerC database fails midway through.

- We have our deployment process set to stop on error, so deployment has not occurred to databases for CustomerD and CustomerE.

One approach that seems simplest to folks is wrapping the entire multi-database deployment into a single transaction.

In this post, I cover why that’s not a good idea, then step through alternatives for handling deployment failures in this single-tenant database scenario.

Along the way I discuss why it’s worth thinking through your plan to handle a larger class of failures: not just failures that might happen during a deployment, but also problems which might be caused by changes made by a deployment that are only detected afterward.

Short on time? Jump to a summary of the post.

A cross-database transaction isn’t the right solution

SQL Change Automation doesn’t support doing a single transaction across multiple databases. We are unlikely to change this because doing a cross database deployment in a single transaction in SQL Server quickly becomes problematic.

SQL Change Automation doesn’t support doing a single transaction across multiple databases. We are unlikely to change this because doing a cross database deployment in a single transaction in SQL Server quickly becomes problematic.

Deploying with cross-database transactions requires holding schema modification locks for the duration of the transaction: this is a recipe for blocking chains and may also invite deadlocks. It also risks building up large amounts of transaction log space across all databases. Finally, it can lead to very long rollback scenarios in the case of failure.

Additionally, cross-database transactions bring in significant limitations for your environment over time:

-

- Certain operations in SQL Server are not allowed to be done within explicit transactions, so this wouldn’t be a reliable approach for all changes — you’d end up with weird exceptions for some deployments when you weren’t covered.

- If your production environment uses AlwaysOn Availability Groups, you will be required to use the Distributed Transaction Coordinator (DTC) for cross-database operations.

- If you wish to deploy some databases to a hosted cloud service such as Azure SQL Database or Amazon RDS SQL Server, this approach also won’t fit.

- It may also become attractive to deploy to multiple databases in parallel, rather than deployment sequentially – at which point the single transaction approach won’t fit.

A final consideration is that not all failures are deployment failures.

What happens if our release introduces bad code which causes a significant production issue? We need to be prepared to react to this as well. Organizations often want a process to handle general failures in the release process, not simply deployment failures.

Database backups are useful for one type of deployment failure, but not all

Backups are another approach that seem like a simple fix, but often don’t make sense in practice for most failure scenarios.

Backups are another approach that seem like a simple fix, but often don’t make sense in practice for most failure scenarios.

The simplest use of a backup is to restore over a database, replacing it with an earlier copy. This often doesn’t fit the need of deployment scenarios:

-

- Most teams deploy changes to systems while they are live and open to users. In this situation, using a database backup to recover from a deployment will typically cause data loss, which is unacceptable.

- Teams who deploy changes to systems only during downtimes typically don’t rely on backups for timing and scalability reasons. There is often a requirement to limit the amount of downtime. Restoring databases is time consuming, especially as data sizes grow, making this a difficult fit.

The ability to restore backups is an important part of planning for failure handling for one important scenario: what if a deployment causes data loss?

Data recovery scenarios typically involve restoring a copy of the database to an alternate server or an alternate name, then using the recovered database to repair data in the impacted database.

Some teams leverage snapshots in SAN and storage technologies to automate the process of taking backups prior to deployments and to mounting snapshots of backups when needed. This approach can be very successful but requires the right kind of technology to support automation and ensure that performance is not impacted.

In some common scenarios, the best approach is to re-run the deployment

There are several scenarios where the right approach will be to re-run the deployment.

There are several scenarios where the right approach will be to re-run the deployment.

For example, let’s say a deployment to the CustomerC database fails due to a deadlock. We’ve already deployment to CustomerA and CustomerB, but not yet deployed to CustomerD, E, F.

In this case, we should check if there are any long-running queries in production which were involved in the deadlock. If activity has cleared, it makes sense to re-deploy to the database where the deployment failed, then continue to the remaining databases.

SQL Change Automation is set up to track which migration scripts have been deployed and will only re-deploy pre and post deployment scripts (which are designed to be run on every deployment), so it is safe to re-run deployments.

Similarly, in the case of power loss, network failures, or timeouts due to blocking, re-running a deployment is generally the best approach.

What if we want to revert all the deployments / aka roll the changes back?

Undoing database changes which have been committed is tricky business. (To be fair, this is why the “single transaction” approach is tempting to many! But as discussed above, unfortunately that has flaws and only covers limited scenarios.)

Many organizations follow patterns to reduce or eliminate the need to roll back changes

The truth about stateful databases is that we don’t truly have the ability to simply ‘undo’. We are always applying a new version of the code to the database.

Common approaches for ‘rolling back’ used in application deployment– such as the Blue/Green deployment model—don’t work for databases. Many organizations use the approach of the “Expand/Contract” model when designing database changes. This approach works to ensure that changes are always non-destructive.

If you are not able to implement the Expand/Contract model fully, you may want to work some “defensive” actions into your workflow.

The SQL Compare command line enables you to reset objects to their state before the deployment

SQL Change Automation projects don’t just contain the migration scripts for the change you are going to apply. They also carry a snapshot of the state for the of the database after deploying the change.

SQL Change Automation projects don’t just contain the migration scripts for the change you are going to apply. They also carry a snapshot of the state for the of the database after deploying the change.

This means that you can automate a comparison generating an ‘undo’ script in your pipeline:

-

- Create a release artifact with SQL Change Automation. This can be done against the production database, or against a restored copy / staging instance of the database if you have performed checks that the schema is identical.

- Call the SQLCompare command line

- Compare the database where you will deploy against the schema model in the release artifact.

- Use /scriptfile to output the results to a script in your release artifact file structure where you store rollback scripts

- Use the script as desired, either in automation or run manually

Consider: will this introduce a risk of data loss?

For example, if a deployment added a column or added a table, is it safe to drop that column or table? Could that result in data loss?

The answer may vary across deployments. Will the staff who are handling the deployment and the rollbacks have enough information to know the answer quickly for a given deployment?

Because of the complexity of rolling back changes to tables, some teams choose to use this automated rollback choice for “programmable objects” only, such as views, procedures, and functions. This limited rollback script can also be generated with SQLCompare, using a script like this:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

$SQLCompare="${env:ProgramFiles(x86)}\Red Gate\SQL Compare 14\sqlcompare.exe"# full path $MyServerInstance="DeploymentTargetInstance" $MyDatabase="DBName" $SchemaModelPath="C:\ReleaseArtifactPath\Schema-Model\" $AllArgs = @("/server1:$MyServerInstance", "/database1:$MyDatabase", "/scripts2:$SchemaModelPath", "/include:StoredProcedure", "/include:View", "/exclude:View:__MigrationLogCurrent", "/include:Function", "/Options:DecryptPost2KEncryptedObjects,DoNotOutputCommentHeader,IgnoretSQLt,IgnoreWhiteSpace,IgnoreWithElementOrder,UseCompatibilityLevel,IgnoreUsersPermissionsAndRoleMemberships", "/ScriptFile:C:\ReleaseArtifactPath\Rollback.sql", "/force" ) &$SQLCompare $AllArgs |

Rolling back table changes with “down” scripts

SQL Change Automation supports creating “down” scripts in advance if you need to prepare custom code to revert changes prior to a deployment.

Since a rollback script for programmable objects (stored procedures, views, and functions) can be created with automation via the SQLCompare command line, teams often save time by leveraging a combined approach and only creating “down” scripts for table changes and data modification scripts.

Completing the circle: bring the state of the rollback into your project

It’s important to follow up on any changes to production. Your project needs to have the information about which changes were deployed to production.

It’s important to follow up on any changes to production. Your project needs to have the information about which changes were deployed to production.

To determine the right course of action, think through what should happen on the next deployment. Should it deploy some of the changes from the prior deployment? All of them? None of them?

To “complete the circle” after a rollback, it’s important to understand that:

-

- Migration scripts are run one-time only (with the exception of pre- and post- deployment scripts). If you revert changes that were deployed in a migration script and wish to “re-run” the migration, you need to generate a new migration script for that code. While this is an extra step, it is a good thing as it preserves a linear log of changes to your database.

- Programmable objects are rerunnable scripts for views, functions, and stored procedures. SQL Change automation automatically deploys programmable objects by comparing a checksum of the definition in source control with the definition of the target database. If you revert changes which were made to a programmable object, you need to update the definition of that object in version control to prevent it from being re-deployed.

Bringing this together: a summary of approaches

We’ve covered a lot in this post. Here’s a quick run-through of things to consider when planning to handle deployment failures.

Restoring database backups makes sense when deployment failures cause data loss.

For business-critical systems, integration with SAN or cloud technologies to leverage snapshots prior to deployment and to simplify the process of mounting snapshotted databases when needed can help significantly in data loss scenarios.

Rerunning the deployment makes sense when the deployment failure was due to a transient error such as a network failure, power outage, deadlock, or timeout. Engineers will need to make sure that the transient issue has passed, and that the next deployment won’t simply have the same failure.

Operational runbooks and automation make validating environment health and activity much simpler (or automatic) following a deployment failure.

Utilizing the expand/contract model when designing your deployments helps minimize your need to undo deployments overall. Learn more about that in this post.

Reverting schema changes in the deployment: There are two popular methods for this, which are often used in combination:

-

- The SQLCompare command line can generate a rollback script prior to deployment. This is most popular for stored procedures, views, and functions, as reverting these changes has a lower risk for causing data loss.

- “Down” scripts may be written to revert schema changes. This is most popular for changes to table schema and for data modifications.

If you use either of these approaches, ensure that you follow up any deployment failures which require rollbacks by checking the final state of objects into version control.

Ready to read more?

You may also enjoy these resources (some of which I’ve linked to above):

- How to Avoid Rollbacks in Database DevOps

- Zero Downtime Database Deployments are a Lie

- Evolutionary Database Design by Pramod Sadalage and Martin Fowler

- Refactoring Databases: Evolutionary Database Design by Pramod Sadalage

- Creating ‘Down’ Scripts in SQL Change Automation (product documentation)

- SQL Compare Examples using the Command Line (product documentation)