The Need for a Data Catalog

In the event of a breach of personal data, an organization must be able to prove that it understands what data it holds, where that data is held and how it is used, and that it has enforced the required standards of access control and security. To make all this possible, it is essential to build a complete model of the data and its lineage, and a data catalog is the first step in this process.

Five hundred million people in the EU, along with nearly forty million residents of California, now have data rights that are guaranteed by law. Data about individual people, whether they are employees, customers or members of the general public, belongs to them, not to the organization that holds it. Organizations are accountable to the owners of the data for the care with which they manage and use it. With more legislation on the way, the executives of almost every organization are now obliged to be aware of the risks presented by processing personal and sensitive data, and to manage those risks appropriately. If any of the organization’s activities risk infringing people’s rights and freedoms, then the organization must assess and mitigate these risks and keep them under review.

Every organization needs to raise staff awareness of the legal requirements around the storage and use of personal data. It also needs to create a data catalog that describes precisely what data is being processed, where and why. It can then use this information to build up knowledge of data lineage: where the data comes from, and where it goes.

Does every organization need a data catalog?

Under the GDPR, if high-risk data processing is likely to be taking place, or is planned, then the organisation needs to start building and demonstrating compliance with the legislative frameworks that cover data protection in the areas in which it operates, and to establish the nature and risk of the data processing that is being considered or undertaken.

Even if the organisation can be certain that no such risks are associated with the data that it collects and uses, it still needs to document how it established, beyond doubt, that its data processing is low-risk (see, for example, the Article 29 Working Party’s guidelines on Data Protection Impact Assessments). Whether your organisation has no risk at all, a low risk or a high one, you still need to document the evidence for that conclusion. You can only do this by establishing and recording where and how the data is held, and who in the organisation has access to it.

Whether or not you have high-risk data, you must know that you’ve checked thoroughly. Not knowing about the data, or the risks, is not a legitimate excuse for the executives of an organisation.

Perhaps you are blissfully unaware that some AI whizz is using the data you hold to profile customers for marketing campaigns, or of which third-party organizations you use to track people who look at your adverts. You may also not know that Sales have got hold of customer data by joining several sources on a common identifying attribute, or that Manufacturing create “productivity profiles” for various teams and store them in a NoSQL database in the cloud.

If any or all of this is happening, the law is clear: the executives of the organisation have failed in their responsibility to be aware of, and accountable for, what happens to the data in their charge.

Data protection and privacy measures

To reduce risk, you need to protect sensitive and personal data both at rest and in transit. A data catalog will tell you exactly what sensitive and personal information you store, and where. After that, you also need to understand all the steps in the data lineage, as data moves through the organisation. A data catalog will help you do that too: even if the documentation of the organisation’s data processes is incomplete, column and key names tend to be preserved as data moves through the organisation, and the data itself tends to keep its characteristics, so it can be traced.
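As a rough illustration of tracing data by its names, the Python sketch below groups the column and key names found in several data stores once case and separators are ignored, and keeps only the names that turn up in more than one store. The store names and columns are hypothetical, and the assumption that naming conventions differ only in case and punctuation is a simplification; renamed columns would still have to be matched by profiling the data itself.

```python
import re
from collections import defaultdict

def normalize(name: str) -> str:
    """Reduce a column or key name to a comparable token by lower-casing it
    and dropping everything except letters and digits."""
    return re.sub(r"[^a-z0-9]", "", name.lower())

def trace_by_name(inventory: dict) -> dict:
    """Group (store, original name) pairs by normalized name, keeping only
    names found in more than one store: candidate links in the lineage."""
    groups = defaultdict(list)
    for store, names in inventory.items():
        for name in names:
            groups[normalize(name)].append((store, name))
    return {
        key: hits
        for key, hits in groups.items()
        if len({store for store, _ in hits}) > 1
    }

# Hypothetical inventory of three data stores and the names they contain.
inventory = {
    "Sales.dbo.Customer": ["CustomerID", "CustomerEmail", "DateOfBirth"],
    "warehouse/customers.csv": ["customer_id", "customer_email"],
    "crm_export.json": ["customerEmail", "lastContacted"],
}

for key, hits in trace_by_name(inventory).items():
    print(key, hits)
```

Matches found this way are only candidates; each one still needs confirming against the data before it is recorded as part of the lineage.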

Creating and implementing an encryption policy

One of the quickest ways of reducing risk is to encrypt the data. For example, if you know which sensitive and personal information you store, and in which SQL Server databases, then you know exactly what data must be encrypted in those databases.

The GDPR emphasizes the effectiveness of encryption for high-risk data, and guidance on applying it recommends an encryption policy that governs how and when you implement encryption, the encryption standards to use (e.g. FIPS 140-2 and FIPS 197), and the categories of data that require it. It also recommends that staff are shown how to apply this policy and are trained in the use and importance of encryption.
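To make such a policy checkable by tooling, it can help to hold it as data alongside the catalog. The Python sketch below shows one possible shape, not a standard one: the category names, the `CatalogEntry` fields and the standard attached to each category are illustrative assumptions, and a real policy would be agreed with the organization's security and compliance teams.

```python
from dataclasses import dataclass

# Illustrative policy: which categories of data must be encrypted, and to what standard.
ENCRYPTION_POLICY = {
    "payment_card": {"encrypt": True, "standard": "AES-256 (FIPS 197)"},
    "health": {"encrypt": True, "standard": "AES-256 (FIPS 197)"},
    "contact": {"encrypt": True, "standard": "AES (FIPS 197)"},
    "public": {"encrypt": False, "standard": None},
}

@dataclass
class CatalogEntry:
    location: str    # e.g. "Sales.dbo.Payment.CardNumber" (hypothetical)
    category: str    # classification assigned in the catalog
    encrypted: bool  # whether the data is currently encrypted

def policy_violations(entries):
    """Return (location, reason) pairs for entries that the policy says must be
    encrypted but are not, or whose category the policy does not cover."""
    problems = []
    for entry in entries:
        rule = ENCRYPTION_POLICY.get(entry.category)
        if rule is None:
            problems.append(
                (entry.location, f"category '{entry.category}' is not covered by the policy"))
        elif rule["encrypt"] and not entry.encrypted:
            problems.append((entry.location, f"must be encrypted: {rule['standard']}"))
    return problems

entries = [
    CatalogEntry("Sales.dbo.Payment.CardNumber", "payment_card", encrypted=False),
    CatalogEntry("Sales.dbo.Product.Name", "public", encrypted=False),
    CatalogEntry("HR.dbo.Absence.Reason", "health", encrypted=True),
    CatalogEntry("HR.dbo.Staff.Fingerprint", "biometric", encrypted=True),
]
for location, reason in policy_violations(entries):
    print(location, "->", reason)
```

Reporting categories that the policy does not mention, as well as unencrypted data, keeps the policy honest about which categories it actually covers.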

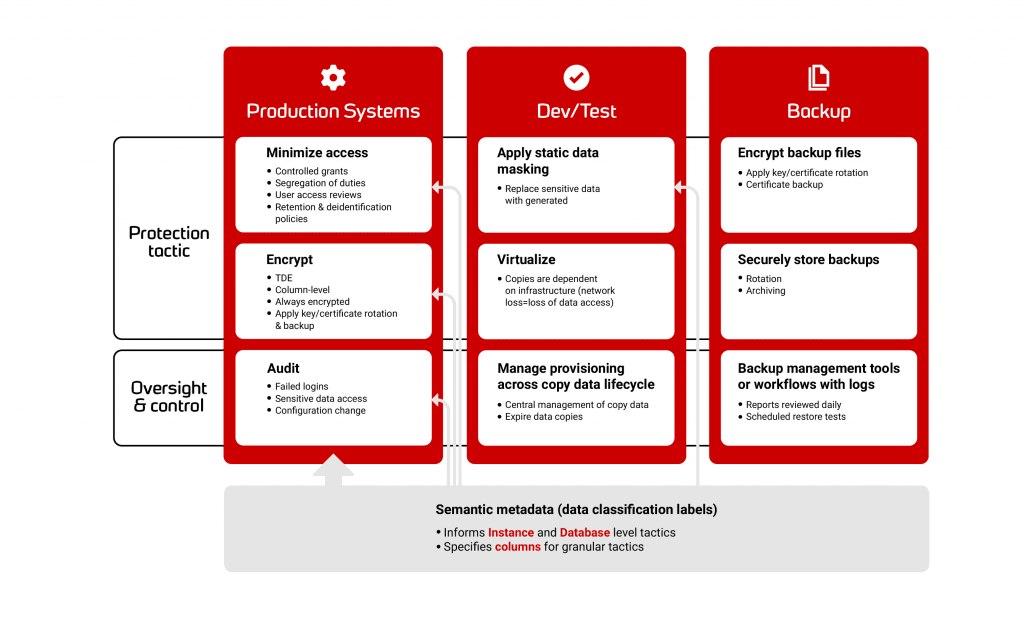

A data catalog will help you to create your encryption policy, and will alert you to any data that the policy says should be encrypted but is not yet. It will also make clear which data elements must be masked, or pseudonymized, before copies of that data can be reused within the organization for development, testing, analysis or training.
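One common way to pseudonymize identifiers in a copy of the data is to replace them with a keyed hash. The Python sketch below uses HMAC-SHA256 from the standard library; the function names, the sample rows and the freshly generated key are illustrative assumptions. Because the same value and key always produce the same token, joins between pseudonymized tables still work, but the key must be stored separately from the copies, and pseudonymized data still counts as personal data under the GDPR.

```python
import hashlib
import hmac
import secrets

def pseudonymize(value: str, key: bytes) -> str:
    """Replace a value with its HMAC-SHA256 token (64 hex characters).
    Without the key, nobody can recompute tokens from guessed values."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_rows(rows, columns, key):
    """Return copies of the rows with the named columns pseudonymized;
    None values are left as they are, and the input rows are not modified."""
    masked = []
    for row in rows:
        copy = dict(row)
        for column in columns:
            if copy.get(column) is not None:
                copy[column] = pseudonymize(str(copy[column]), key)
        masked.append(copy)
    return masked

# For illustration only: in practice the key would come from a secrets store.
key = secrets.token_bytes(32)
rows = [
    {"email": "ann@example.com", "amount": 25},
    {"email": "ann@example.com", "amount": 40},
    {"email": None, "amount": 10},
]
for row in mask_rows(rows, ["email"], key):
    print(row)
```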

This is only the start, because breaches usually happen outside the database. You therefore need to follow the data lineage beyond the database as well. SQL Server databases are unlikely to be the only places where data is stored, and there will be many places where data security is at risk: spreadsheets, PDFs, Word files, NoSQL databases, cache stores, backups, ETL pipelines, paper copies and reporting systems can all be the source of a breach. However, the SQL Server databases are the best place to start unpicking the threads and investigating the way that the organization handles information such as “customer purchases”.

Once you’ve understood the nature of the data within the SQL Server databases, you can work ‘upstream’ to determine the route by which data enters, and ‘downstream’ to check what happens when it leaves. This will help to ensure that encryption takes place throughout the organisation and that access is always properly controlled.
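Working upstream and downstream amounts to walking a graph of data flows in both directions. The Python sketch below assumes that the flows discovered so far are recorded as (source, target) pairs and uses a breadth-first search; the node names are invented for illustration.

```python
from collections import deque

# Hypothetical data flows: (source, target) means data moves from source to target.
FLOWS = [
    ("web_signup_form", "Sales.dbo.Customer"),
    ("crm_import_job", "Sales.dbo.Customer"),
    ("Sales.dbo.Customer", "etl_nightly"),
    ("etl_nightly", "warehouse.dim_customer"),
    ("warehouse.dim_customer", "marketing_dashboard"),
]

def reachable(start, flows, direction="downstream"):
    """Breadth-first search from start. 'downstream' follows flows from source
    to target; 'upstream' follows them backwards, from target to source."""
    if direction not in ("downstream", "upstream"):
        raise ValueError("direction must be 'downstream' or 'upstream'")
    graph = {}
    for source, target in flows:
        a, b = (source, target) if direction == "downstream" else (target, source)
        graph.setdefault(a, []).append(b)
    seen, queue = set(), deque([start])
    while queue:
        for nxt in graph.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

print("upstream:", reachable("Sales.dbo.Customer", FLOWS, "upstream"))
print("downstream:", reachable("Sales.dbo.Customer", FLOWS, "downstream"))
```

Every node reached downstream is a place where the encryption and access-control requirements attached to the source data also need checking.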

Data privacy by design

Encrypting high-risk data is necessary to ensure that your data handling complies with the data protection principles and safeguards individual rights, but so are other technologies, practices and measures.

These measures come under the heading of ‘data protection by design and by default’, or ‘data privacy by design’. Appropriate access control is one example; auditing access to high-risk data in a way that identifies the individual user is another. Essentially, you must integrate data protection into your processing activities and business practices, from the design stage right through the data lifecycle. All these activities require a common understanding of the data held by the organization, the risks attached to it, and the access rights needed by the roles and teams that use it.
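As a small illustration of access control combined with auditing that identifies the individual user, the Python sketch below checks a request against the data classifications a role may read, and records every attempt, allowed or not, with the user's name. The roles, the classifications and the use of Python's `logging` module as the audit trail are assumptions made for the example; a production system would normally rely on the database's own permissions and audit features.

```python
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO, format="%(name)s %(message)s")
audit_log = logging.getLogger("audit")
audit_log.setLevel(logging.INFO)

# Hypothetical mapping from roles to the data classifications they may read.
ROLE_PERMISSIONS = {
    "support_agent": {"contact"},
    "payments_team": {"contact", "payment_card"},
}

def can_read(user: str, role: str, classification: str) -> bool:
    """Allow access only if the role may read this classification, and write
    an audit record naming the individual user whether or not it is allowed."""
    allowed = classification in ROLE_PERMISSIONS.get(role, set())
    audit_log.info(
        "time=%s user=%s role=%s classification=%s allowed=%s",
        datetime.now(timezone.utc).isoformat(), user, role, classification, allowed,
    )
    return allowed

can_read("alice", "payments_team", "payment_card")  # allowed, and logged
can_read("bob", "support_agent", "payment_card")    # refused, and logged
```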

Is this yet another burden on IT?

What is the relevance of all this for the DBA, or database professional? There are already well-understood obligations on operations staff, such as disaster recovery and service-level maintenance. However, the maintenance of data models and the cataloguing of data are just as important, and these tasks can only be done by those who have the appropriate security access levels.

Only when armed with a low-level catalog can compliance teams and security experts understand the flow of data through the organisation. Using the evidence you provide, they can then produce, if necessary, and maintain a high-level model of the lifecycle of the organisation’s data, from acquisition to disposal.

They can put in place workflows and, where possible, automation to ensure that the agreed protection measures are implemented and documented. When assessing whether a software change might require further scrutiny, such as a Data Protection Impact Assessment, a catalog with an API allows the team to perform that check as part of a CI process.
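No particular catalog API is assumed here, so the Python sketch below stands in for one with a plain dictionary; `CATALOG`, `needs_review` and `main` are hypothetical names. Given the fully qualified columns touched by a change (how a CI pipeline extracts them from migration scripts is left open), it flags any that the catalog classifies as sensitive, and also any that it does not know about, since uncatalogued data is exactly what needs a closer look.

```python
import sys

# Stand-in for a catalog API: fully qualified column -> classification (hypothetical).
CATALOG = {
    "Sales.dbo.Customer.Email": "personal",
    "Sales.dbo.Customer.DateOfBirth": "personal",
    "Sales.dbo.Payment.CardNumber": "payment_card",
    "Sales.dbo.Product.Name": "public",
}
SENSITIVE = {"personal", "payment_card", "health"}

def needs_review(changed_columns):
    """Return (column, reason) for changed columns that are sensitive or uncatalogued."""
    flagged = []
    for column in changed_columns:
        classification = CATALOG.get(column)
        if classification is None:
            flagged.append((column, "uncatalogued"))
        elif classification in SENSITIVE:
            flagged.append((column, classification))
    return flagged

def main(changed_columns) -> int:
    """Report the columns needing review and return a CI-friendly exit code."""
    flagged = needs_review(changed_columns)
    for column, reason in flagged:
        print(f"DPIA check needed: {column} ({reason})", file=sys.stderr)
    return 1 if flagged else 0
```

A pipeline step could end with `sys.exit(main(changed_columns))`, so that the build fails until someone has decided whether a DPIA is needed.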

The low-level hunt for data

The business will understand data in terms of customers, invoices, orders, payments and so on. Before you can liaise with other parts of the business about data curation you must be able to translate between business data concepts and the technology view of data.

It is at the low level that data must first be understood. Whether it is held in a spreadsheet, on typewritten paper, in a relational database or in a NoSQL cloud-based service, it is the data itself that provides the clues to the business-level view of data processes and usage within an organization. If, for example, the organization decides that all personal data must be encrypted throughout its lifecycle, it must know all the places where each component part of that personal data is held.

A column-level analysis of tables, views or functions, or of the key fields in XML or JSON markup, provides the lowest level of analysis. If data had a smell, it would be of columns and parameters. If a data store contains numbers that are the length of a card number and consistently pass the Luhn check (the mod-10 checksum used in card numbers), then it is likely to contain credit card information. If it has a column labelled ‘Credit card’, then you can feel lucky. If a user-defined data type is used for the column or parameter, cataloguing it is likely to be easier: a user-defined type called CreditCardNo or CVC is a strong hint about the nature of the data.
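The checks described above can be sketched in Python as follows. `luhn_valid` implements the standard Luhn (mod 10) checksum; the name and type patterns, the 13 to 19 digit length range and the 90% threshold are assumptions chosen for illustration. The threshold matters because roughly one random digit string in ten passes the Luhn check by chance, so a single passing value proves little.

```python
import re

# Illustrative patterns for names that hint at card data; a real catalog would use more.
CARD_HINTS = re.compile(r"credit.?card|card.?(no|num)|cvc|cvv", re.IGNORECASE)

def luhn_valid(number: str) -> bool:
    """Luhn (mod 10) check: from the rightmost digit, double every second digit,
    subtract 9 from any doubled value over 9, and require the sum to end in 0."""
    digits = re.sub(r"[ -]", "", number)
    if not re.fullmatch(r"[0-9]{13,19}", digits):
        return False
    total = 0
    for position, char in enumerate(reversed(digits)):
        value = int(char)
        if position % 2 == 1:
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0

def looks_like_card_data(name: str, sample: list, type_name: str = "",
                         threshold: float = 0.9) -> bool:
    """Flag a column or parameter if its name or user-defined type hints at card
    data, or if at least `threshold` of its non-empty sampled values pass Luhn."""
    if CARD_HINTS.search(name) or CARD_HINTS.search(type_name):
        return True
    values = [v for v in sample if v]
    if not values:
        return False
    return sum(luhn_valid(v) for v in values) / len(values) >= threshold

print(looks_like_card_data("Reference", ["4111 1111 1111 1111", "5500000000000004"]))
```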

All the different classes of sensitive data leave their own mark. Once you know what is in the tables, and have classified the different types of data, you can work out how the data is loaded into the database and where it comes from. You also generally know where it goes, because you know what columns in what tables are accessed in views and table-valued functions. From the soft and hard dependencies (references from code and key relationships between tables), you know all the other tables that together contribute components of the dataset. For example, a customer, as understood by the business, will be represented by many related tables.
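Following the hard and soft dependencies back to a business entity can be sketched as a traversal over (dependent, referenced) pairs, whether those pairs come from foreign keys or from references in views and functions. In the Python sketch below, the table and view names are hypothetical, and collecting only the objects that depend on the root table is a simplifying assumption: it keeps `dbo.Product`, which an order line merely points to, out of the customer's dataset.

```python
from collections import defaultdict

# Hypothetical (dependent, referenced) pairs: foreign keys (hard dependencies)
# and references from views or functions (soft dependencies).
REFERENCES = [
    ("dbo.CustomerAddress", "dbo.Customer"),
    ("dbo.CustomerPhone", "dbo.Customer"),
    ("dbo.Order", "dbo.Customer"),
    ("dbo.OrderLine", "dbo.Order"),
    ("dbo.OrderLine", "dbo.Product"),
    ("dbo.vCustomerSummary", "dbo.Customer"),
]

def dependents(root, references):
    """Return root plus every object that references it, directly or transitively."""
    referrers = defaultdict(set)
    for dependent, referenced in references:
        referrers[referenced].add(dependent)
    found, stack = {root}, [root]
    while stack:
        for obj in referrers[stack.pop()]:
            if obj not in found:
                found.add(obj)
                stack.append(obj)
    return found

print(sorted(dependents("dbo.Customer", REFERENCES)))
```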

Gradually, a picture of the entire data flow through the databases in your charge emerges from the data. Once you know some or all of the sources of the data, and where it goes (the downstream services), you have identified other components in the data lineage. Piece by piece, you can identify and investigate all the parts of the lineage of the data. At each stage of its progress, you will be able to identify the data’s ‘ownership’ (the activity primarily responsible for it), the protection measures it requires, the transformations and aggregations performed on it, and the activities within the organisation that use it.
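One way to record what is learned at each stage is a small structure holding the attributes listed above. The Python dataclass below is an illustrative shape rather than any standard lineage model; the field names, the stage names and the set of required protection measures are assumptions. The `gaps` helper reports stages that have no owner or that lack a required measure.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class LineageStage:
    name: str
    owner: Optional[str]  # the activity primarily responsible for the data here
    protection: set = field(default_factory=set)  # e.g. {"encrypted", "access-controlled"}
    transformations: list = field(default_factory=list)  # transformations and aggregations
    used_by: list = field(default_factory=list)  # activities that use the data

def gaps(stages, required=frozenset({"encrypted", "access-controlled"})):
    """Return (stage, problem) pairs for stages with no owner or missing required measures."""
    report = []
    for stage in stages:
        if stage.owner is None:
            report.append((stage.name, "no owner"))
        missing = set(required) - stage.protection
        if missing:
            report.append((stage.name, "missing: " + ", ".join(sorted(missing))))
    return report

stages = [
    LineageStage("Sales.dbo.Customer", "Sales", {"encrypted", "access-controlled"}),
    LineageStage("etl_nightly", None, {"access-controlled"}, ["join on CustomerID"]),
    LineageStage("marketing_dashboard", "Marketing", used_by=["campaign planning"]),
]
for name, problem in gaps(stages):
    print(name, "->", problem)
```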

Delivering the results

Once you have a model of the data that passes through all the IT services, your part in the process of identifying personal information can be handed on to the specialist teams in IT who are responsible for data governance, compliance and data security. These might include information security teams, application developers, business analysts and IT strategists. Just as important are the non-technical people who are interested in what data is stored within the organization and how it is protected.

Your model, initiated from the data catalog, will dictate how your policy on encryption and access control needs to be implemented, or at least checked. It will also answer many of their questions about the data behind the applications they are familiar with: what happens to it, who looks after it, who is able to access it, and where it came from.

Can the whole matter of compliance in an organization be devolved to IT operations? No. The biggest problem is that any sort of carelessness within the organization can result in a breach. Although breaches of personal data from websites get the most publicity, almost all the successful prosecutions since the GDPR passed into law have been caused by stupidity, carelessness or malice within the organization: an insecure laptop left on a train, confidential files kept in a shared folder on the network, politically motivated leaks, or even filing cabinets abandoned in insecure storage with files still in them.

Data enters the organisation by several routes and goes through many stages, some of which are outside the control of IT. This means that compliance is an issue for the whole organisation, and IT can provide only part of the required information. The IT Operations team has the advantage of being able to construct, from the database systems it hosts, a picture of the various datasets held within the organisation. However, if the organisation contracts out part or all of its data processing to a third party, or hosts its systems in cloud services, what IT Operations can achieve may be limited to data discovery and categorization.

Conclusions

There is no technical novelty in the art of processing personal or sensitive data responsibly. Documenting the organisation’s data model isn’t the most exciting DevOps process, but without it the organization lacks the information it needs to judge whether it is a responsible custodian of data.

This sort of work takes effort, and it is sometimes difficult even to measure its progress objectively. However, it is now essential and, with automation, it need not slow down the delivery of new functionality.

Society at large has caught up with the uses and abuses of data that are made possible by the introduction of information technology. It is increasingly insistent that organisations that hold data should be able to police its use and to enforce standards for access control, security and the ethical use of personal data. Additionally, organisations that hold data must be able to explain how it is used when data breaches happen. To make this possible, it is essential to build a model of the data and its lineage, and a data catalog is the first step in this process.