Practical steps for end-to-end data protection

If you plan to make production data available for development and test purposes, you'll need to understand which columns contain personal or sensitive data, create a data catalog to record those decisions, devise and implement a data masking strategy, and then provision the sanitized database copies. Richard Macaskill shows how to automate as much of this process as possible.

There have been plenty of opinion pieces and vendor guides about the need for DBAs and data teams to take data protection seriously and integrate good practice into their workflows. I’ve been guilty of a few myself. There are also some great technical guides to using data protection tools.

What we don’t see much of are the implementation details, between the high-level ‘why should I’ and the configuration of specific tools.

This article starts from a reasonably realistic ‘assessment of risk’ and follows it through to an implementation of a data protection strategy for databases that need to be delivered to development and test servers. It uses a combination of Redgate and Microsoft platform tools.

To follow along, you will need:

- Azure Data Studio

- A non-Production SQL Server instance

- Redgate SQL Data Catalog

- Redgate SQL Provision

- A copy of the StackOverflow database, publicly available thanks to Brent Ozar

I’ve also posted some resources to GitHub; the SQL Notebook file I used is here, for example.

Discovering and understanding your data

I’ve been given the task of protecting the database for our web application, StackOverflow. Specifically, my manager wants me to make sure we aren’t risking GDPR non-compliance by using personal data beyond the purpose for which consent was obtained. But here’s the rub; GDPR isn’t necessarily about confidentiality. It’s about taking a risk-based approach to the handling of personal data.

So my first task is to identify whether the contents of StackOverflow qualify as personal data.

Let’s check with the UK regulator for the GDPR for a definition we can use.

Personal data is information that relates to an identified or identifiable individual. What identifies an individual could be as simple as a name or a number or could include other identifiers such as an IP address or a cookie identifier, or other factors.

We could debate the nuances, and so much depends on context, but this is a practical guide so let’s get stuck in.

Here are the steps:

- Risk Assessment: Review the StackOverflow schema and make some decisions about which columns contain personal data

- Data classification: Create some classification metadata in a data catalog to record those decisions

- Data masking: Use that metadata to generate a masking set for Data Masker for SQL Server

- Provision development servers with masked database copies:

  - Create a database image in SQL Provision for future cloning

  - Deliver sanitized clones of the original database for development, test and analysis tasks

Step 1: Risk assessment – review the schema

The first thing I’m going to do is connect to the database, and start making notes on the schema and content. I don’t want to lose my notes (and I love combining executable code with notes) so I’m creating a notebook in Azure Data Studio, and saving it in GitHub.

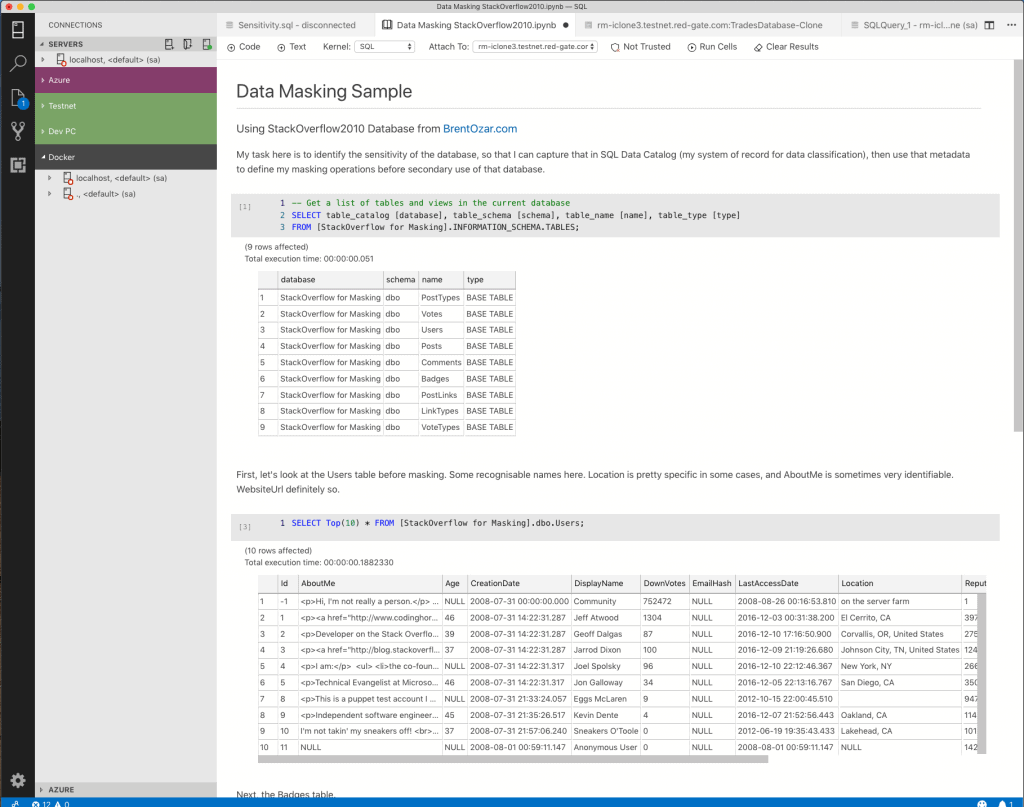

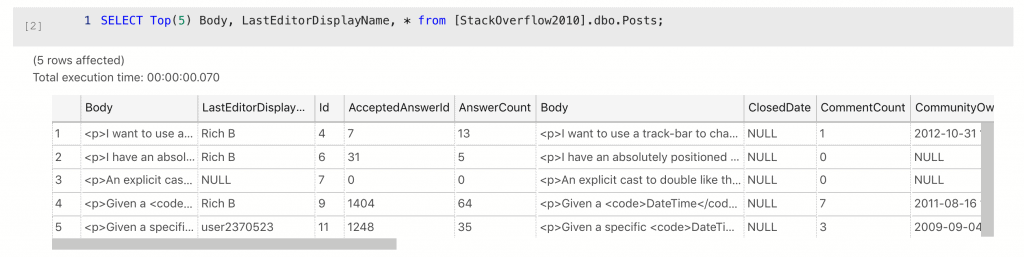

Working through the schema, table-by-table, I’m compiling a list of columns which may contain personal data. Thankfully, StackOverflow2010 is pretty simple, and I end up with a list of seven columns. Here’s roughly how my review looks in Azure Data Studio (I’ve recreated it below; you can see the actual SQL Notebook on my GitHub here).

Data Masking Sample

Using StackOverflow2010 Database from BrentOzar.com

My task here is to identify the sensitivity of the database, so that I can capture that in SQL Data Catalog (my system of record for data classification), then use that metadata to define my masking operations before secondary use of that database.

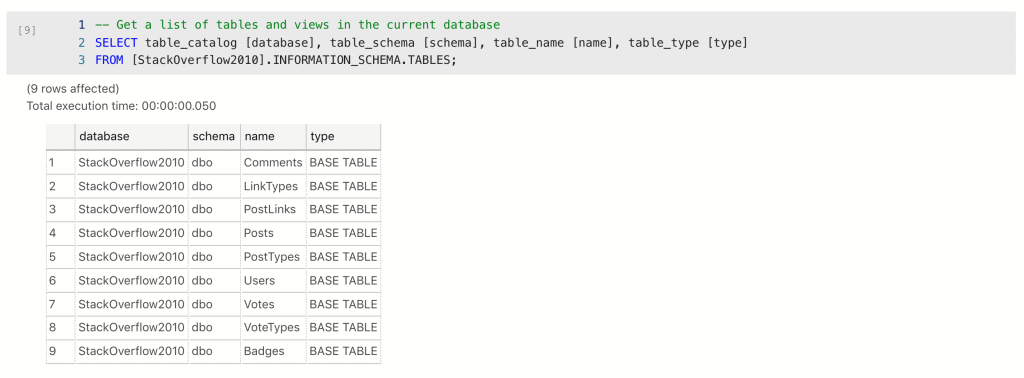

There are 9 tables to review.

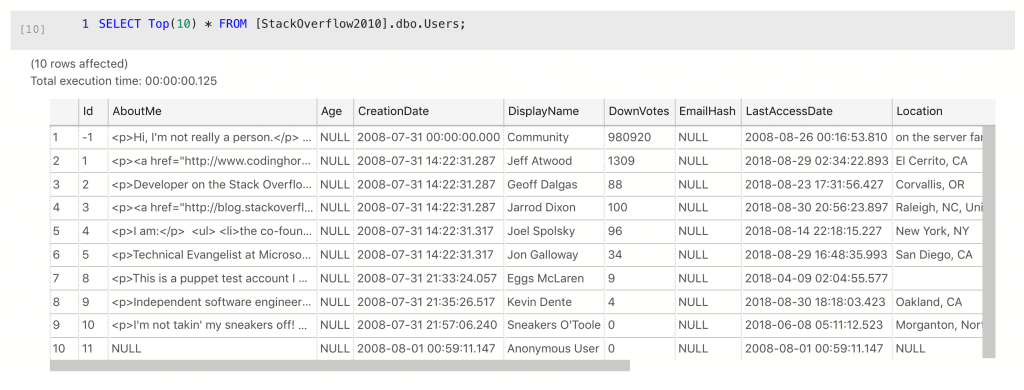

First, let’s look at the Users table before considering protection.

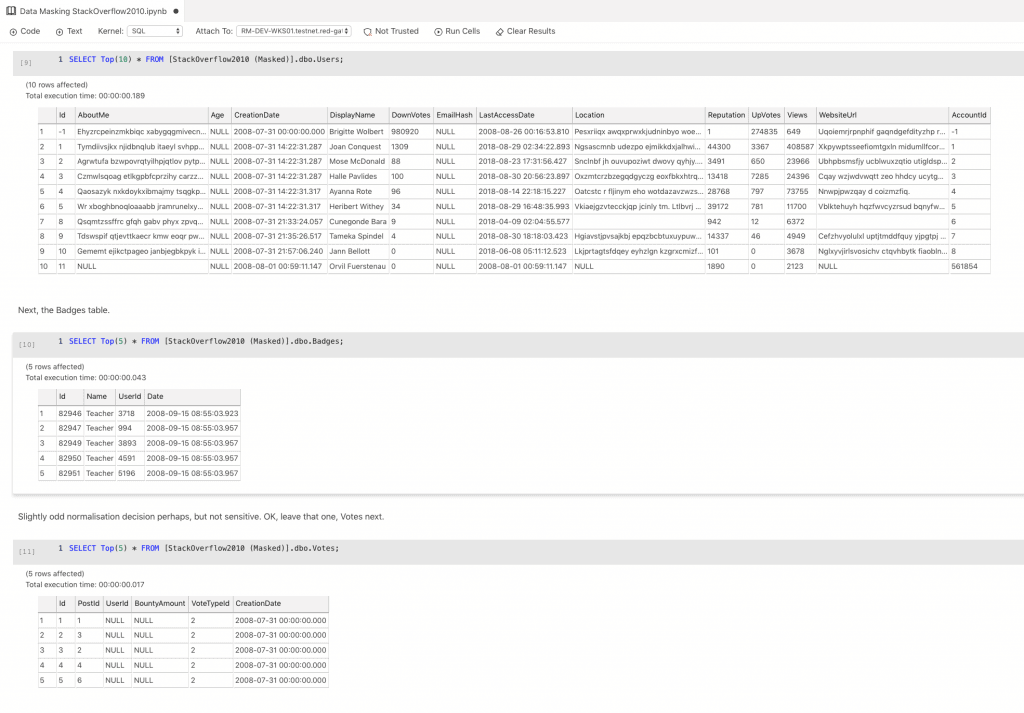

Some recognizable names here. Location is pretty specific in some cases, and AboutMe is sometimes very identifiable. WebsiteUrl definitely so. EmailHash is curious; it’s null in all cases. What if it started to be used though? This is something I would flag up for refactoring; if it’s not used, let’s not leave it in the schema. Sometimes there are common-sense risk reduction measures that we can use this opportunity to implement.

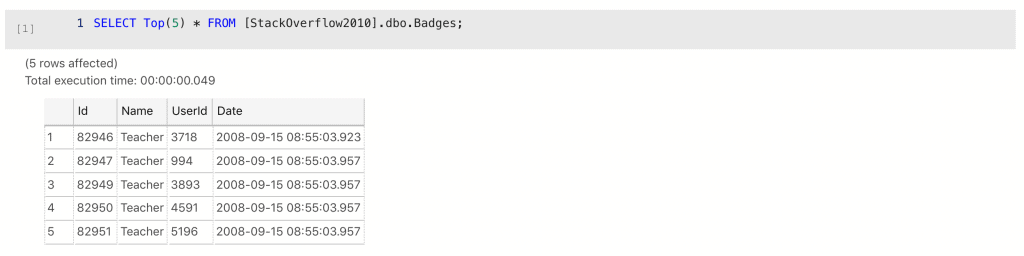

Next, the Badges table.

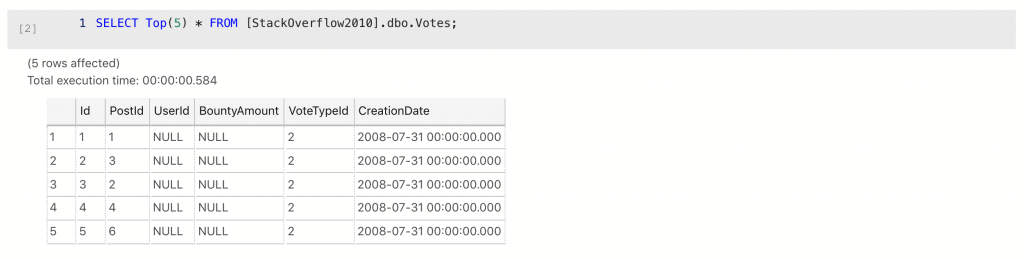

Slightly odd normalization decision perhaps, but not sensitive, or personal. OK, leave that one, Votes next.

Can’t see anything there. If we’ve masked the Users table well, what they’ve voted for won’t be very interesting or likely to identify them.

Or will it? If I know the context, I might be able to do re-identification. Jon Skeet is famously prolific for example. One of those slightly tricky calls that requires business context and a risk assessment. Who is going to see the masked database? What would happen if they re-identified using their knowledge?

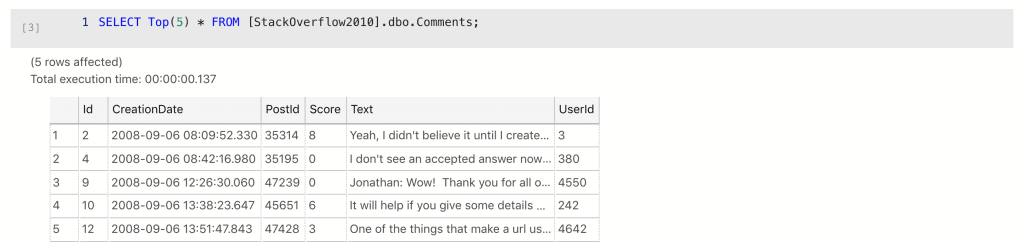

Going to need to handle that Text column I think. OK, the Posts table next.

LastEditorDisplayName is going on the list.

How about Body? This is a bit trickier; the body is in most cases a public post on a website about a technical matter. How could that be confidential? There are references to other people, that looks like someone’s personal blog URL there as well. This is identifiable data. It might be low in risk and confidentiality, but that’s an assessment for later.

Fortunately for me, the StackOverflow2010 database is pretty simple; I think I’ve got my initial list of columns that could be considered personal data, and which I therefore want to mask before distributing within my organisation for secondary use (still under a reasonable level of control, of course; I’m reducing risk, not eliminating it).
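A manual review like this can be pre-screened with a simple name-based heuristic. The following is a minimal Python sketch, not part of the original notebook: the keyword list and the sample schema rows are assumptions for illustration, and a name match is only a prompt for human judgment, not a classification decision.

```python
# Hypothetical keyword list: substrings that often hint at personal data.
PERSONAL_DATA_HINTS = ("name", "email", "location", "about", "url", "body", "text")

# Illustrative (table, column) pairs; in practice these would come from
# INFORMATION_SCHEMA.COLUMNS or a catalog export.
SAMPLE_SCHEMA = [
    ("Users", "Id"),
    ("Users", "Location"),
    ("Users", "AboutMe"),
    ("Users", "WebsiteUrl"),
    ("Users", "EmailHash"),
    ("Posts", "Body"),
    ("Posts", "LastEditorDisplayName"),
    ("Posts", "Score"),
    ("Votes", "VoteTypeId"),
]


def flag_candidates(schema, hints=PERSONAL_DATA_HINTS):
    """Return the (table, column) pairs whose column name contains a hint."""
    flagged = []
    for table, column in schema:
        lowered = column.lower()
        if any(hint in lowered for hint in hints):
            flagged.append((table, column))
    return flagged


if __name__ == "__main__":
    for table, column in flag_candidates(SAMPLE_SCHEMA):
        print(f"{table}.{column}")
```

A heuristic like this will miss columns with unhelpful names and flag harmless ones, so it narrows the review rather than replacing it.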

I don’t want this list to be a fire-and-forget exercise, so we’re going to have to capture much more information about this database, and maintain it. After all, this is going to underpin our new technical policy, so I’d much rather have a system of record so that I can work on this over time, share it within my organisation and with governance functions or auditors, and use it to automate and check on some behaviors.

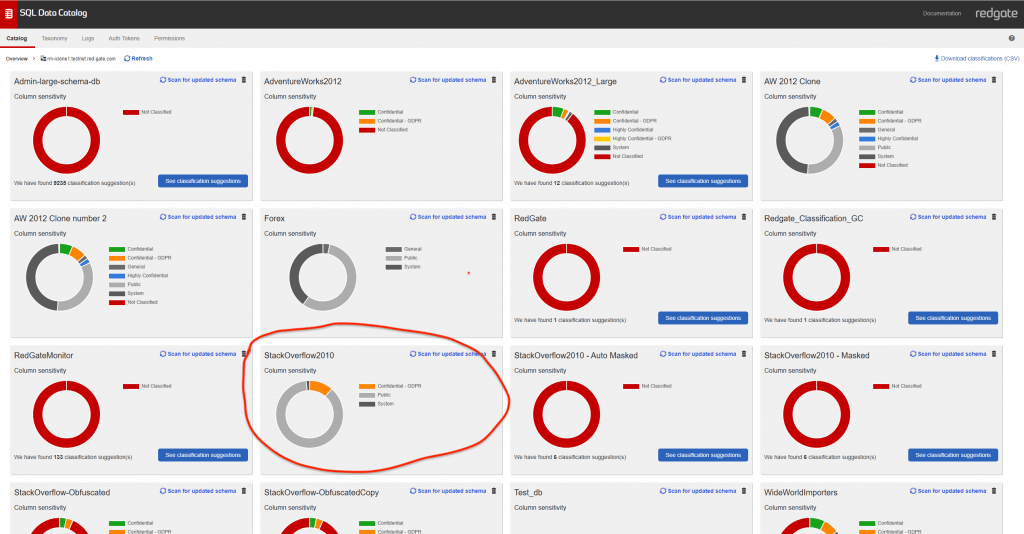

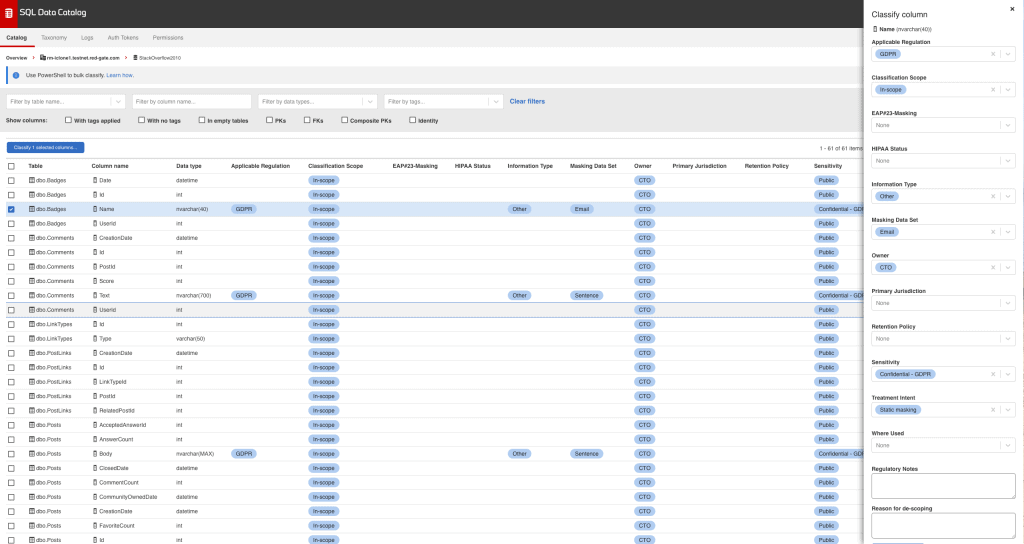

Step 2: Data classification – Create the data catalog

Next, we need to create the data classification metadata, so enter SQL Data Catalog (at the time of writing, this is a pre-release product in private preview). Once I’ve registered my instance, I can see my target database as a card in the application.

Click on the card and I can see a column view, from which I can search and filter my columns, and then select them by characteristics, such as whether these columns are keys, whether the tables that these columns are on actually contain data (sometimes empty tables are an indicator of an unused module or deprecated feature), and so on.

As an API-first application, there are also endpoints I can use to classify or access metadata programmatically, and some PowerShell functions to make them easy to work with.

However I’ve done it, let’s assume I’ve now recorded my decisions about my schema in SQL Data Catalog’s metadata store, along with some other tags.

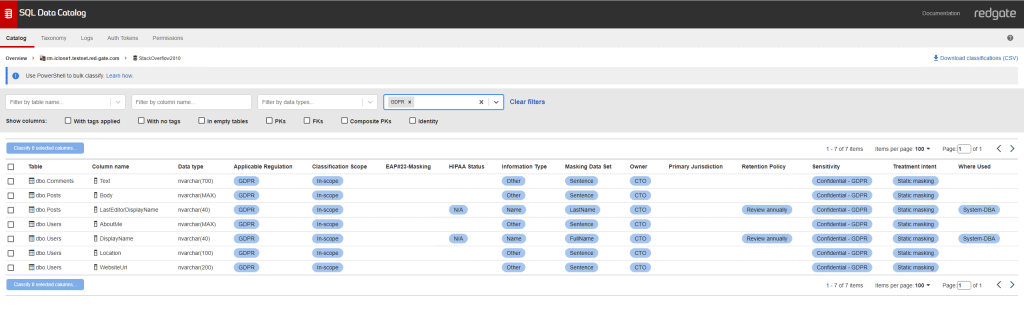

If I filter by ‘GDPR’ in the Tags filter, I see my seven columns which have been selected as candidates for masking.
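To make the idea of classification metadata concrete, here is a minimal Python sketch of catalog entries and a tag filter. It is an in-memory stand-in, not the SQL Data Catalog API; the class, the function and every tag name other than 'GDPR' are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ColumnClassification:
    """One catalog entry: a column plus the tags recorded against it."""
    table: str
    column: str
    tags: set = field(default_factory=set)


def filter_by_tag(entries, tag):
    """Return the entries that carry the given tag."""
    return [entry for entry in entries if tag in entry.tags]


# Illustrative entries only; tag names other than 'GDPR' are assumptions.
catalog = [
    ColumnClassification("Users", "Location", {"GDPR", "Confidential"}),
    ColumnClassification("Users", "AboutMe", {"GDPR", "Confidential"}),
    ColumnClassification("Users", "Reputation", {"Public"}),
    ColumnClassification("Posts", "Body", {"GDPR", "Public"}),
]

if __name__ == "__main__":
    for entry in filter_by_tag(catalog, "GDPR"):
        print(f"{entry.table}.{entry.column}: {sorted(entry.tags)}")
```

Keeping the decision (the tag) separate from the action (the masking rule) is what lets the same metadata drive both automation and audit later on.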

Step 3: Data masking – generate a masking set

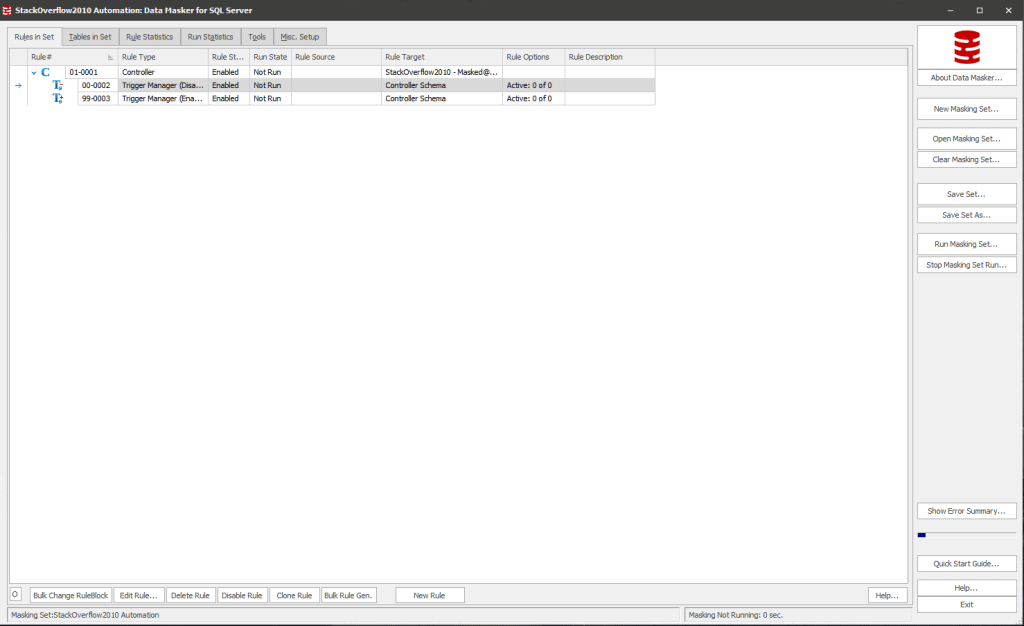

I could simply fire up my masking tool, Data Masker for SQL Server, and start manually creating some masking rules. (An Oracle version is also available.)

However, instead, I want to see how much can be achieved through automation. After all, most databases aren’t as simple as StackOverflow. How will I cope when I have several hundred columns to mask?

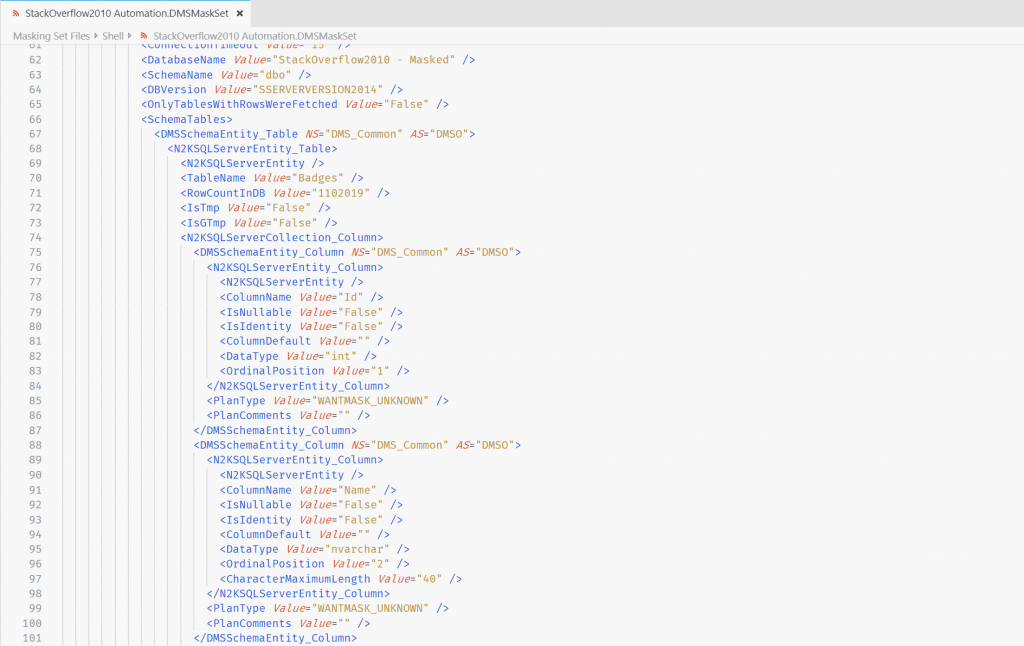

Data Masker works by executing the instructions in a .DMSMaskSet file, which is a bespoke XML-type file describing the operations to be performed, their sequence, conditions, which masking rules will run in parallel, and so on.

When I open a .DMSMaskSet file in the Data Masker application, it looks something like this:

If I open the file in a text viewer, I can see the bespoke XML-like format.

Data masking is a complex subject. In many cases, there are complexities that require advanced features in your masking tool, like handling sensitive keyed values, or ensuring mask consistency across tables or across databases. I’d recommend reading the Redgate white paper on the subject.

Some tasks are simpler, however, like replacing the values in a column with generated values from a masking tool’s supplied dataset. In this scenario, which we call Substitution Rules, I can see that the XML in the masking set file follows a simple format, so I should be able to manipulate the file to add some rules.

And since SQL Data Catalog has a full API, I can access the metadata for my database, and use that to map against some simple substitution rules, then inject them into a .DMSMaskSet file.
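The general shape of that mapping can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the script used here: the element names, attribute names and dataset names are made up, and the output is not the real .DMSMaskSet format.

```python
import xml.etree.ElementTree as ET

# Hypothetical mapping from column name to the replacement dataset to use.
DATASET_BY_COLUMN = {
    "Location": "City names",
    "WebsiteUrl": "Random URLs",
    "AboutMe": "Lorem ipsum text",
}
DEFAULT_DATASET = "Scrambled text"


def build_rules(tagged_columns):
    """Build a rule document with one substitution rule per tagged column.

    tagged_columns is an iterable of (table, column, sensitivity) tuples.
    """
    root = ET.Element("MaskingRules")  # made-up element names throughout
    for table, column, sensitivity in tagged_columns:
        rule = ET.SubElement(root, "SubstitutionRule", table=table, column=column)
        ET.SubElement(rule, "DataSet").text = DATASET_BY_COLUMN.get(column, DEFAULT_DATASET)
        # Carry the catalog tag along so the plan documents why the rule exists.
        ET.SubElement(rule, "Comment").text = f"Sensitivity: {sensitivity}"
    return root


if __name__ == "__main__":
    columns = [
        ("Users", "Location", "Confidential"),
        ("Posts", "LastEditorDisplayName", "Confidential"),
    ]
    print(ET.tostring(build_rules(columns), encoding="unicode"))
```

Falling back to a default dataset means every tagged column gets some rule, so a column without a specific mapping is still masked rather than silently skipped.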

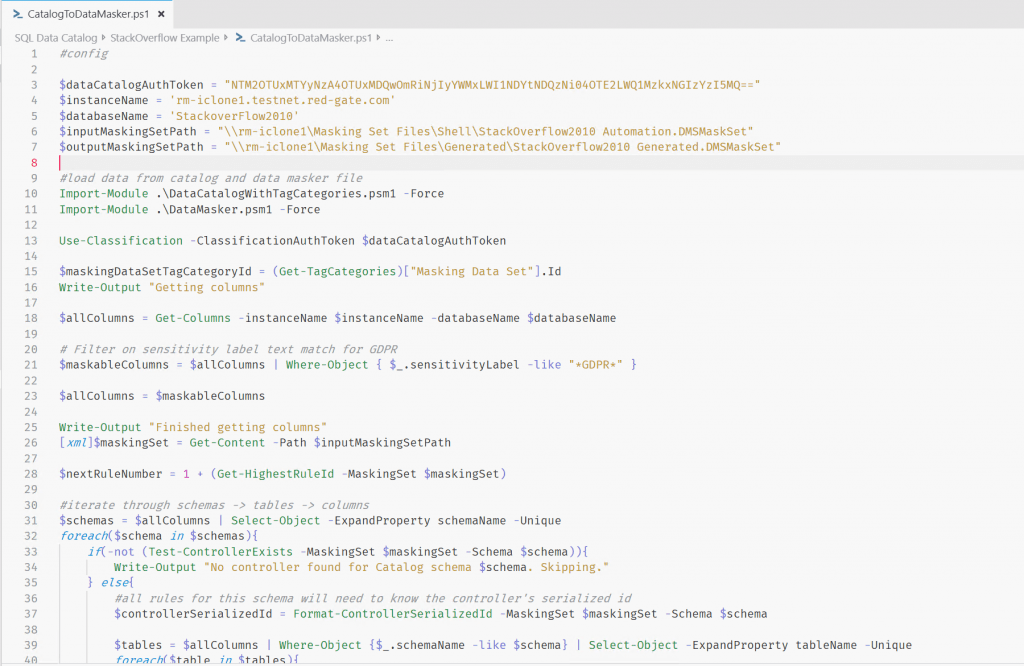

Now this is a bit of ‘special sauce’, and a little bespoke to the task at hand. One of the customers on the SQL Data Catalog private preview wanted a hand with this, so we put together some PowerShell scripts to generate a .DMSMaskSet file that Data Masker can execute.

If there is interest, we may be able to make this more widely available, but for now here is an image showing the general approach.

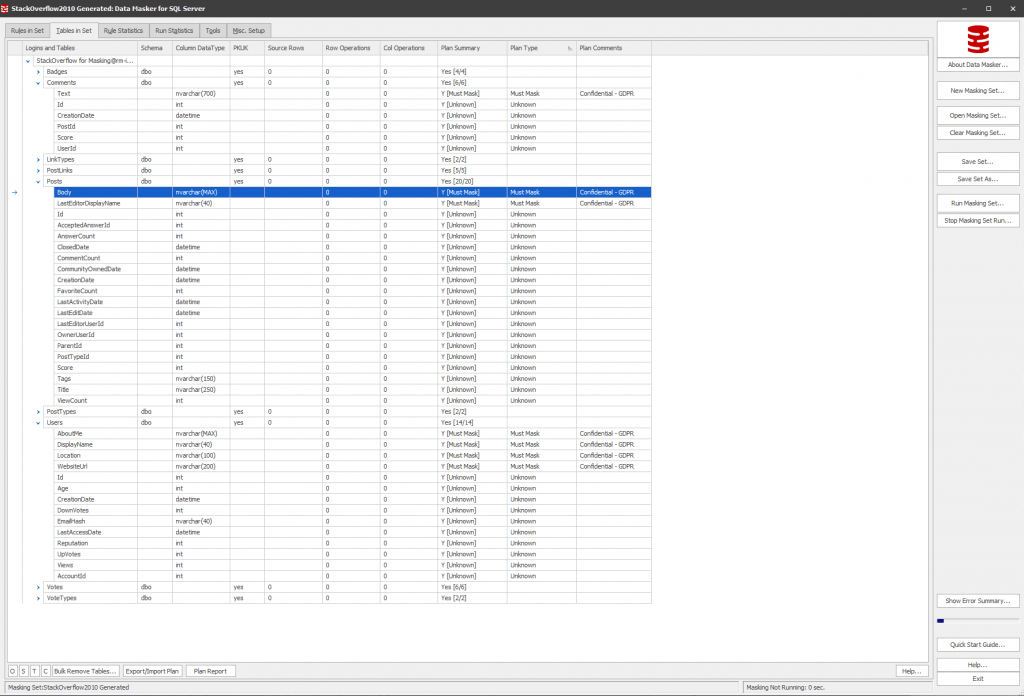

After I execute my PowerShell script, and open the file in the Data Masker application, my ‘Masking Plan’ now looks like this.

Note that the tag from SQL Data Catalog’s ‘Sensitivity’ category has also been injected into the Plan Comments, and whether the column should be masked is recorded. We’ll see later why this is important.

Step 4a: Database provisioning – create a masked database image

Here, we use SQL Provision. Again, I could just jump into the user interface, point it at my original database, reference my .DMSMaskSet file and create an image that I can use to create clones.

However, automation, automation, automation! SQL Provision is also highly operable, with PowerShell cmdlets for pretty much anything you’ll need to do. The script below first runs the code that generates the .DMSMaskSet file, then applies that masking set during SQL Clone image creation.

```powershell
Clear-Host

# Call as a script. TODO - rebuild as function, export as module
Measure-Command -Expression { ./CatalogToDataMasker.ps1 } | Select-Object Minutes, Seconds
Write-Output "Created masking set based on SQL Data Catalog tag values."

# Create SQL Clone image using masking set
# Script to create a new SQL Clone data image and run a Data Masker masking set on it
Connect-SqlClone -ServerUrl 'http://sql-clone.testnet.red-gate.com:14145/'
$SqlServerInstance = Get-SqlCloneSqlServerInstance -MachineName 'rm-iclone1' -InstanceName ''
$ImageDestination = Get-SqlCloneImageLocation -Path '\\rm-iclone1.testnet.red-gate.com\SQL Clone Images'

$MaskingSet = New-SqlCloneMask -Path "\\rm-iclone1.testnet.red-gate.com\Masking Set Files\Generated\StackOverflow2010 Generated.DMSMaskSet"

$ImageOperation = New-SqlCloneImage -Name "StackOverflow2010-$(Get-Date -Format yyyyMMddHHmmss)-Cleansed" `
    -SqlServerInstance $SqlServerInstance `
    -DatabaseName 'StackOverflow2010' `
    -Destination $ImageDestination `
    -Modifications @($MaskingSet)

Measure-Command -Expression { Wait-SqlCloneOperation -Operation $ImageOperation } | Select-Object Minutes, Seconds
Write-Output "Finished call to create SQL Clone image using generated masking set."
```

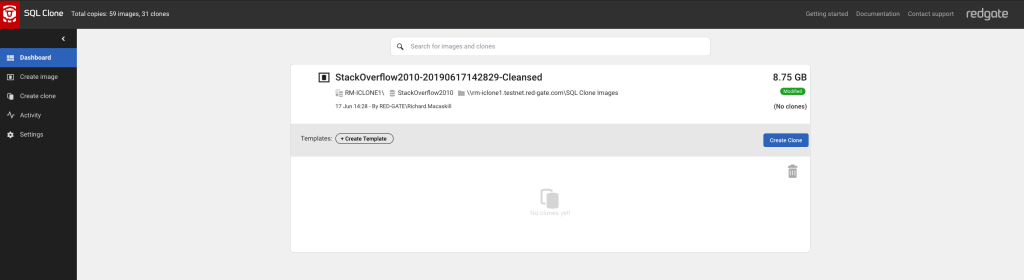

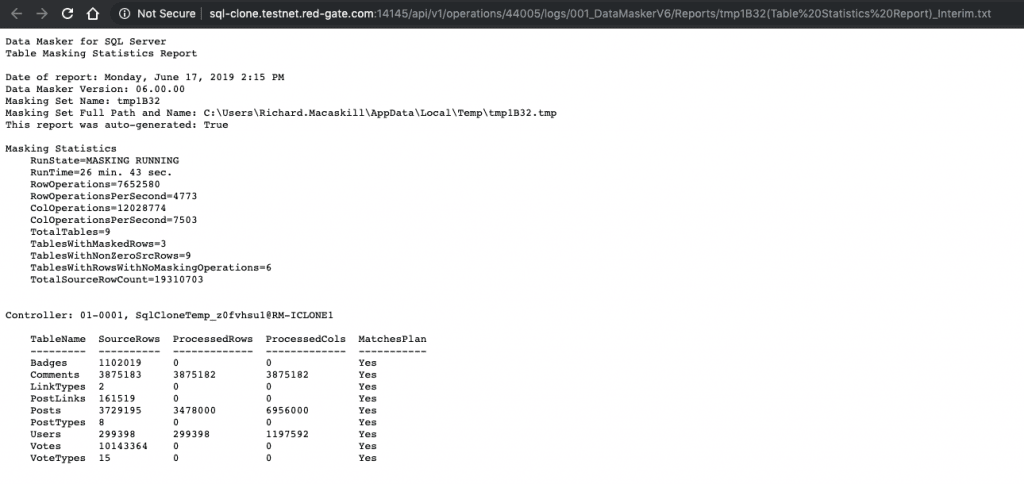

For my 10GB copy of StackOverflow2010, this operation took a little under 30 minutes, on some pretty average hardware.

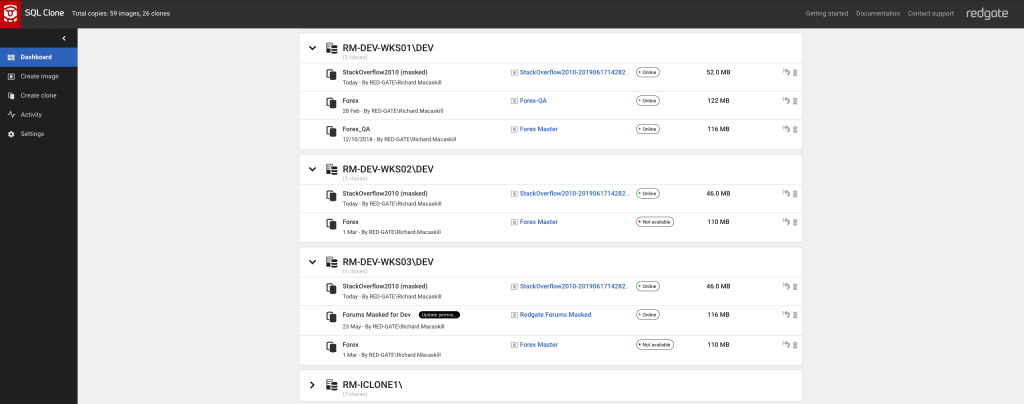

Once it’s completed, I can see my image in the SQL Clone web application. Note the ‘modified’ tag. The image is now available to distribute as Clone databases to workstations or other SQL Server instances on my network, arriving in seconds and taking up only a few megabytes of disk space (thanks to the .vhd virtualization technology SQL Clone uses).

Step 4b: Database provisioning – deliver sanitized clones of the original database

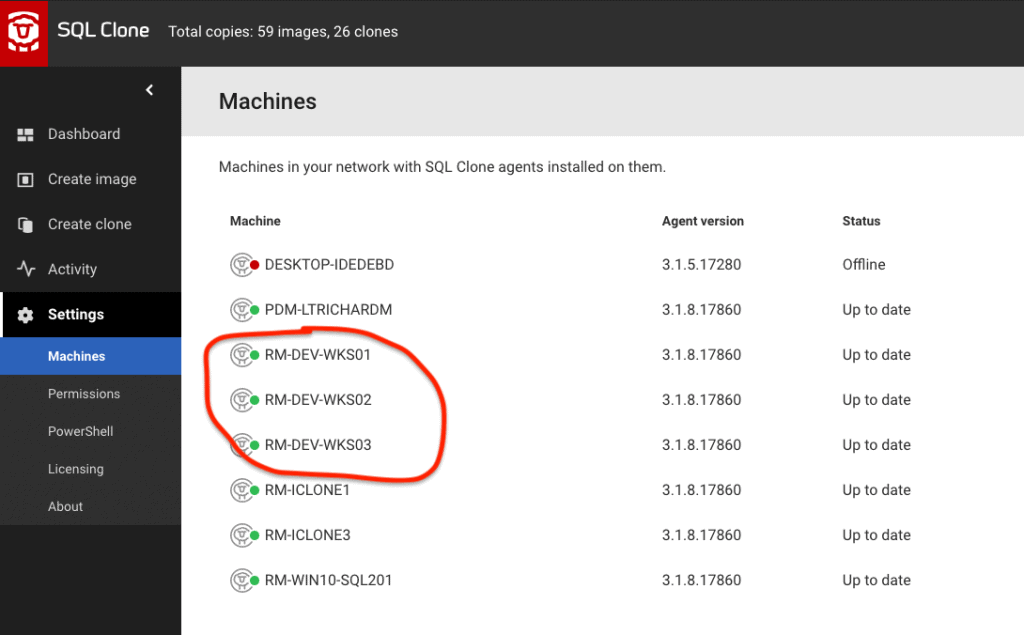

Finally, we must deliver sanitized clones of the original database to our developer workstations. I’ve got three of them to which I’d like to deliver Clone databases.

They all match a ‘WKS‘ pattern, so I can identify them in my script, and deliver a clone of the sanitized StackOverflow image.

```powershell
# Script to create a new SQL Clone database on each of my connected machines matching a name pattern
Clear-Host

$SQLCloneServer = "http://sql-clone.testnet.red-gate.com:14145"
Connect-SqlClone -ServerUrl $SQLCloneServer

$StackOverflowImage = Get-SqlCloneImage -Name 'StackOverflow2010-20190617142829-Cleansed'
$StackOverflowCloneName = 'StackOverflow2010 (masked)'

# I have several SQL Server instances registered on my SQL Clone Server - I want to deliver a copy to all of them
$Destinations = Get-SqlCloneSqlServerInstance |
    Where-Object -FilterScript { $_.Server -like '*WKS*' -and $_.Instance -eq 'Dev' }

# Start a timer
$elapsed = [System.Diagnostics.Stopwatch]::StartNew()
"Started at {0}, creating clone databases for image ""{1}""" -f $(Get-Date), $StackOverflowImage.Name

$Destinations | ForEach-Object {
    # Create the clone on this instance and wait for the operation to finish
    $StackOverflowImage | New-SqlClone -Name $StackOverflowCloneName -Location $_ | Wait-SqlCloneOperation
    "Created clone in instance {0}" -f ($_.Server + '\' + $_.Instance)
}

"Total Elapsed Time: {0}" -f $($elapsed.Elapsed.ToString())
```

That took 20 seconds. I now have my three clone databases available on the developer workstations, each taking up 46 MB of disk space.

Summary

I’ve completed all of the tasks I set myself. I have a repeatable process. But what else do I have?

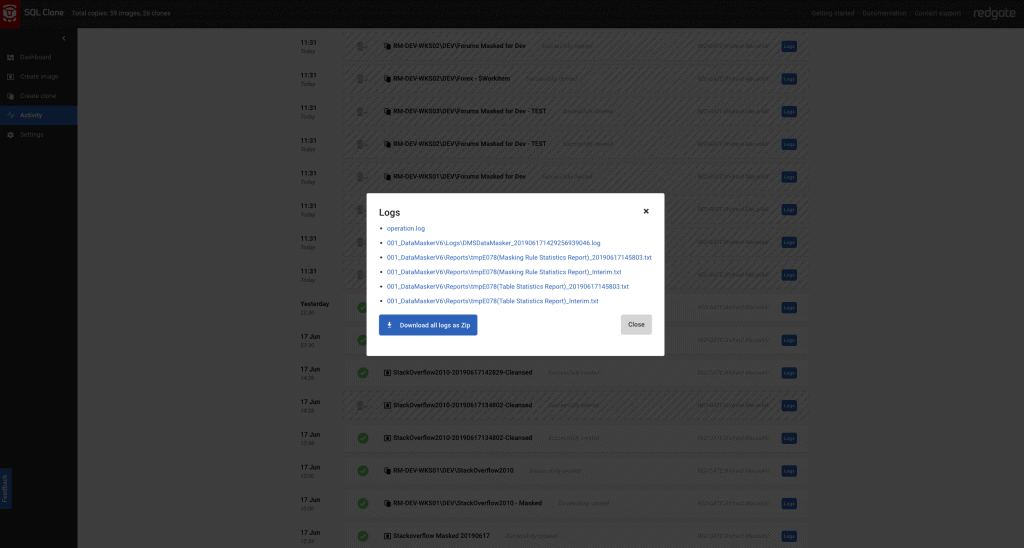

If I go to the Activity tab of the SQL Clone application, I can see that I have a log available, over an API, showing that I did indeed mask what I intended to mask. Logs are also available for the delivery of Clone databases, showing which image they came from, and validating that the source was the correctly protected copy.

We didn’t just perform a task, we created a defensible process for protecting data.

Using this approach would give me the confidence to create a technical policy which will withstand scrutiny.

“Prior to using data for secondary purposes, we identify personal data, then mask it to reduce risk.”

An auditor reading that policy will most likely look for evidence that each part is performed in accordance with the stated policy.

We can anticipate the questions for this policy (or IT Control in auditor parlance):

Whenever data is supplied for secondary use, show me evidence that the following are true:

- Data is identified as personal using a known process

- Subsequent masking operations match that definition

- Supplied data is from that masked, sanitised copy, and no other
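The second of those checks, that masking operations match the classification, comes down to a set comparison. Here is a minimal Python sketch, assuming both the catalog decisions and the masking log have been exported as lists of "Table.Column" strings; the function name and the sample values are hypothetical.

```python
def audit_masking(catalog_columns, masked_columns):
    """Compare what the catalog says should be masked with what was masked.

    Both arguments are iterables of "Table.Column" strings.
    Returns (unmasked, unexpected): columns tagged but not masked, and
    columns masked without a matching catalog decision.
    """
    should_mask = set(catalog_columns)
    was_masked = set(masked_columns)
    unmasked = sorted(should_mask - was_masked)
    unexpected = sorted(was_masked - should_mask)
    return unmasked, unexpected


if __name__ == "__main__":
    # Illustrative values only.
    catalog = ["Users.Location", "Users.AboutMe", "Posts.Body"]
    masked = ["Users.Location", "Posts.Body"]
    unmasked, unexpected = audit_masking(catalog, masked)
    print("Tagged but not masked:", unmasked)
    print("Masked but not tagged:", unexpected)
```

Two empty lists are the evidence an auditor is looking for; anything else points at a gap between the policy and the process.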

With this process, I am confident I can answer those questions to their satisfaction.

One last thing

Another advantage of my approach using SQL Notebooks in Azure Data Studio is that I can simply change the connection string in order to review the effect of my work.

Looks good to me!

SQL Data Catalog is running an Early Access Program and planning to launch later in the year.

SQL Provision is available to buy now.
