Why Database Deployments Sometimes Go Bad

Phil Factor shares some of the common ways database deployments go wrong, and the testing strategies and sanity checks that will stop them happening to you.

Database deployments need testing, including unit, regression, penetration, exploratory and soak/limit testing. Even that isn’t enough. They also need staging with production data volumes, concurrency simulation, and a review of all assumptions. If a deployment can fail silently, it probably will. If a migration script can skip a corner case, it will. Most disasters aren’t caused by wild mistakes, but by quiet oversights.

I’ve seen plenty of ways database deployments can go wrong. The good news? Every one of these failures is preventable if you know why it happened last time, and make sure your process now catches it.

Most often, this means adding a new test, a different type of test, or testing under more realistic conditions, with better data. If I take one lesson from a long database career, it’s that testing is not optional, so you need a development toolset that helps you build a culture of testing into your process.

“When one failed deployment can cost the company thousands, testing is what lets you sleep at night.”

Adam Hafner, Senior Database Engineer, Interstates

Dear Rich Bastard (a cautionary tale)

I often think that some divine power intervenes with database deployments to make them go wrong, just to teach developers humility. They often go wrong in unexpected and bewildering ways. After one deployment in the 1990s, for example, some major customers of a Telecoms company received a letter addressed to ‘Rich Bastard’. A UK-based direct marketing organization undertook a project for this Telecoms company to promote a new ‘gold’ calling card. The campaign involved sending personalized letters to prospective customers, using data extracted from a customer database.

Due to inconsistencies and poor formatting in the name fields of some records, a placeholder salutation was needed for entries with missing or corrupted names. A programmer playfully set this temporary placeholder to “Dear Rich Bastard.” Unfortunately, during the project’s stop-and-start progression, this placeholder was never replaced with an appropriate salutation. As a result, several letters were sent out with the unintended greeting.

While the exact number of affected letters was small, the incident required personal apologies to the recipients. Interestingly, one recipient reportedly framed the letter, and another individual complained about not receiving it, feeling he qualified for the title. The programmer concerned left the profession.

Anticipating the unimaginable

If you just get the message that temporary placeholders need rigorous checking, you will never make a success of deployments. No. As Oscar Wilde wrote “To expect the unexpected shows a thoroughly modern intellect“. There really isn’t any alternative to the Wildean virtue of planning for all eventualities. You must assume that even apparently well-tested deployments can go off the rails and sometimes for reasons that are hard to predict.

One time, a database deployment for a telecoms company seemed plain-sailing, but within hours, the entire production server locked up and had to be rebooted. What happened? Well, the system occasionally ran a scheduled command-line task to import telephone usage data for client billing. It had been tested in development and staging, of course, and yet, that night, the import process suddenly blew through the memory limits on the production server and froze the entire system.

The root cause? An unprecedented spike in phone activity, triggered by the British football team winning an important match. There isn’t a unit or regression test that would have caught this sort of ‘runtime environment’ failure. You also need soak, or limit, testing that exercises the system under real-world workloads and concurrency limits and then pushes beyond them.

We’re not just testing functionality, we’re testing system resilience.

Testing for the unexpected and unreasonable

Practices like rapid or continuous deployments can help pinpoint deployment problems earlier. Deployment safeguards, like feature-switching, can also help limit the damage if a failure does occur. However, none of this means that any part of testing can be skipped.

A common misconception is that automated regression tests are enough, checking that all end-to-end processes produce the correct results for known input datasets. These tests are vital, of course but on their own, they are nowhere near sufficient.

When I designed databases for a large retail bank, my lovingly crafted work was regularly attacked by testers who took great pleasure in breaking it. I could hear their laughter echoing down the corridor when they found a bug. They achieved their high kill-rate by practicing what is usually called exploratory or “tourist” testing: feeding in unexpected inputs to break development processes in ways the developer hadn’t anticipated. There’s also stress testing: applying extreme workloads to see how gracefully performance degrades.

How database deployments go wrong, and how to stop it happening to you

Every experienced database professional has a mental list of war stories: migrations that silently corrupted data, deployments that took down production, optimizations that didn’t scale. This isn’t just storytelling; it’s practical wisdom. Here are some of the ways things can go wrong, and the testing and monitoring strategies and tools that can help prevent them.

A tool like Flyway helps enormously with testing. It can trigger tests automatically on every migration, so they’re never skipped. It also makes it easy to maintain a “test cell” where tests, such as those targeting all supported variants of a database, can be run in parallel. You can read my Database Testing in Flyway Developments series to see what’s possible, and my Better Test Data Management series for advice on managing test data effectively in a migrations-based approach.

Combined with a monitoring tool to catch signs of trouble during testing, you can detect and fix many of the deployment-breaking issues covered in this article before they ever reach production.

1. Data lost in a table redesign

Data loss can occur when redesigning a group of related tables, often because the data migration didn’t preserve every data relationship, or because corner cases were silently dropped or mis-mapped. Rare or unusual data values may be dropped, or incorrectly transformed, without triggering errors. To prevent this, always validate changes in the base tables. Many potential issues can be caught with a full test run, but this must also be repeated in staging, using production-like data. Only then will you catch issues that don’t appear with clean test data.

A tool like Flyway makes it much easier to adopt test-driven development practices that will catch these issues early. It allows you to test your database migration scripts thoroughly to ensure that they don’t break any existing processes and no existing data is lost or corrupted. I’ve shown, for example, how to use explicit transactions to repeatedly test and roll back complex SQL migrations, such as those that require data migration.

Over time, this encourages the team to design applications to be more easily tested and fixed.

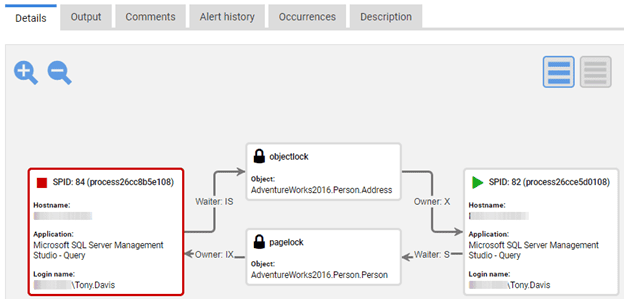

2. Deadly embrace: mutual deadlocks in a new release

Mutual deadlocks can occur when two changes in logic, rolled out together, introduce new locking conflicts under high concurrency. This is a common risk for any intensive OLTP process under heavy load. The interactions may appear safe in single-user or low-concurrency test environments, but under real production load, deadlocks emerge.

It’s far better to avoid deadlocks in production by making it possible to observe locking and blocking patterns during development. Establish a baseline for normal locking behavior and monitor for changes, such as increased lock wait times, as you test new releases. To do this, you’ll need to simulate high-concurrency workloads as part of your test cycle.

Monitoring tools, like Redgate Monitor for SQL Server, Oracle and Postgres, can detect deadlocks and help trace the design flaws or patterns of conflicting updates that cause them. See Diagnosing Deadlocks for some examples.

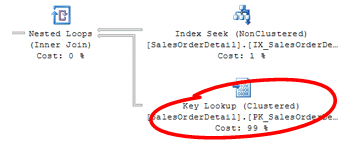

3. The Illusion of a good index

Occasionally, an index that performs beautifully on development data runs like a fly in syrup in production, severely degrading performance. This usually happens when your test data doesn’t match the cardinality and distribution of the production data. Indexes that work well on small, uniform datasets can behave very differently on large, skewed, or unevenly distributed data.

To design indexes that hold up under production conditions, you need realistic, scaled-up test data. But permanently stocking servers with large volumes of data can be a provisioning nightmare. A better option is to use a tool like Redgate Test Data Manager to quickly spin up a short-lived test cell using lightweight database copies (“clones”) based on anonymized production data. I’ve demonstrated how you can use it with Flyway to load, test, and reset any version of the database rapidly, enabling a fast, iterative test cycle.

4. Localized OLTP pain: the unseen hotspot

A deployment can go sour when a high-throughput OLTP process causes severe contention, but only on a narrow range of data. The result is unexpectedly poor performance, but only at certain times. This kind of localized hotspot often goes undetected in development, especially if synthetic workloads and test data don’t reflect the real distribution, intermittency, or volume of production data.

The best way to catch this is to monitor actual usage patterns in production and watch for signs of increased blocking or row-level lock contention after each release. Useful indicators include spikes in lock wait times, blocking chains, or queries whose performance deteriorates unpredictably under load.

Query telemetry tools like SQL Server’s Query Store or PostgreSQL’s pg_stat_statements provide the data to detect these issues, while monitoring tools like Redgate Monitor can help surface them with alerts and track trends over time.

5. Deployment script fails midway

When a deployment script fails midway, whether due to an unexpected error, environmental issue, or missed dependency, it can leave the database in an inconsistent state. If there’s no automated rollback, or if partial schema changes make rollback impossible, the clean-up can be long and difficult.

Flyway : ERROR: Migration of schema 'dbo' to version "1.1.7 - Add Indexes" failed! Please restore backups and roll back database and code!

At line:1 char:1

+ Flyway @MyArgs migrate -target="1.1.7"

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : NotSpecified: (ERROR: Migratio...abase and code!:String) [], RemoteException

+ FullyQualifiedErrorId : NativeCommandError

This happened to me only once, when the company I worked for decided that a staging environment was an unnecessary part of the deployment process. It isn’t, of course. The release candidate should always be deployed to a staging environment, running a restored backup of the production server, and put through the full automated test cycle.

In this case, the failure happened at 3 AM, when good decisions are rarely made. My advice? Don’t attempt overnight deployments. With a tool like Flyway and a test cell seeded with realistic test data, you’ll be able to run the tests that will catch these issues much earlier, so the team can move toward smaller, more frequent deployments during business hours.

“Some say, if you have a good process, you can deploy whenever you want. But if it’s 5 o’clock, or a Public Holiday weekend, why take the risk? With the best process in the world, something could always go wrong.”

Ryan Hird, Technical Lead at Bennetts

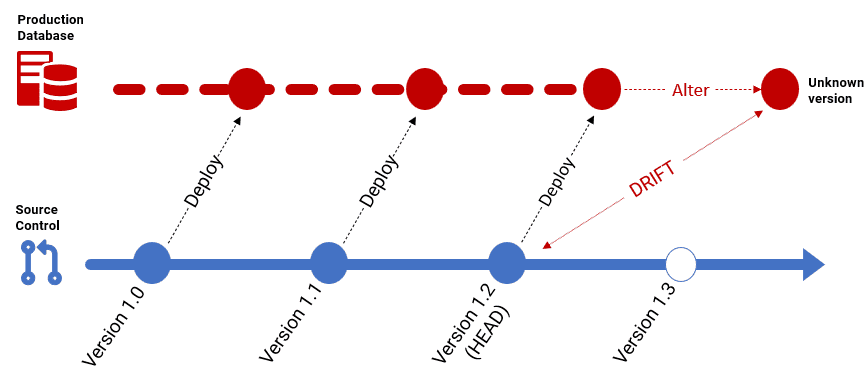

6. Version drift between environments

Drift happens when changes are made directly to a database, outside the controlled deployment process. Automated drift checks are essential for production deployments, to prevent failures caused by unexpected changes, such as ad hoc or emergency fixes applied manually.

In a migration-based approach, it’s equally important to maintain version consistency all the way from development to production. There’s no point in the QA team rigorously testing the “V3” release candidate if it doesn’t exactly match the V3 produced from the versioned code base, due to drift. What we test and sign off must be exactly what gets deployed.

Since Flyway tracks the version of every database it migrates, and using Redgate’s schema comparison engine can also detect any drift from the expected state. Version consistency ensures the team is always testing the correct version, and that what’s released is exactly what was tested and approved.

7. Delayed-impact deployment mistakes

Often, a deployment failure won’t surface until long after the release is complete. A wide range of issues can cause this: table changes that break dependencies in views, functions, or procedures; missing indexes; errors in constraint logic; datatype changes with hidden incompatibilities; changes to scheduled jobs; or faulty data migration for new columns or tables.

“The most stressful deployments fail silently…everything seems fine, until, days later, a client reports a problem, an irate manager appears behind you demanding answers, and there’s no quick fix.”

Thomas Rushton, Principal Data Platform Engineer at Coeo

There’s a common thread to these delayed failures: they become obvious as soon as that part of the system, such as an overnight reconciliation process, is tested thoroughly under a simulated workload. This testing must happen in development, supported by an automated test library that provides broad coverage. Staging should then use the same test library to confirm the new release works correctly with production data. It’s also only in Staging that any required DCL changes for production can be safely verified.

8. Collation or locale mismatches

This is a favorite trick of experienced testers: switching locale settings to see what breaks. It’s all too easy to overlook collation rules in string comparisons, or to assume that date and currency formats will behave the same across locales. A database that works perfectly in en-US can fail horribly in en-GB.

Even more revealing is to test using a right-to-left language or one with pictographic characters. These can quickly expose subtle bugs in string handling, formatting, or display logic. Issues like these often slip through standard test cycles and are only caught by deliberate, exploratory testing that probes system assumptions.

‘9. The ‘Act of God’

Sometimes, fate intervenes in more literal ways. At a large multinational enterprise where I once built database applications, they were developed with iron discipline. Every precaution was taken, and the test department ruled development. I had my own personal tester! They even built their own data center in the countryside, big enough to be visible from the motorway.

On one occasion, a major release went out after six months of rigorous testing. As the Ops team stared out the window, sighing with relief that it was done, there was a hellish noise and a passenger jet crashed into a field near the motorway, narrowly missing the data center. Mercifully, the passengers survived, along with the data center, and the release.

You can’t plan for everything, but you can and should expect the unexpected and design your systems for resilience and fast recovery. Rigorous testing is essential, but so are working backups, source control, and a deployment process that assumes that, even on the calmest day, something might still go wrong.

Conclusion

Before you implement a feature, ask: “How will we test this?” Visualize every stage it touches, each test, edge case, and interaction. Rapid release is only safe when testing is continuous, automated, and tied to strict database versioning.

We need a cultural shift: testers integrated into development, and creative people rewarded for breaking things before users do. Call it DevTestOps. Call it common sense. But let’s give testing back its pride of place in database work.

If you can automate testing, tie it to strict database versioning, and maintain a test cell for parallel testing, as a tool like Flyway enables, then you can promise faster, more frequent, and safer releases. Equally important is to monitor testing with a tool that provides alerts designed to look for signs of some of the problems I’ve listed in this article and warn developers early about problems that could derail a deployment.

Tools in this post

Redgate Monitor

Real-time multi-platform performance monitoring, with alerts and diagnostics