What is Test Data Management (TDM)?

In a world of too many acronyms and initialisms, we’re here to talk about one more – TDM, aka DevOps test data management.

What is DevOps Test Data Management?

Like a cliché best-person wedding speech, let’s start with a definition. Gartner says test data management is ‘the process of providing DevOps teams with data to evaluate the performance, functionality and security of applications…it typically includes copying production data, anonymization or masking and virtualization.’

How does it support DevOps and Database DevOps?

Database DevOps is all about addressing the data bottleneck in the software development lifecycle. By introducing data (or better yet, good-quality data) into your testing, you can improve release quality, decrease time to market and reduce risk.

Why should I care about it?

Test data management (TDM) plays a critical role in improving the quality of your software releases, speeding up those releases and the processes around them, and reducing the risk of non-compliance and data breaches.

DevOps TDM touches processes across the whole software development lifecycle (SDLC). So, whether you’re a Developer, DevOps Manager or Platform Architect, here’s why T, D, and M should be your new favorite letters – and what the right DevOps TDM solution can do for you:

Developers, Testers, QA Teams

- Develop and test with confidence - bring production-like data into lower environments, so you can trust your testing.

- Rapid and secure self-service - self-serve the data you need, when you need it, with sensitive data handled.

- Simplify your workflow - enjoy uninterrupted working and reduce the number of bottlenecks throughout your day, so you can work efficiently without stepping on your colleagues' toes.

DBAs, DevOps Managers

- Improve the quality of software releases - fresh, realistic data in lower environments enables consistent and confident development and testing, reducing your time to market and improving the quality of your releases.

- Simplify workflows - accelerate data provisioning for test and development by enabling developers and testers to self-serve dedicated compliant environments. Streamline and automate the data provisioning workflow so you can deliver predictable value into the hands of your customers.

Platform Engineers, Architects, Site Reliability Engineers, Data Protection Teams

- Improve the developer experience - minimize disruption, encourage collaboration and enable automation so teams can do their best work.

- Automate test data for CI/CD - automate the delivery of high-quality test data as part of your CI/CD pipeline. Enable shift-left testing for better quality code while minimizing CI/CD runtimes.

- Secure data and scale confidently - simplify data security and ease the compliance burden. Safeguard customer data in development and test environments, and control data access.

What are some typical test data management capabilities I should look out for?

- Data virtualization - a virtualized, lightweight copy of a database is used. It behaves like an exact replica of the original but takes up far less storage.

- Subsetting - creates a slice of a database.

- Synthetic data - data is synthetically generated; no original data is used.

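- Data classification and deterministic masking - PII and other sensitive data are identified and obfuscated with fake but realistic values. It’s a bit like synthetic data generation, but only the sensitive data is replaced. ‘Deterministic’ means the same input value is always masked to the same output value, so masked data stays consistent wherever that value appears.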
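
To make the subsetting and deterministic masking items above a little more concrete, here is a minimal Python sketch. It is an illustration only, not how Redgate Test Data Manager or any particular tool works. The sample rows, the `MASKING_KEY`, the `FAKE_FIRST_NAMES` pool and all function names are hypothetical, and using a keyed hash (HMAC) to get repeatable masked values is just one assumed approach.

```python
import hashlib
import hmac

# Hypothetical key for illustration; a real setup would load it from a secrets store.
MASKING_KEY = b"example-key-not-for-production"

# Hypothetical pool of realistic-looking replacement names.
FAKE_FIRST_NAMES = ["Alex", "Sam", "Jordan", "Taylor", "Morgan", "Casey"]

# Hypothetical sample data standing in for two related production tables.
CUSTOMERS = [
    {"id": 1, "name": "Ada", "email": "ada@corp.test"},
    {"id": 2, "name": "Grace", "email": "grace@corp.test"},
    {"id": 3, "name": "Linus", "email": "linus@corp.test"},
]
ORDERS = [
    {"order_id": 10, "customer_id": 1},
    {"order_id": 11, "customer_id": 2},
    {"order_id": 12, "customer_id": 1},
]


def _stable_number(value: str) -> int:
    """Turn a value into a repeatable integer using HMAC-SHA256."""
    mac = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256)
    return int.from_bytes(mac.digest()[:8], "big")


def mask_name(name: str) -> str:
    """Replace a name with a fake one; the same input always gives the same output."""
    return FAKE_FIRST_NAMES[_stable_number(name) % len(FAKE_FIRST_NAMES)]


def mask_email(email: str) -> str:
    """Replace an email with a fake address, ignoring letter case in the input."""
    return f"user{_stable_number(email.lower()) % 1_000_000:06d}@example.com"


def mask_customer(row: dict) -> dict:
    """Mask only the sensitive columns; keys such as 'id' are left untouched."""
    return {**row, "name": mask_name(row["name"]), "email": mask_email(row["email"])}


def take_subset(customers: list, orders: list, keep_ids: set) -> tuple:
    """Keep the chosen customers and only the orders that belong to them."""
    kept_customers = [c for c in customers if c["id"] in keep_ids]
    kept_orders = [o for o in orders if o["customer_id"] in keep_ids]
    return kept_customers, kept_orders


if __name__ == "__main__":
    subset_customers, subset_orders = take_subset(CUSTOMERS, ORDERS, {1, 3})
    for row in map(mask_customer, subset_customers):
        print(row)
    print(subset_orders)
```

Because the masking is keyed and repeatable, the same customer would be masked identically in every table and on every refresh, while the subset keeps related rows together so foreign keys still line up.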

What does good test data look like?

If you’re familiar with the ‘3 Vs’ of big data, you might recognize these categories, which are useful when thinking about what good test data looks like:

1. Variety - your test dataset needs to represent production, whether it’s a subset or a masked copy. This means any test and dev data should accurately reflect the variety of data found in production.

2. Volume - the volume of data you need will differ depending on the type of testing or work you’re doing, but it’s important to have a dataset large enough to be representative. This means ensuring you have the right volume of data while avoiding costly storage requirements.

3. Velocity - bringing data into your DevOps process shouldn’t slow it down. In fact, it should do the opposite. Making sure you have data that addresses points 1 and 2, while being quick to self-serve and refresh, is key to keeping your processes agile.

4. Vulnerability - with test data management, there’s an added ‘V’ to consider: vulnerability. Throughout all of the above points, you must consider: is your customers’ PII safe, or are there vulnerabilities in the process?

If you’re keen to know more about test data management, and how Redgate can help you, check out our test data management tool here, or get in touch to chat to our teams!

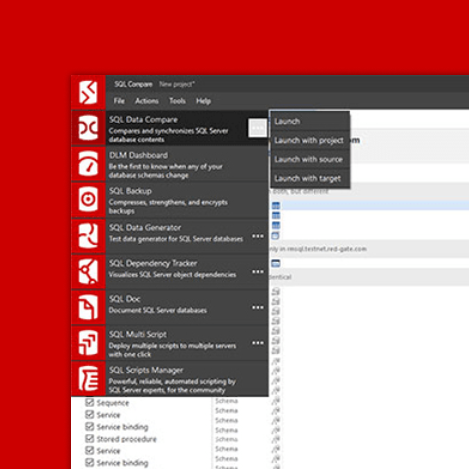

Redgate Test Data Manager

Improve your release quality and reduce your risk, with the flexibility to fit your workflow.