The promise – and the perils – of GitHub Copilot

There’s been a lot of talk about GitHub Copilot recently, loudly touted as “Your AI pair programmer”. According to GitHub, Copilot for Business brings the power of generative AI to engineering teams, accelerating the speed of software development and innovation.

At the back end is OpenAI Codex, a modified version of the GPT-3 Large Language Model (LLM) used in ChatGPT. At the front end it integrates with code editors like Visual Studio and JetBrains to automatically generate code. It does so either by providing autocomplete-style suggestions as developers code, or in response to a developer writing a comment about what they want the code to do.

It’s joined the increasing variety of AI tools like ChatGPT and Google Bard, DALL-E and Stable Diffusion, all of which promise to change the way people write content, develop code, and create images.

But does it live up to the promise? Just how good at pair programming is it? Is it all it’s cracked up to be? To find out, I spoke to Jeff Foster, Director of Technology & Innovation at Redgate, about Redgate’s recent decision to introduce GitHub Copilot to the engineering teams.

Why did you initially look at GitHub Copilot?

I’d seen the press that touts the productivity gains and I’m a big fan of these Large Language Models – have been for a long time. Who wouldn’t want to use a tool on steroids that gives you a productivity boost? It’s difficult not to be super excited by it.

Plus, GitHub Copilot is one of the few tools that has a business-level subscription, which gives us the ability to manage it centrally. From a data privacy and protection point of view, GitHub Copilot for Business also has a clear privacy statement that states: “Copilot for Business does not retain any code snippets data.” That gives us some guarantees that the code we’re typing isn’t going to be used to train a model that hands our code to our competitors.

The other thing the GitHub business subscription gives you is that it won’t emit code that’s an exact duplicate of an open source project. There’s a setting that basically says Copilot will not produce suggestions of more than 150 characters that are copied verbatim from an existing repository somewhere. We’ve already got other tooling in place that checks the licenses of open source code and does the inspections and security steps that you get through a CI process, but that setting put the minds of our legal team at rest.

How did you assess its capabilities to ensure it was fit for purpose?

The effort has been driven primarily through engineering, so in terms of actually making the decision for Redgate to try it, that was down to me. I’d played with it on a personal account, as had a number of other people at Redgate, and just heard things on the grapevine about how useful it was. So we made the decision to enable it for every engineer at Redgate for a three-month evaluation.

How did you introduce it?

It was literally just turn on the switch for everyone and shout on Slack that it’s available. It’s the sort of technology that sells itself. Once it’s set up, it’s like having another programmer who’s just typing ahead of you. Sometimes it will be garbage because it’s not aligned with you. Sometimes it will be super useful and save you ten minutes of typing.

Is it really like having a pair programmer beside you?

In lots of ways, yes. I’ve got someone who can add comments to my code. I’ve got someone I can have a dialogue with, like “Can you simplify that?”, “Could I make that simpler?”, “What are the test cases I could generate for this?” I’ve got this conversation I can have almost with myself, and it doesn’t interrupt my flow.

Are you and the developers using it to solve a problem, or to write code for you?

It’s both at once. If I write the comments in code, to explain what I’m going to do, it will fill in the code for me. If I write the code, and it’s similar to other patterns in the code, it will fill that in for me. It uses the context of the files I’ve got open and the text around it to try and complete it. That’s how these LLMs work. So it’s all things to all people. I just need to start writing and it will fill in the rest. It won’t always be perfect and, in fact, it’s probably wrong more often than not, but it’s still a start in the right direction.

Is there a recommended way for developers to use it?

They can use it how they like because it fits in with different workflows. For someone like me, I will be quite accepting of Copilot’s suggestions and then happy to fiddle about with it afterwards and correct whatever mistakes it makes. Others like to structure their code more top down. So they’ll write some comments that describe what the code is going to do, and then they’ll go back and use Copilot to fill in the blanks.
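To make the comment-first workflow concrete, here is a minimal, hypothetical sketch in Python. It is not Redgate code and not real Copilot output: the leading comment is what a developer might type, and the function body is the kind of completion an assistant could plausibly offer for the developer to accept, adjust, or reject. The function name and behavior are assumptions chosen purely for illustration.

```python
# Hypothetical illustration of comment-first coding.
# The developer writes only the comment below; the body stands in for
# a suggested completion that still needs to be read and reviewed.

# Return the median of a list of numbers, or None if the list is empty.
def median(values):
    if not values:
        return None
    ordered = sorted(values)
    mid = len(ordered) // 2
    # Odd length: the middle element. Even length: mean of the two middle ones.
    if len(ordered) % 2 == 1:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2
```

Whichever style a developer prefers, the suggestion is only a starting point: it is the developer who decides whether the empty-list behavior or the handling of even-length lists is what the codebase actually needs.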

Oh, and some people don’t like it at all. There’s a cohort who think they know better and, while that may well be true today, I’m not so sure it will be true tomorrow. Engineers need to learn to embrace this. This is the future. It’s semi-assisted coding. The need for the human is still there but it’s like having another integrated development environment. You need to bring that along on the journey with you to help you solve problems faster.

How do you check the quality of the code it generates? Are there peer reviews or are you using Test Driven Development?

All code at Redgate is peer reviewed because we want to write ingeniously simple code, which basically means if you can’t explain it to your co-worker, it shouldn’t be going into the codebase. So if Copilot does generate something fantastically complicated, we’ll take that apart and simplify it, because that’s what we do at Redgate. We also write tests to make sure the code does what we think it does. Again, that’s just part of how we work, with or without Copilot.

We’ve still got all of the guardrails around the outside to make sure that we’re not accidentally deleting code or sending dodgy data off anywhere, and we always test and inspect the code that Copilot writes, just as we would if a human had written it. We treat it with that same level of respect, even if respect is a strange word in this respect.
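As a rough illustration of treating generated code like any other code, the sketch below pairs a small helper with the tests a reviewer might expect alongside it. Everything here is an assumption for the example: `slugify` is a hypothetical function standing in for an accepted suggestion, and the tests are plain Python assertions rather than any specific framework or process used at Redgate.

```python
import re


def slugify(title):
    """Hypothetical helper standing in for an accepted suggestion."""
    # Collapse every run of non-alphanumeric characters into one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    # Drop any hyphen left at the start or end of the result.
    return slug.strip("-")


def test_slugify():
    # The tests pin down the behavior we expect, regardless of who
    # (or what) wrote the implementation.
    assert slugify("Hello, World!") == "hello-world"
    assert slugify("  Copilot   at Redgate ") == "copilot-at-redgate"
    assert slugify("") == ""


if __name__ == "__main__":
    test_slugify()
    print("all tests passed")
```

The point of the sketch is the pairing, not the helper itself: the same review and the same tests apply whether the first draft came from a colleague or from a tool.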

Would you say that’s what’s needed to avoid technical debt when using a tool like GitHub Copilot?

That’s exactly the case. If you give a bad carpenter a faster hammer, they’re going to build you a crap table in half the time. If a company is prone to technical debt, if they haven’t got any of the practices and guardrails in place, this is like giving them a coding machine gun to spit out bad code.

You’ve got to have the fundamentals like decent code reviews and the right kind of organizational culture. One thing I really impress on Redgate engineering teams is that we review each other’s code: we make it simple. If you’ve got that, then you’re protected against technical debt, whether Copilot writes it, or some junior developer writes it, or I write it when I’m having a bad day and haven’t had enough coffee. All of those are equally likely to produce bad code, but the system should stop that happening.

Does Copilot increase the quality of code it generates? Or does it depend on the right system and guardrails being in place to check, review and test the code?

Sometimes Copilot will increase the quality of code. You might, for example, ask it: “How do I solve this using .NET 7?” and it already knows. It’s read all the documentation; it knows the features. So there’s just as much opportunity for it to simplify your code and provide new insights as there is to scattergun rubbish everywhere.

That’s where peer reviews come into play because whoever is doing the review will bring a new perspective to the party. The review will either amplify the cleverness of Copilot, or perhaps nullify it. That’s why we do it.

What about speed? Has Copilot increased the speed at which code is created?

This is one of those questions I never really want to answer because the speed at which code is created is kind of irrelevant. From a Redgate perspective, I’m interested in the value that we generate. So whether it’s 1,000 lines of code or one line of code, it’s all about solving the right problem.

At Redgate, we probably invest ten times more time finding the right problem to solve than on the actual coding. That said, I suspect Copilot does make the coding faster, but because we spend so little of our time coding compared to thinking about the right problem to solve, I don’t think it’s going to make Redgate ten times faster, if that makes sense.

How would you recommend IT teams outside Redgate adopt GitHub Copilot?

Firstly, make sure that you’re not just blindly assuming Copilot will do the right thing. Go in with the experimental mindset that there is no one best way to use this technology. You should create an environment where your developers can learn how to use it in your context, and share that experience so that everyone can get the best out of it. It’s so new, I don’t think there is a huge list of best practices.

If you’ve got technical debt problems, Copilot is not going to help. If you want to release faster, Copilot is not going to help. It’s all about having the fundamentals already in place: those guardrails for continuous delivery and continuous integration, like peer reviews and test-driven development or some other way of being more confident in the code that’s being delivered to your customers. In fact, I probably see those as precursors to getting the most value out of a tool like Copilot.

If you’re already performing well, I think Copilot will take you to the next level. If you’re not performing well as an organization, and you’re shipping bad code, it’s going to make things worse.

Summary

As we’ve seen, GitHub Copilot promises a lot – and can deliver a lot, providing there are guardrails in place to maintain the quality of the code it delivers. A notable outcome of Redgate’s three-month evaluation was the feedback from the engineering team.

In terms of productivity, 67% of developers agreed that GitHub Copilot made them more productive. That sentiment was also seen in the freeform responses about how they felt about the tool, which ranged from “Don’t take it away!” and “When used correctly, it can be a gamechanger and a lifesaver”, to “Copilot gives more bad suggestions than good, and reading those takes effort.”

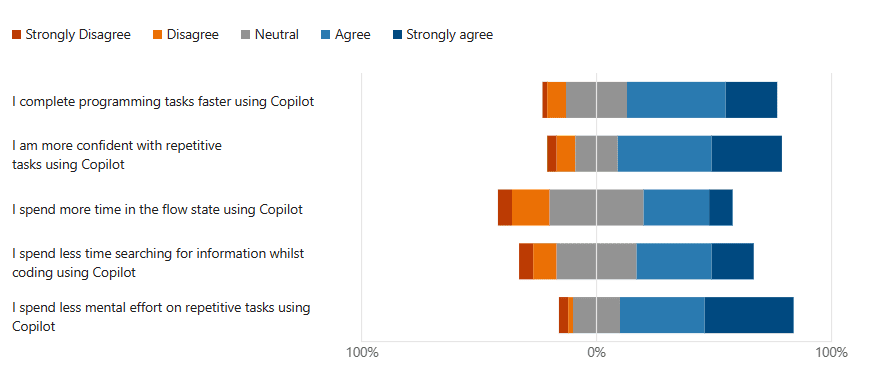

In terms of efficiency and flow, the sentiment was very positive:

What’s interesting here is that the biggest advantage of GitHub Copilot isn’t the increased speed at which code can be generated, which is often seen as the major incentive for introducing the tool. Instead, it’s that engineers spend less mental effort and feel more confident when working on repetitive tasks.

As a result, GitHub Copilot has now become part of the default toolset used at Redgate. As Jeff Foster concludes: “As Copilot evolves, I expect usability to improve alongside the quality of suggestions. Copilot X offers a tantalizing picture of the future, and we look forward to that being generally available.”

If you’d like to know if you can also use AI chatbots like ChatGPT and Bing to write content for you, read: Can ChatGPT be your next Technology Writer?