Problems and Solutions for Agility with Legacy Monolith Databases

Legacy databases can have nice things, too

DevOps processes and tooling are terrific for Greenfield projects -- but they also add significant value to development work on your legacy databases.

This is a big topic, so here's a clickable table of contents for what I cover in this post:

- Can legacy databases work with agile development and DevOps techniques?

- Version Control, builds, tests, and automation reduce the pains associated with legacy database scenarios

- Concerned about conflicts? Embrace Git, especially branching

- Invalid objects, circular dependencies, or slow builds? Use new 'baselining' techniques to begin right away

- Cross-team dependency problems? Document your database schema as you go

- Splitting up a monolith? Build automated tests that align with your architecture plans

Can legacy databases work with agile development and DevOps techniques?

I often hear a variation of the following concern from teams who are preparing to modernize their development practices for database development:

We're ready for DevOps, but only for new databases.

--Managers and Tech Leads

When I ask why this is the case, I always find that the reason is NOT because the older databases are obsolete. Every time I've had this conversation, the legacy databases in question are critical to the business and are under very active development-- and will be for some time.

Instead, I've found that teams don't believe that modern development techniques will work for legacy databases where:

- Multiple teams, some with a large number of engineers, work against objects in the same schema

- Some of these teams may take a long time to ship changes

- Complex patterns are in place such as circular dependencies between multiple databases

- Lack of automated builds have created databases where invalid objects exist

There are real reasons why folks believe these hurdles can't be overcome: it's true that some tooling doesn't work well with circular dependencies and invalid objects. It's also true that release management can be tricky when working with multiple teams with different deployment cadences -- but the truth is this scenario will be tricky no matter what!

Version Control, builds, tests, and automation reduce the pains associated with legacy database scenarios

One blocker is simply the word 'DevOps'. Many of us have so much baggage associated with entrenched processes and pains for legacy database development that it's almost impossible to imagine using 'DevOps' in the same sentence.

Instead of 'DevOps' or 'Agile', think about the successful tools and processes in use in your organization already with applications. You are probably using a Version Control System (VCS) such as Git, automation to build code shortly after it's checked in, automated tests to build quality into the code, and other forms processes and tooling to enable developers to have reliable, private development environments where they can experiment and also validate their changes. All of these tools and processes can be used with your databases -- even your legacy databases.

These tools and processes reduce the pains teams have with legacy database environments by:

- Helping people across teams quickly create accurate development environments -- even when multiple databases or production instances are involved, and complex dependencies exist between them

- Providing ways to automate documentation, merge code, and review changes across teams with significantly lower risk of errors (as compared to sharing code via shared databases or a file share)

- Creating a foundation for test automation, which helps identify errors early before they become an issue between teams, and which also can help prevent complex dependencies from increasing

- Dramatically reducing the toil associated with resetting test and user acceptance environments whenever needed due to changing deployment schedules

At Redgate, we've found that even as teams work to evolve their architecture using a variety of database technologies, they still need to actively develop and deploy changes to their legacy databases -- and that these teams are the most successful when they can use the same workflows to continuously improve speed and quality for changes to all databases in their environment. For these reasons

Concerned about conflicts? Embrace Git, especially branching

It may seem counterintuitive, but isolating development work is critical to working together in a modern Version Control System -- even if you have a large team.

As I wrote in "The Manager's Guide to Git Training for Database Administrators," branching is a critical element to Git. Branching allows developers to:

- Have an isolated place to work on changes, which can be maintained both in their local repo and which can be pushed to a central repo to safely share with others for review and feedback

- Frequently merge in changes from the main code line as well as other active developer branches as needed, in a controlled way

When combined with dedicated database development environments, Git branches can be associated with dedicated databases and updated on the fly with automation as needed. This enables developers to quickly change contexts to review changes from other teams for the same database, without ever losing their own work.

This may seem like a big change, but the good news is that teams on your organization who work on application development are very likely already using these patterns successfully today. Some of these folks may already be part of the teams who work with your legacy databases, or they may be able to temporarily work with you and serve as agents of change.

Invalid objects, circular dependencies, or slow builds? Use new 'baselining' techniques to begin right away

Databases are like everything in life -- they are rarely perfect. For a variety of reasons, if you aren't using version control, builds, and testing for your databases, it's quite easy for ugly patterns and even invalid code to be present in your production environment. Because of this there are technical concerns for some databases which may slow down your ability to get started with some tooling in the marketplace.

Due to the continuing importance of legacy databases in ongoing development, we have invested in tooling and research on ways of working at Redgate to enable you to overcome these problems and get started with legacy databases right away. You should not have to "fix" problems in your database to get started with DevOps -- in part because that dramatically reduces your chance of ever making progress.

This doesn't mean that you shouldn't ever improve the quality of your database -- you should! But having your database in version control and implementing automated builds, tests, and workflows which make it easier for you to validate code quality and track the lineage of changes enables you to improve quality with less pain, lower risk, and more reliability. Additionally, we frequently find that teams are most successful at paying down technical debt when they can do so with incremental changes over time.

To enable teams to get started quickly, even with circular dependencies, invalid objects, or databases with huge schemas, Redgate has introduced technology that allows you to use lightweight clones to quickly get these tricky databases into source control. Clone-as-baseline technology uses a representation of the production state of your database -- no matter its flaws -- as the "base" of new changes you write, allowing your team to truly shift left simulated production deployments in development environments and code builds. This technology also has the perk of producing faster, more lightweight builds for very codebases with thousands of complex objects and dependencies.

Cross-team dependency problems? Document your database schema as you go

A common concern about legacy databases is that it can be very difficult to understand what an object is used by, and what may be impacted if an object is changed.

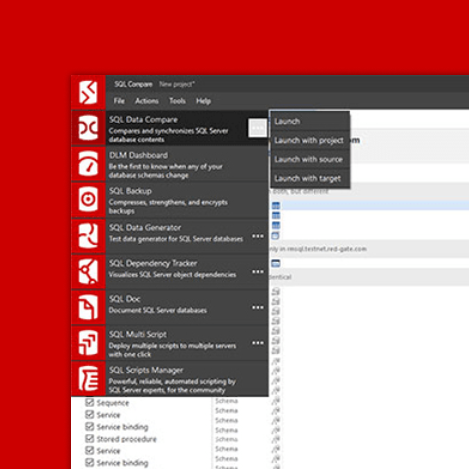

While some "programmable" database objects (for example, a stored procedure), allow comments in the code of the procedure itself, other very important objects like tables don't allow this. Look into the database platform and tooling you are using to find if there is a way that you can automate documentation of these objects, and store that documentation in your Version Control System along with the database schema itself. For example, at Redgate we have tooling that enables developers to easily document SQL Server Databases using Extended Properties, which are stored in the VCS. We also have the option to publish documentation in your automated builds.

Documenting database objects in ways that integrate with Version Control itself has big benefits: this makes the documentation visible to the developer as they check in the code -- there is no risk of forgetting to look up the documentation.

But let's be real -- nobody has time to sit down and document an entire schema, right?

People aren't going to actually do this, Kendra.

---You, probably

Well, nobody should sit down and do this all at once. That isn't realistic. Instead, team members should add documentation as they work on the database, doing a little bit at a time... and there is a way that you can make sure it happens!

You can create an automated test that checks if an extended property has been created associated with the table, column, view, etc. which is being checked in, and you can have that test run as part of the automated build required for a Pull Request. This testing pattern is similar to what we'll over in the next problem and solution...

Splitting up a monolith? Build automated tests that align with your architecture plans

Many teams are working on reducing the number of dependencies for their critical production databases over time. I hear this especially from teams who work in Financial Services, but also from teams in other industries like Health Care, Technology Services and Consulting, and Retail. Approaches for reducing this complexity vary: sometimes the goal is microservices, sometimes it is a different service-based architecture which is, simply, less of a convoluted mess.

One popular approach to reduce complexity is to first encourage the use of schemas within the existing database as a preparatory step. Using schemas helps make ownership of objects clear and can pave the way to more dramatic architecture changes later on. Schemas don't solve everything, however, because complex dependencies may exist between schemas. The goal is to incrementally reduce the dependencies between schemas over time. However, it can be very easy for changes to accidentally introduce new dependencies between schemas over time without anyone realizing what has happened.

To combat this problem, I recommend the following:

- Use a Pull Request based workflow

- In your orchestrator or build tooling, set up rules to identify the name of the schema changed for a database object, and to automatically assign someone from the team who "owns" that schema to review a pull request based on the name

- Create database tests and static data tables that document the cross-schema dependencies which exist at the start of your project. As part of pull request automation, the test will check the current cross-schema dependencies in the database and compare them with the static data tables to identify if new cross-schema dependencies have been introduced.

Documentation and dependencies are only two possible uses for database tests. As with other types of automated tests, it's helpful to build up a body of tests over time, and the tests I've described in this post will hopefully just be the first in a series of automated tests which help improve your code quality.

How to get started

I recommend that folks get started with Database DevOps by, well, actually getting started!

I'm a huge fan of Proof of Concept (POC) exercises, which help you get real, hands on experience with the tooling and processes I've described in this post -- but in a safe environment. Research such as the 2019 Accelerate State of DevOps Report also supports that POCs are an effective way to learn and to adopt new processes.

There is no time like the present to start making things better.