How to Improve the Quality of Database Releases using Clones

This article explains how we can use the ephemeral, containerized databases delivered by Redgate Clone to increase database code quality and therefore the stability, reliability, and performance of the databases we release.

Follow the series

▶ What are Clones? – Get Started with Clones – Development and Testing with Clones

Redgate Clone is a Kubernetes cluster-based technology that makes it very quick and easy to create many copies, or clones, of a database, such as for database development and testing purposes. Each clone is delivered as a data container running on an instance inside a Kubernetes cluster and is a fully functioning database, complete with all the data. However, it is only a small fraction of the original size of the database, and so can be created and reset in seconds.

Clones, delivered as ‘virtualized’ data containers, bring to database development all the same advantages that we get with containerized application development, but without the ‘heavy lifting’ associated with copying large data files into each container. The technology is cross-RDBMS. It also allows cloning an entire instance of potentially interdependent databases, rather than just individual databases. Suddenly, regardless of the RDBMS or the complexity of the database system under development, the database is just another ‘standard resource’ that developers can create, use and reset, as often as required.

Clones give developers the freedom to quickly create, test, break and reset versions of databases, without affecting anyone else, or any other process. This article explains how we can exploit these ‘ephemeral databases’ to improve our development and testing processes in ways that will reduce the number of bugs entering the deployment pipeline, drive up the quality of database releases, and so improve the reliability of database deployments to production.

1. Enable dedicated development databases

Traditionally, databases have been regarded as a ‘heavyweight’ asset. Making a copy of a very large database for development or testing work, or refreshing an existing copy, takes many hours, and requires a lot of disk space. This is one reason why teams are often restricted to one shared development database, one shared test database.

Clones, by contrast, have a very ‘light’ footprint. A clone of a 500GB database can be created or refreshed in 30 seconds rather than many hours and requires only megabytes of storage space.

How does Redgate Clone work?

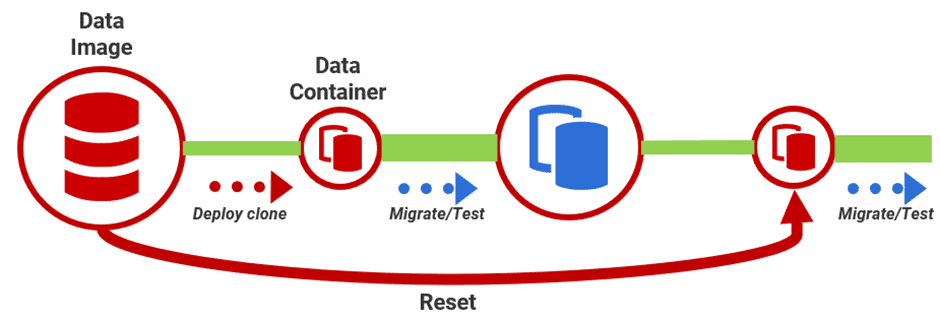

Redgate Clone creates one complete, read-only image of the database files (a data image). It then exploits data virtualization operators in the Kubernetes cluster to allow many database instances to share access to this image. This means that it can install multiple clones (data containers) very quickly, because it doesn’t need to copy the files to every container. Each clone takes up minimal initial storage space because it is using data from the image and only stores locally the data blocks for any changes made to the clone. Please see the documentation for further details.

These clones allow each developer to work on an isolated ‘sandbox’ database, at a specific version, without fear of disrupting the work of others. Developers can reset a clone as required, meaning that development databases cease to be precious and fragile things that must be preserved at all costs.

Developers can experiment, try out new ideas, try out different ways of creating new features to see which one works best, run destructive tests. If a test or experiment messes up a database, then resetting the data container back to its original version takes seconds. The team can save data images for each new version of the database. This is especially useful for cases where you can’t rollback a failed migration (for example, MySQL cannot roll back DDL code within a transaction) since the developer can simply reset the container back to the original version.

This sort of creative freedom tends to significantly improve the quality of the resulting code.

2. Don’t postpone database testing

Database tests have always been difficult and very time consuming to set up accurately and therefore testing is often a slow, separate process, performed exclusively by specialist testers, after the developers have a release candidate.

With clones, the team can do more testing, and more effective database testing, earlier, while they are developing the code. This is often referred to as ‘shift left testing’. If developers run database tests as well as code quality and coding standards checks, before even committing the code, then it prevents problems ever reaching the main branch of development.

Clones aren’t always necessary for running simple unit and integration tests early in development work; it can be done with a build script and a script to load the relevant standardized test dataset. However, they do make certain types of tests, unit or integration, much more viable for developers, such as those that require several copies of the data, or where you want to run a succession of tests on a routine that affects the data or schema.

Also, using clones, the team have the option to maintain several data images per database version, each one containing the test data required for each type of test (integration testing, performance testing and so on). This simplifies test data management and makes it possible to compare test results between versions.

With clones, developers will also be able to test branches thoroughly before merging. They can compare a clone of the development branch at the version when their branch was created to how it exists now, to review whether any of their branch changes potentially conflict with changes committed by others. They can test their migration scripts thoroughly, by spinning up a clone from the latest data image, applying the branch migration script, and then running all the necessary tests to ensure it works as expected.

All this reduces the risk that merging the branch into ‘main’ will introduce errors or bugs that subsequently break the build. Stopping bugs early in development is the most effective way to improve the quality and reliability of database deployments.

3. Run rapid, parallel database testing cycles

Any database test cycle will typically need to: 1) create a copy of the database at the right version, including the data; 2) run the test and assess the result; and 3) tear down the test set up, and reset the database to its original state, ready for the next test.

With a large database, steps 1) and 3) take too much time. Also, tests don’t generally pass the first time. There are usually a series of failures, corrections, reruns, until the code passes. This can mean creating and resetting a database many times.

With clones, this setup-test-reset process is rapid and lightweight, meaning that much more extensive and effective testing can be achieved in the same testing window. Developers and testers can now perform rapid series of test cycles and spin up multiple copies of a database, to run tests in parallel. For example, they can:

- Test different database implementations – a developer can spin up two clones, each implementing the same set of functions or stored procedures but using different techniques, or the same tables with different datatypes or different indexing strategies, and test them side by side to see which performs best.

- Fully test difficult migrations scripts – migrations that perform extensive table refactoring must be tested very thoroughly to ensure that existing data is not affected in any way. With clones this becomes much easier: the developer can establish the two versions of the database, before and after refactoring, as clones, and then run tests to prove that all reports still return identical, correct results.

4. Improve testing accuracy with realistic test data

Certain types of tests, like performance tests or UAT tests, require realistic data. We can create data images that accurately reflect the production system, in data characteristics, volume and distribution, either by a process of masking the production database, or by realistic data generation. Data containers can then be deployed to all development and test systems that require them, quickly and efficiently.

Use of realistic data during development, and in integration tests, will help the team find data compatibility issues earlier in the release pipeline, reducing the occurrence of data-related bugs.

Also, with Redgate Clone, the Ops team can pre-configure the data container as closely as possible to match the production environment. This leads to much greater confidence that the behavior and performance seen in testing will match closely that observed in the production system. It also allows the team to do deployment dress rehearsals that will mimic ‘the real thing’ as closely as possible and give greater confidence that the deployment will succeed.

5. Deploy clones in CI pipelines for continuous verification and testing

In many CI processes, the database is ‘mocked’ since it simply isn’t viable to include it (for the reasons discussed above). Clones, by contrast, are designed to fit into CI processes, where the resource, in this case a database, can be spun up for a quick, specific task, modified as required, and then destroyed and recreated. Clones can be deployed directly into existing build and Continuous Integration services such as Azure DevOps or Jenkins.

The team can build a CI pipeline that automates database builds throughout the development process, continuously verifying that the source code and data files can be used to successfully create the database, with its development data, to the correct version. They can also run integration tests with the ‘real database’. These practices will drive up the quality of the changes delivered, reduce the likelihood of a failed build, and drive down future maintenance costs.

6. Reproduce difficult production bugs, and develop fixes, faster

By testing earlier (see 2, above), we can attempt to minimize the number and severity of the bugs that aren’t detected until after they are deployed to Production, but inevitably they will still occur occasionally.

Improving the quality of database releases also means diagnosing any production bugs quickly and efficiently. Some problems are very difficult to replicate outside the production environment, and without the production data. With clones, the data images can be masked automatically, at the point of creation, so teams can develop and test fixes in a secure environment, on clones with real, but obfuscated, data.

Also, since the clone can be installed in a ‘production-configured’ container, it will help identify issues with other environmental configuration, or dependency, issues that are causing the problem.

7. Enable collaborative branch-based development

With clones and a tool like Flyway to manage migrations, it becomes viable for teams to split each item in the development backlog (new feature, update, bugfix) into a task-based branch, supported by a dedicated database (a clone).

The ability to separate tasks in this way means a developer, or small team of developers, can focus on each specific end-user requirement, leading to higher-quality code. It also encourages DevOps collaboration: with potentially disruptive database changes isolated from other ongoing work, Dev and Ops can work on them in partnership. This left-shifts operational knowledge into the development cycle to fix problems, optimize performance or tighten security. All of which leads to higher quality code and few deployment problems.

Similarly, developers will find branch-switching and collaboration much easier, and without the fear of losing work. They can respond to the demands of end users and changing priorities without compromising quality.

Conclusions

The ‘virtualized data containers’ delivered by Redgate Clone give developers access to self-service ephemeral databases for their development and testing work. They offer all the well-known advantages of delivering an application, in this case a database, in a container, but with none of the maintenance headache, administrative burden or security issues associated with uploading multiples copies of large database files to each container.

Ephemeral, or ‘disposable’, databases enable agile development and testing practices that entail frequent rapid cycles of database development and testing. Developers catch bugs earlier when they are much easier and cheaper to fix. They can work on isolated databases, one clone per branch of development, if required, meaning experimental and destructive changes and tests can be run without fear of disrupting the whole development effort. Changes that affect the data model or data, or that have security or performance implications, can be isolated in a branch, and resolved collaboratively with operational expertise. The resulting code will be higher quality and much safer to deploy without risk of causing disruption to live services.

By incorporating clones into CI pipelines, the team can continuously verify a working database system throughout development. By testing with realistic data, and using a container configured with the same settings as the live production instance, we can find data-related bugs quickly and have confidence that the behavior seen during testing will reflect accurately what will be expected in the production system.