When SQL Server Performance Goes Bad: Implicit Conversions

In this article, you'll learn how to detect and remove a common cause of SQL Server query performance problems: reliance on implicit datatype conversions. We'll use a combination of plan cache queries, extended events, and Redgate Monitor.

When you’re developing a database, the pressure is on to get something that works, using an efficient algorithm. When you are getting close to a release candidate, however, there are some programming habits that must be removed from the code, because they can cause unexpected performance problems. The reliance on implicit conversions, the plan_affecting_convert, is part of the technical debt that you must expunge before the release of the changed code. The use of implicit conversion in a query won’t necessarily cause performance problems unless it forces the query optimizer to use a scan where a seek would be faster, and you won’t get rid of all of the code that causes the warning to happen. However, all your production-quality code must ensure that data that is being compared in a join or a filter is of the same datatype. It’s not hard work, just unexciting.

What is an implicit conversion?

SQL is designed to be obliging. Although it is strictly typed, it tolerates a degree of carelessness in the SQL code it consumes. It allows such things as implicit conversion just so long as the database engine can work out what you want from your SQL Query. It doesn’t have to be good code; any reasonable RDBMS will execute it, but it will grumble quietly about it, if you know where to look. It will certainly raise its eyebrows at anything that causes it to have to select an inefficient query plan. There will be a warning in the query plan that you can see in SSMS or in suitable DMV code. Query performance is often affected, and if it is a commonly executed query, then it will degrade the performance of your application.

Implicit conversions generally happen when, in a WHERE or FROM clause filter of a query, you specify a column with a datatype that doesn’t match the datatype of the column in the table. When two items of data with different data types are being compared in SQL, all the values with the lower-precedence datatype must be converted up to the higher precedence type before the comparison can proceed, and the filter applied.

For example, if you had an EmployeeNumber column in your Employees table, stored as a string, and you decided to specify your filter as an integer, this will cause an implicit conversion on the ‘table-side’, meaning the optimizer must scan every value of that column for every row and convert it to an INT, the datatype of the filter predicate. Conversely, if the EmployeeNumber column was an integer, and the predicate supplied a string, it would just require a conversion of the single parameter value.

By specifying the wrong data type, you render the predicate “unusable” to the optimizer (often referred to as non-SARGable, meaning simply that the “search argument” can’t be used). In some cases, this will have negligible impact, but where it means that the optimizer cannot o use an index that would otherwise have allowed an efficient seek operation, it can cause surprising performance problems. This is most likely to happen if you are converting from string types to numeric types.

What is the performance impact?

Of course, it depends on size of the tables, the datatypes involved, but let’s take the old chestnut from Adventureworks where the NationalIDNumber is a string, an NVARCHAR, but our query supplies it as an integer.

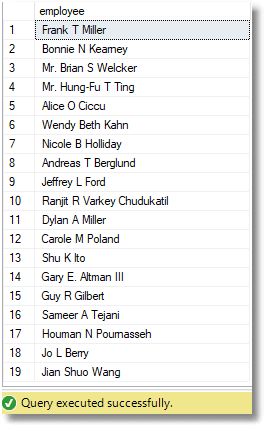

|

1 2 3 4 5 6 7 8 9 10 |

SELECT Coalesce(Title + ' ', '') + FirstName + Coalesce(' ' + MiddleName, '') + ' ' + LastName + Coalesce(' ' + Suffix, '') AS employee FROM AdventureWorks2017.HumanResources.Employee INNER JOIN Person.Person ON Employee.BusinessEntityID = Person.BusinessEntityID WHERE NationalIDNumber IN (10708100, 109272464, 112432117, 11245789, 113393530, 113695504, 121491555, 1300049, 131471224, 132674823, 134219713, 134969118, 138280935, 139397894, 141165819, 14417807, 152085091, 153288994, 153479919, 160739235); |

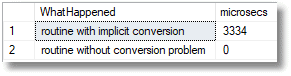

Now that seemed to be OK. There was no noticeable pause when it executed, but then it is a very small table. Let’s just put some figures on this though, using my SQL Prompt snippet (How to record T-SQL execution times using a SQL Prompt snippet) to do simple timings. We’ll take the ‘implicit conversion’ query and run it in a very simple test harness alongside a version that supplies the correct NVARCHAR datatypes in the predicate.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 |

/** Based on code by Phil Factor (https://www.red-gate.com/hub/product-learning/sql-prompt/record-t-sql-execution-times-using-sql-prompt-snippet). **/ DECLARE @log table ( TheOrder int IDENTITY(1, 1), WhatHappened varchar(200), WhenItDid datetime2 DEFAULT GETDATE() ) ----start of timing INSERT INTO @log(WhatHappened) SELECT 'Starting routine' --place at the start --First version where the list of NationIDNumbers are presented to the filter as INTs SELECT COALESCE(Title + ' ', '') + FirstName + COALESCE(' ' + MiddleName, '') + ' ' + LastName + COALESCE(' ' + Suffix, '') AS employee FROM adventureworks2016.HumanResources.Employee INNER JOIN Person.Person ON employee.BusinessEntityID = Person.BusinessEntityID WHERE NationalIDNumber IN ( 10708100,109272464,112432117,11245789,113393530,113695504,121491555, 1300049,131471224,132674823,134219713,134969118,138280935,139397894, 141165819,14417807,152085091,153288994,153479919,160739235) INSERT INTO @log(WhatHappened) SELECT 'routine with implicit conversion' -- <a id="post-1992691-_Hlk46333224"></a>--Now the version where the list of NationIDNumbers are presented to the filter as NVARCHARs SELECT COALESCE(Title + ' ', '') + FirstName + COALESCE(' ' + MiddleName, '') + ' ' + LastName + COALESCE(' ' + Suffix, '') AS employee FROM AdventureWorks2016.HumanResources.Employee INNER JOIN Person.Person ON Employee.BusinessEntityID = Person.BusinessEntityID WHERE NationalIDNumber IN ( N'10708100', N'109272464', N'112432117', N'112457891', N'113393530', N'113695504', N'121491555', N'1300049', N'131471224', N'132674823', N'134219713', N'134969118', N'138280935', N'139397894', N'141165819', N'14417807', N'152085091', N'153288994', N'153479919', N'160739235' ); --where the routine you want to time ends INSERT INTO @log(WhatHappened) SELECT 'routine without conversion problem ' SELECT ending.WhatHappened, DATEDIFF(mcs, starting.WhenItDid, ending.WhenItDid) AS microsecs FROM @log starting INNER JOIN @log ending ON ending.TheOrder = starting.TheOrder + 1 |

Basically, the result, when the SQL was correct, was instantaneous, whereas it waxed slow when we supplied the list of employees as numbers. OK; 3334 microseconds isn’t enough time to eat a sandwich, but this is just a demo: your million-row will be thrashed, guaranteed. Remember that the second query is too fast to be measurable, and you’re comparing the two.

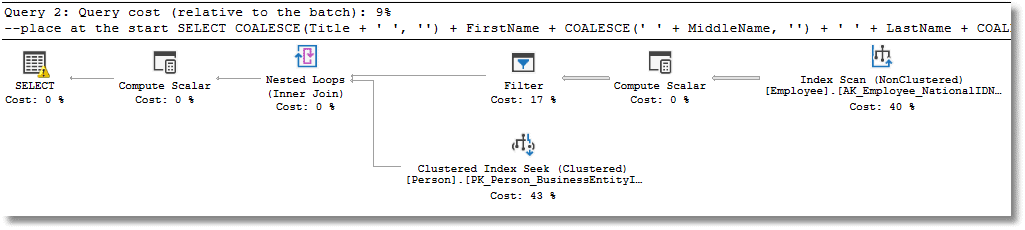

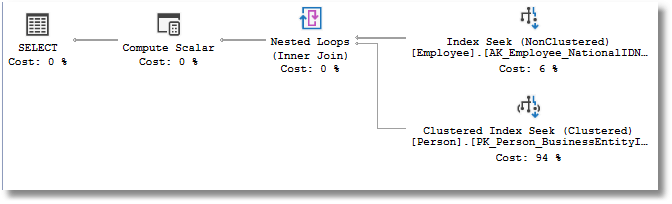

The query plan for the first was …

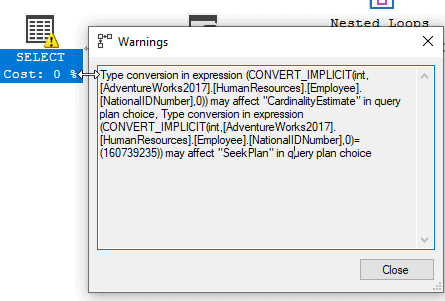

The warning on the SELECT operator is for the ‘plan affecting convert’, which you’ll also see if you have an Extended Events sessions running (we’ll cover that a little later):

The query plan for the second query shows the simple index seek that one would have expected.

Investigating the problem

The “non-SARGable predicate” is just one of many query-related mistakes that can spell trouble for SQL Server performance. In cases where it forces the optimizer to compile an execution plan containing scans of large clustered indexes, or tables, it degrades performance.

If the optimizer is forced to scan every row in a 500K-row table, just to return small number of them, then it will cause avoidable resource pressure. Affected queries will require significantly more CPU processing time, and the optimizer may choose to use parallel execution, speeding the execution of what should be simple and fast query across multiple cores. This will often cause blocking of other queries. Also, many more pages will need to be read in and out of memory, potentially causing both IO and memory ‘bottleneck’.

To detect whether implicit conversions are part of the problem, SQL Server provides two tools:

- The

sys.dm_exec_cached_plansDMV, and other DMVs that provide query plan metadata - The

sqlserver.plan_affecting_convertevent in Extended Events

Using the plan cache

If you have good performance-testers, all they must do is find the code that relies on implicit conversions, by running the database through a range of integration tests. You can then look in the plan cache for query plans from the current database where there has been an implicit conversion on the table-side of the query, as demonstrated by Jonathan Kehayias. This provides all the information you need about the offending queries and columns

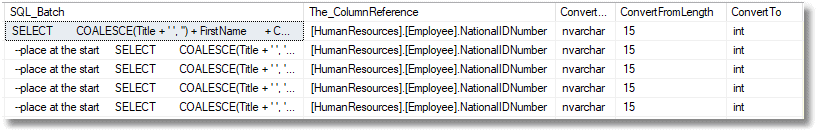

This DMV query returns the statement from the batch that is causing the conversion, the schema, table name, and the name of the column being converted, as well as the original and converted datatypes. It isn’t a fast query, but it gets to the heart of the problem.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 |

DECLARE @dbname SYSNAME = QUOTENAME(DB_NAME()); WITH XMLNAMESPACES (DEFAULT 'http://schemas.microsoft.com/sqlserver/2004/07/showplan') SELECT stmt.value('(@StatementText)[1]', 'varchar(max)') AS SQL_Batch, + t.value('(ScalarOperator/Identifier/ColumnReference/@Schema)[1]', 'varchar(128)') +'.' + t.value('(ScalarOperator/Identifier/ColumnReference/@Table)[1]', 'varchar(128)')+ '.' + t.value('(ScalarOperator/Identifier/ColumnReference/@Column)[1]', 'varchar(128)') AS The_ColumnReference, ic.DATA_TYPE AS ConvertFrom, ic.CHARACTER_MAXIMUM_LENGTH AS ConvertFromLength, t.value('(@DataType)[1]', 'varchar(128)') AS ConvertTo, t.value('(@Length)[1]', 'int') AS ConvertToLength, query_plan FROM sys.dm_exec_cached_plans AS cp CROSS APPLY sys.dm_exec_query_plan(plan_handle) AS qp CROSS APPLY query_plan.nodes('/ShowPlanXML/BatchSequence/Batch/Statements/StmtSimple') AS batch(stmt) CROSS APPLY stmt.nodes('.//Convert[@Implicit="1"]') AS n(t) JOIN INFORMATION_SCHEMA.COLUMNS AS ic ON QUOTENAME(ic.TABLE_SCHEMA) = t.value('(ScalarOperator/Identifier/ColumnReference/@Schema)[1]', 'varchar(128)') AND QUOTENAME(ic.TABLE_NAME) = t.value('(ScalarOperator/Identifier/ColumnReference/@Table)[1]', 'varchar(128)') AND ic.COLUMN_NAME = t.value('(ScalarOperator/Identifier/ColumnReference/@Column)[1]', 'varchar(128)') WHERE t.exist('ScalarOperator/Identifier/ColumnReference[@Database=sql:variable("@dbname")][@Schema!="[sys]"]') = 1 |

So, all those NVARCHARs had to be converted to INTs!

Extended Events

My preferred way to spot this problem is to run an extended events session that captures the sqlserver.plan_affecting_convert event. The great thing about running these is that those places where an implicit conversion has ruined a good execution plan instantly appear when you run the code.

To prevent any embarrassment on the part of the database developer, it is far better to do this in development, so this is one of the extended event sessions I like to have ready on the development server.

Here is the code that defines the extended events session. it is set to just filter for plan-affecting implicit conversions on AdventureWorks2016. You’ll want to change that, obviously, for your database.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

IF EXISTS --if the session already exists, then delete it. We are assuming you've changed something ( SELECT * FROM sys.server_event_sessions WHERE server_event_sessions.name = 'Find_Implicit_Conversions_Affecting_Performance' ) DROP EVENT SESSION Find_Implicit_Conversions_Affecting_Performance ON SERVER; GO CREATE EVENT SESSION Find_Implicit_Conversions_Affecting_Performance ON SERVER ADD EVENT sqlserver.plan_affecting_convert( ACTION(sqlserver.database_name,sqlserver.username,sqlserver.session_nt_username,sqlserver.sql_text) WHERE ([sqlserver].[database_name]=N'AdventureWorks2016')) ADD TARGET package0.ring_buffer WITH (STARTUP_STATE=ON) GO ALTER EVENT SESSION Find_Implicit_Conversions_Affecting_Performance ON SERVER STATE = START; |

This extended event occurs when a type convert issue affects the plan. It returns an expression value that shows the conversion that can cause inaccurate cardinality estimation, or that has prevented the query optimizer from using a seek-based query plan. If performance is affected, then you’ll need to rewrite the query to make any conversion explicit and to ensure that all your filter predicates are of the right datatype!

Now we can query it for all plan_affecting_convert events, and include the text of the SQL batch that caused the problem

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 |

DECLARE @Target_Data XML = ( SELECT TOP 1 Cast(xet.target_data AS XML) AS targetdata FROM sys.dm_xe_session_targets AS xet INNER JOIN sys.dm_xe_sessions AS xes ON xes.address = xet.event_session_address WHERE xes.name = 'Find_Implicit_Conversions_Affecting_Performance' AND xet.target_name = 'ring_buffer' ); SELECT CONVERT(datetime2, SwitchOffset(CONVERT(datetimeoffset,the.event_data.value('(@timestamp)[1]', 'datetime2')), DateName(TzOffset, SYSDATETIMEOFFSET()))) AS datetime_local, the.event_data.value('(data[@name="compile_time"]/value)[1]', 'nvarchar(5)') AS [Compile_Time], CASE the.event_data.value('(data[@name="convert_issue"]/value)[1]', 'int') WHEN 1 THEN 'cardinality estimate'ELSE 'seek plan'END AS [Convert_Issue], the.event_data.value('(data[@name="expression"]/value)[1]', 'nvarchar(max)') AS [Expression], the.event_data.value('(action[@name="database_name"]/value)[1]', 'sysname') AS [Database], the.event_data.value('(action[@name="username"]/value)[1]', 'sysname') AS Username, the.event_data.value('(action[@name="session_nt_username"]/value)[1]', 'sysname') AS [Session NT Username], the.event_data.value('(action[@name="sql_text"]/value)[1]', 'nvarchar(max)') AS [SQL Context] FROM @Target_Data.nodes('//RingBufferTarget/event') AS the (event_data) |

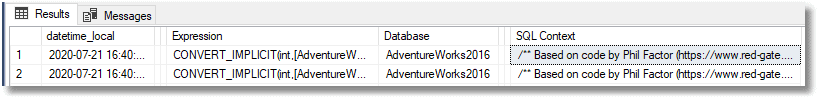

Here’s the result:

Spotting signs of trouble quickly using Redgate Monitor

The ‘art’ of SQL Server performance tuning is, as always, is in being able to spot the signs of trouble early, in the waits and queues that start to form in SQL Server, and correlate this with the processes and queries running on the server at that time.

SQL Server’s performance counters and wait statistics will tell you why requests are being forced to wait, and which SQL Server resource (CPU, IO, memory), if any, is currently limiting performance. The query execution details captured over that period, will then allow you to determine the source of the problem.

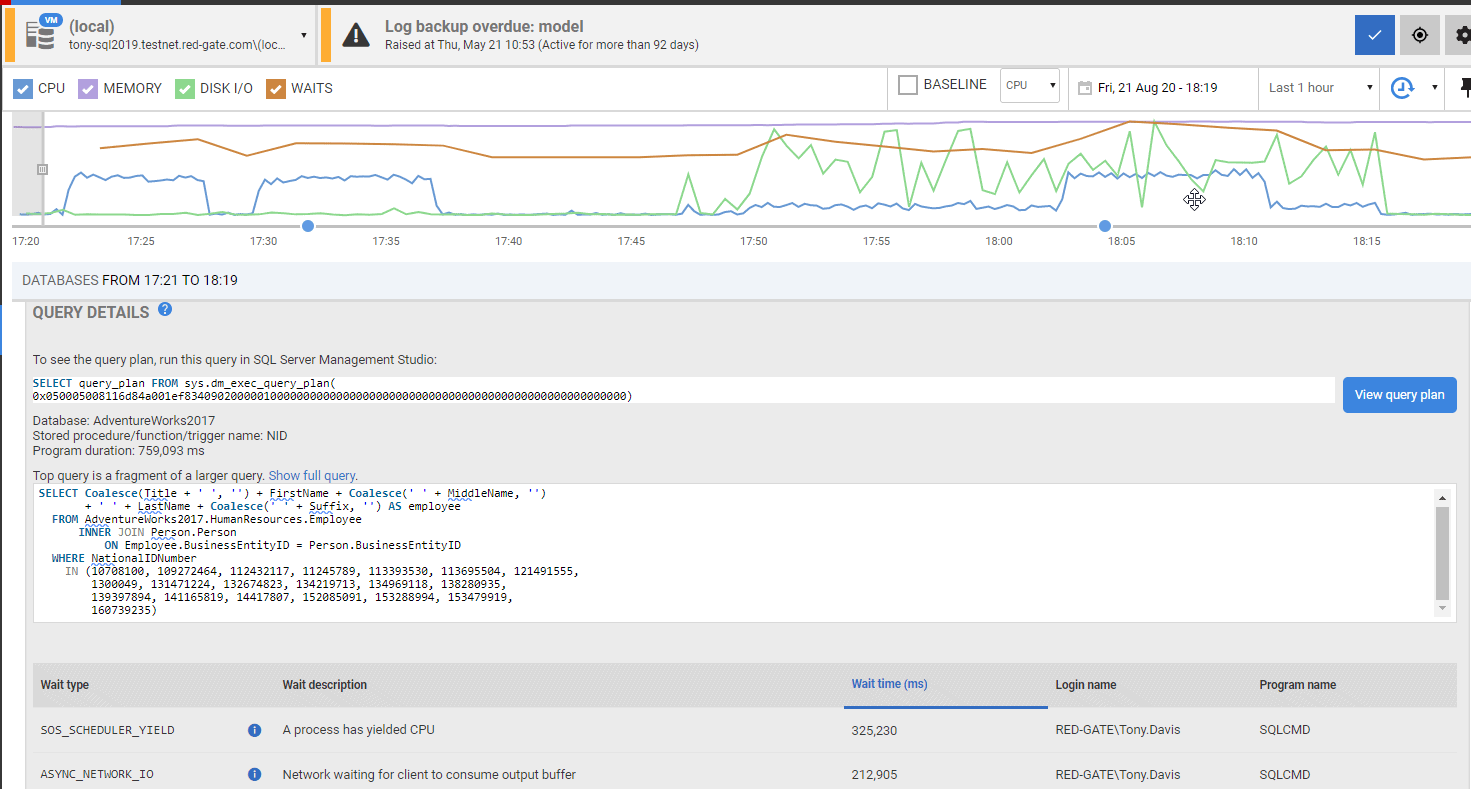

In the following screen, you can see the general pattern of CPU, IO and memory use, along with the wait profiles, for a SQL Server instance, over a period of time when a couple of “Long running query” alerts were raised (the blue circles on the timeline). Below the graph, you can see the details of our previous “NID” query, for Redgate Monitor’s Top Queries list, with associated wait types:

You can view the query plan within the tool, where you’ll see the same type conversion warning on the SELECT operator as we saw earlier, and you can drill into the details using the previous plan cache queries or Extended Events session.

If you use Redgate Monitor to keep an eye on your development and test servers, you’ll prevent most or all these problems from reaching your end users.

If you’re investigating a performance issues on a production SQL Server, you’ll be able to determine quickly, whether it’s a problem you can resolved by tuning the query, or if you can’t, then potentially increasing the capacity or speed of the performance-liming resource.

If you suspect implicit conversions are a strong contributing factor to performance problems, you might consider setting up a custom metric, using a query such as the following, which returns a count of the number of cached plans, for queries executed in the last 10 minutes that took more than 0.1 seconds to execute and which contain implicit conversion warnings.

|

1 2 3 4 5 6 7 |

SELECT Count(*) FROM sys.dm_exec_query_stats qStats CROSS APPLY sys.dm_exec_query_plan(plan_handle) AS execution_plan WHERE Cast(query_plan AS VARCHAR(MAX)) LIKE ('%CONVERT_IMPLICIT%') AND last_execution_time > DateAdd(MINUTE, -10, GetDate()) AND max_elapsed_time > 100000 AND execution_plan.dbid =Db_Id() |

Simply enter the query, and then the instances and databases for which you want to collect this metric. You can collect it for a database, or specific databases, or you could simply remove the filter on db_id, in the last line of the query, and collect it for all databases. You’ll also need to establish the collection frequency (every 5 mins might be a reasonable starting point).

If you see an upward trend or sudden rise in the value of this metric, during periods of server slowdown, you can the following query will list all the queries that contributed to the figure in the custom metric:

|

1 2 3 4 5 6 7 8 9 10 |

SELECT max_elapsed_time, execution_plan.[dbid], Db_Name(execution_plan.[dbid]), SQL_Text.text FROM sys.dm_exec_query_stats qStats OUTER APPLY sys.dm_exec_sql_text(sql_handle) SQL_Text CROSS APPLY sys.dm_exec_query_plan(plan_handle) AS execution_plan WHERE Cast(query_plan AS VARCHAR(MAX)) LIKE ('%CONVERT_IMPLICIT%') AND last_execution_time > DateAdd(MINUTE, -10, GetDate()) AND max_elapsed_time > 100000 AND execution_plan.dbid =Db_Id() ORDER BY max_elapsed_time DESC; |

Alternatively, you might consider a custom metric based on a querying the output of the previous Extended Events session. I’ve described that process in previous articles, see for example: Monitoring TempDB Contention using Extended Events and Redgate Monitor.

Summary

It pays to check warnings from the Query optimizer. The best time to deal with them is when you are tidying up code ready for release, when you have code that has an efficient algorithm and clear purpose. I’d hate to discourage database developers from bouts of wild experimentation and spontaneity, but the race to bring code up to production quality means that all those shortcuts and sketchy routines must be cleaned up. When you are working with development data, a poorly performing section of code may not be obvious, but that’s no excuse. You can see these warnings in the execution plans you see in SSMS, you can see them if you trawl through the cached execution plans via DMVs, and you get reports from an Extended events session. You can’t convince anyone by protesting that you didn’t know. When that hatchet-faced production DBA walks up to your workstation and gives you ‘that look’, you can look in vain for support from any experienced Developer. That sort of stuff mustn’t get out of development.

Tools in this post

Redgate Monitor

Real-time multi-platform performance monitoring, with alerts and diagnostics