Running Structured Database Tests in Flyway

How to run structured Arrange-Asset-Act-Teardown (AAAT) tests, each time Flyway successfully migrates a database, where you can annotate each test script to specify exactly how the test run should proceed, and then save all results and any errors to a simple ASCII report.

This article is part of a series on the requirements and practicalities of database testing, with Flyway:

- Testing Databases: What’s Required?

- Planning a Database Testing Strategy for Flyway

- Test-Driven Development for Flyway Using Transactions

- Basic Functional Testing for Databases using Flyway

- Running Unit and Integration Tests during Flyway Migrations

- Performance Testing Databases with Flyway and PowerShell

- Running Structured Database Tests in Flyway

- Running Database Assertion Tests with Flyway

Also relevant are the series of related articles on Test Data Management.

Catching database bugs early

When you are developing a database, it is surprisingly easy to miss errors in your code, even unsubtle ones that mean that it could never run successfully. To avoid his, you need to run regular tests using a set of queries that execute all the important business processes and reports, and in doing so exercise all the obvious joins, constraints and routines. If the latest changes have introduced a bug, you’ll know about it immediately, when it’s much easier to link cause and effect. If the roof of a building comes crashing down immediately after you injudiciously saw through a brace, you can be pretty sure what caused the problem.

Back in my development prime, test teams would leap on our latest efforts, extracting our mistakes with the zeal of those fishing for sea bass, and frightening the life out of the management. It was rough, but educational. Nowadays, because of the pressure to deliver functionality so rapidly, the only way to get the same sort of immediacy into our database testing is by automating as many of the tests as possible.

Any build or migration in the Development branch should trigger numerous tests. For each new version, your automated test process will need to load the required test data set, run the necessary statements and queries, and then check the results are exactly as expected. This article will, I hope, help you on the path to this sort of continuous testing, or test-driven development. It builds on concepts of the test features in the Flyway Teamwork framework described in Running Unit and Integration Tests during Flyway Migrations and Performance Testing Databases with Flyway and PowerShell. It would be worth reading those first to get a feel for the techniques.

Arrange-Act-Assert (AAA) test pattern

In this article, I’ll take what is probably the simplest test run, starting with the initial Build, running the required queries and statements, testing the results, and ending up with a tear-down. In the unit testing and integration testing of database designs, this format is more frequently referred to as the Arrange-Act-Assert (AAA) pattern, or the ‘Given-When-Then’ pattern. With databases, this must allow for an additional Teardown component.

- Arrange (all necessary preconditions, data and inputs): In this step, you set up the initial data state of your database. This might involve creating test data, inserting records, and whatever is required to prepare the database to simulate a real-world usage.

- Act (on the Database objects under test): This is where you perform the database process or processes that you want to test.

- Assert (That the process worked as expected): After the test actions are run, you check the results or outcomes against expected values or conditions. At this point you determine whether the database operation worked as expected. Assertions can help in determining whether the test has passed or failed.

- Teardown: While not always explicitly mentioned in the AAA pattern, teardown is crucial in database testing. It involves cleaning up the database state after the test is complete. This can include deleting test data, rolling back transactions, or otherwise resetting the database to its initial state.

This pattern dictates to us that any automated test process must be able to handle four different types of script, in any test. ‘Arrange’ requires scripts that make sure that the state is as expected and that the required objects are there and, maybe, ones you will create aren’t already there. The ‘Act’ phase requires scripts to exercise the queries or statements, ‘Assert’ is where you do tests to check the results, and ‘Teardown’ code mops up any mess. While our automated process must accommodate all four types of script, we don’t necessarily have to use all four. If we are merely checking performance, for example, or just making sure that it is error-free, then the ‘Assert’ section is likely to be blank.

Each of these steps will require different handling within a test run. ‘Arrange’ must be done first. Each ‘Act’ expression or batch should be run separately, perhaps with timing, maybe several times, or possibly in a random order. ‘Assert’ tests usually require that results are returned and compared with the expected result. Teardown needs to be done even when there are terminating errors.

How Flyway makes it Simpler

Flyway can run tests automatically if they are scripted. Flyway’s architecture is devised so you can set a test process off via a ‘callback’. Basically, after every significant event, such as the start or end of a migration, Flyway calls a process that looks for a file containing a script that can be executed. The callback script must have a name that conforms to the Flyway convention.

For the leading databases, you can run the tests in an AfterEach SQL callback script so that, if a test fails with a database error, the entire migration step, which is run within a transaction, then rolls back. Alternatively, you can achieve the same thing by running the tests within the migration step itself, and raising an error if the test fails (or even if it doesn’t). You can also opt to handle a test error without a rollback, by using an After callback.

You’ll quickly see that, although it is a good start, it is not enough for a sophisticated test to merely execute a series of tests within a migration step, or within a single SQL script as a callback. For AAAT tests, we could create four separate files for the four different parts of a script for each test run (the Arrange…Act…Assert…Teardown sections), but it is a hassle, especially if you have a specific test for each migration, perhaps run via a callback. How would you test the whole set out in your favorite IDE? How do you keep scripts together and organized? It is often hard enough getting developers to write test scripts, let alone organize them.

How would you then run the tests to see if they run without error and get timings from them, to check performance? It gets worse. Some tests require you to run the ‘Act’ expressions in random order, maybe for many iterations. You’ll see this in performance tests, soak tests and in those debugging sessions for those pesky locking-and-blocking problems.

Using a conventional test script with comment-based annotations

To sidestep all these problems, I use a conventional script that, while you develop the test, allows you to create the ‘Arrange’, ‘Act’, ‘Assert’ and ‘Teardown’ in a conventional IDE such as SSMS, HeidiSQL, RazorSQL or however you prefer. Each phase is labelled by a block comment, as are any necessary instructions such as ‘execute all 45 times randomly‘, ‘execute next 45 times serially‘, ‘pause all 2 secs‘ or ‘pause next 10 secs‘. If the SQL expressions are all in the correct order of ‘Arrange’, ‘Act’, ‘Assert’ and ‘Teardown’ you can also develop and test it easily in an IDE.

I’ve provided an example test script for the Pubs database, called ThePubsExerciser, which runs all the most important business and reporting queries for the Pubs database. For each test run, all we want to know is: do they all still work correctly? Do they still meet the performance specifications? It’s a good script to run, alongside the suite of unit, integration and performance tests, each time Flyway successfully creates a new version. As I write this, it comes in two flavors: SQL Server and PostgreSQL. It has block comments to demark the various parts of the test as sections, with ‘Arrange’, ‘Act’ and ‘Teardown’ labels:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 |

/* Arrange */ drop view if exists dbo.Book_Purchases_By_Date; drop view if exists dbo.TitlesTopicsAuthorsAndEditions; drop view if exists dbo.SalesByMonthAndYear; drop function if exists dbo.PublishersEmployees; drop function if exists dbo.calculate_monthly_sales; drop function if exists dbo.TheYear; GO CREATE VIEW dbo.SalesByMonthAndYear …etc.… /* Act */ --Retrieve all titles along with the number of authors for each title SELECT title.title_id, title.title, Count (*) AS author_count FROM titles title LEFT JOIN titleauthor ta ON title.title_id = ta.title_id GROUP BY title.title_id, title.title; Go …etc.… /* Teardown */ drop view if exists dbo.Book_Purchases_By_Date; drop view if exists dbo.TitlesTopicsAuthorsAndEditions; …etc.… |

The script contains several expressions, which are usually delimited by the semicolon character. The expressions are in four sections. The ‘Assert’ section in this example is blank, since our goal is simply to make sure the script is error free and to check performance of each individual query. At the end of a test run, we want a report that will tell us which part of the file failed, or, if it succeeds, how long each SQL Expression took.

Let’s split the task into phases. These AAAT tests were designed to be run within the Teamwork framework, but the first two stages can also be run independently:

- Slicing up the script with a tokenizer

We use the SQL Tokenizer to splice a script into its component parts, first into the different AAAT sections of the script, then to individual SQL expressions. It parses out the ‘annotation’ comment blocks as well as keywords, identifiers, string numbers and so on. In AAAT test scripts the annotation comments can contain instructions on how you want a SQL Expression in the ACT or ASSERT sections to be executed. You can specify generally how often they are run, whether they are run in the order they are written or in random order, whether you pause after running, and if so, how long to pause. - Compiling a JSON object for running AAAT tests

The output of the SQL Tokenizer, including all the details of the individual SQL expressions, and annotations that list what needs to be executed, is ‘compiled’ into an object that can be saved as a JSON file and then reused. I’ve provided aRun-AnnotatedTestScriptfunction that reads an AAAT tests file, parses it, saves the JSON file and the executes each of the expressions in the ACT section of the test file according to the instructions. - A full AAAT test run with reporting, within the Flyway teamwork framework

In the final stage, I demo a full AAAT test run within the Flyway Teamwork framework, using either a Flyway callback or post-migration script to locate and run the required AAAT tests, allowing you to specify exactly how the run proceeds, and then saving all results and any errors to a simple ASCII report.

Slicing up a SQL script using a Tokenizer

As in any computer language, it is not a trivial task to slice up any script into its components, or search through it for individual keywords. For a start, they could be in strings or block comments. You cannot even slice up a series of SQL statements or queries that easily. If, for example, you are using semicolon (‘;’) as a query terminator, as most dialects except for SQL Server and Sybase do, how would you find it in code but reject it within a block comment, end-of-line comment or a string? You can’t. You don’t need a parser to do this, because neither a block comment, nor end-of-line comment or a string are recursive. However, you do need a tokenizer that splits up the input stream into tokens that hold their value, type, and their location within the original string.

We’ll use the same SQL Tokenizer that I use for finding details such as three-part-identifiers or CREATE TABLE statements, called Tokenize_SQLString. I’ve demoed how to use it previously to find all the dependencies in routines such as views, where we don’t hold dependency information.

It is possible to use the tokenizer in isolation, but for testing work it is most effective when used within the Flyway Teamwork framework, which already supplies built-in features to help with testing. This includes the ability to use the built-in features of the RDBMS to provide the true execution timings for individual SQL expressions.

The tokenizer will detect the expression delimiter, usually a semi-colon (;) or a GO command (but you can specify your own, or a list), and split the contents into the individual queries. We can use it to find what tables are being used in a series of SQL Expressions. Here it is, just finding out what tables are being accessed by a CTE.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 |

@' WITH author_sales AS (SELECT au.au_id, au.au_fname, au.au_lname, Sum (sale.qty) AS total_sales FROM dbo.authors au JOIN dbo.titleauthor ta ON au.au_id = ta.au_id JOIN dbo.sales sale ON ta.title_id = sale.title_id GROUP BY au.au_id, au.au_fname, au.au_lname) SELECT au_id, au_fname, au_lname, total_sales FROM author_sales; '@| Tokenize_SQLString|foreach -Begin{$test=0; $LocalObjects=@(); } {if (($Test -eq 0) -and ($_.Value -in ('From','Join','Apply','Insert','with')) -and ($_.Name -ieq 'Keyword')) {;$Test=1;$Previous=$_.Value} #multi-part reference elseif (($Test -eq 1) -and ($_.Value -ieq ('INTO')) -and ($_.Name -eq 'Keyword')-AND ($Previous -ieq 'Insert')) {<# do nothing #>} elseif (($Test -eq 1) -and ($_.Type -like '*Part Dotted Reference') -and ($_.Name -eq 'identifier')) { write-output $_.Value; $test=0 } #Single-part reference elseif (($Test -eq 1) -and ($_.Type -like 'identifier') -and ($_.Name -eq 'reference')) { if ($previous -eq 'WITH'){$LocalObjects+=$_.Value} else {write-output $_.Value; $test=0 } } else {$test=0;} # back to square 1 }| Sort-Object -Unique | where {$_ -notin $LocalObjects} |

Which gives:

dbo.authors dbo.sales dbo.titleauthor

The framework already contains a convenient Execute-SQLStatement cmdlet for executing ad-hoc SQL with timings. I’ve now added a Run-EachSQLExpression function that uses the tokenizer to process the supplied SQL script file, splices it up and executes each SQL expression within it, one after the other, on the target database.

|

1 |

Run-EachSQLExpression -TheFileName '<pathToTheFile>' -TheDBDetails $DBDetails |

We want to run this for any RDBMS (it is up to you to provide the valid SQL for testing!) so we need the $dbDetails object to determine the database details (the preliminary.ps1 script, in the framework, create the $dbDetails hashtable for you, containing all the information that Flyway needs).

Compiling a reusable JSON object for running AAAT tests

If you are using the same test file repeatedly, and it is a large file, it can pay to compile the SQL once and save it as a JSON file. This is done by a cmdlet in the Flyway Teamwork framework called Create-TestObject. It takes the output of the tokenizer and converts it into an object that can be executed within the framework as many times as you need.

In an AAAT test script, the block comments, parsed by the tokenizer, are being used to demark the various parts of the test as sections, with ‘Arrange’, ‘Act’, ‘Assert’ and ‘Teardown’ labels. We can annotate the block comments in the script to specify that individual statements be run a specified number of times, and that the routine should pause a specific number of seconds before between each statement, and to run the statements randomly or in order.

- /* execute all 2 times randomly */ – you just want to execute them all twice, in a random order. By default, they are only done once serially.

- /* execute all 45 times randomly */ – all the expressions in the ACT and ASSERT are done 45 times, each time in a random order

- /* execute next 45 times serially */ – you just want to execute them all forty-file times, in the order you specified in the file

- /* ‘pause all 2 secs’*/ – pause before every execution for two seconds

- /* pause next 10 secs*/ – pause before just the next execution for ten seconds

All this information is kept in the JSON object and is therefore easily repeated, and read by another Cmdlet, or accessed by any up-to-date scripting language.

To execute either a raw SQL Script file with any necessary annotations, or a JSON file containing the model, there is a cmdlet in the framework called Run-AnnotatedTestScript that you can use to test scripts. It executes each individual SQL expression, with timings, and according to the instructions in the annotated comments:

|

1 |

Run-AnnotatedTestScript $pathtoTheFile $DBDetails |

It takes the specified script, and turns it into a JSON test object using Create-TestObject (or if there is already a compiled JSON version, it will use that instead). It then runs the sections of an AAAT script, running each SQL Expression or statement individually, on the database specified by $dbDetails, and capture timings It will execute the ARRANGE section first, then do all the scripts in the ACT section as many times as you need, pausing between them if you specify it, shuffling the order if you require that, and providing timings in the report directory.

Here is just a sample of the output:

the transaction in '

-- The number OF orders for each title FROM each company

SELECT Sum(qty) AS Quantity, Title, Coalesce(stor_name, 'Direct Order') AS Bookshop

FROM

dbo.sales

INNER JOIN dbo.publications

ON publications.Publication_id = sales.title_id

INNER JOIN dbo.stores

ON stores.stor_id = sales.stor_id

GROUP BY Title, Stor_name;

-- The number OF orders of each title, and a list of who bought them FROM each company

SELECT Sum(qty) AS Quantity, title, String_Agg(stor_name,', ')AS Bookshops

FROM

dbo.sales

INNER JOIN dbo.publications

ON publications.Publication_id = sales.title_id

INNER JOIN dbo.stores

ON stores.stor_id = sales.stor_id

GROUP BY Title;

' took 47 ms.

the transaction in '

/* This finds the top 5 authors with the highest total sales, retrieves the titles

they have written, and includes the average sales quantity for each title. */

WITH top_authors

AS (SELECT TOP 5 au.au_id, au.au_fname, au.au_lname,

SUM (sale.qty) AS total_sales

FROM

authors au

JOIN titleauthor ta

ON au.au_id = ta.au_id

JOIN sales sale

ON ta.title_id = sale.title_id

GROUP BY

au.au_id, au.au_fname, au.au_lname

ORDER BY total_sales DESC), avg_sales

AS (SELECT title_id, AVG (qty) AS avg_qty FROM sales GROUP BY title_id)

SELECT ta.au_id, ta.au_fname, ta.au_lname, t.title_id, t.title, t.price,

s.avg_qty

FROM

top_authors ta

JOIN titleauthor ta2

ON ta.au_id = ta2.au_id

JOIN titles t

ON ta2.title_id = t.title_id

JOIN avg_sales s

ON t.title_id = s.title_id;' took 328 ms.

the transaction in '

--Retrieve all single-authored titles and a list of their author:

SELECT title, DatePart (YEAR, title.pubdate) AS "year published",

String_Agg (Concat (au.au_fname, ' ', au.au_lname), ', ') AS "Author(s)",

title.title_id

FROM

titles title

INNER JOIN titleauthor ta

ON ta.title_id = title.title_id

INNER JOIN authors au

ON au.au_id = ta.au_id

GROUP BY

title.title_id, title.title, title.pubdate

HAVING Count (*) = 1;' took 281 ms.

Once you have mastered the problem of reading block comments in a SQL Script, you have a solution that is easily extended. What, for example, if you need to load in a particular dataset for the test or the assertion? Put the instruction into a block comment and extend the Run-AnnotatedTestScript to let PowerShell do it for you.

A full AAAT test run within the framework, with reporting

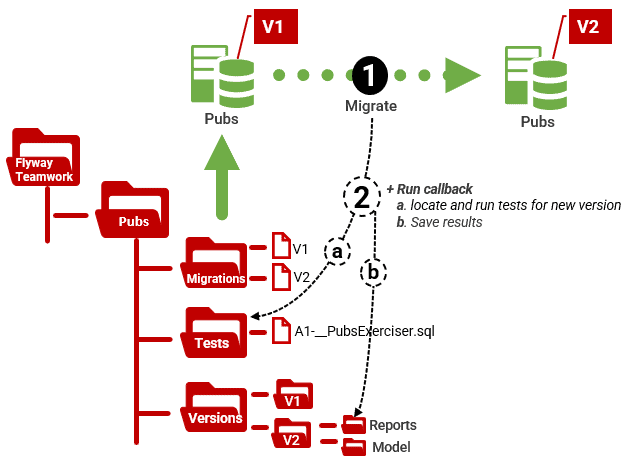

Finally, let’s see a full test run within my Flyway Teamwork framework using a Flyway callback to locate and run the required AAAT tests, and producing a report that will tell us which part of the file failed, or, if it succeeds, how long each SQL Expression took.

Included in the framework is a test mechanism where test files are kept within a Test subdirectory of a Flyway project, in much the same way as migrations files are saved in a Migrations directory. With test files, you don’t specify the version, but instead specify the start and end version for which the test is appropriate. Assuming you have a valid $DBDetails hashtable and have then got the current version using the $GetCurrentVersion script task, then the test files will be executed, if the database version is within the range of the versions specified in the filename.

All these tasks are carried out by a Run-TestsForMigration cmdlet. I’ve explained how it works previously in Performance Testing Databases with Flyway and PowerShell and I’ve now extended the cmdlet to support AAAT tests. It runs an annotated SQL script through construction to teardown, by calling the Run-AnnotatedTestScript cmdlet (described previously).

The Run-TestsForMigration cmdlet will run all the available and suitable tests in the directory you specify. By default, it runs all the tests, but you can choose the type of test (T= a Timed Test, P=a performance test, each one timed and A=an AAAT test). For example:

|

1 |

Run-TestsForMigration -TheDetails $DBDetails -ThePath $Path -type 'A' |

We simply place the AAAT test file (in this case, ThePubsExerciser) in the Test directory with an ‘A’ prefix and the start and end versions for which the test is valid. For example, A1.1.10-__PubsExerciser.sql) is an AAAT test valid from versions 1.1.10 to current version. We then use a callback script to run all the required tests, each time Flyway successfully creates a new version.

This callback script uses the $GetCurrentVersion function in the PowerShell Teamwork framework to get the version of the database specified in $dbDetails (see Cross-RDBMS Version Checks in Flyway). It then uses Run-TestsForMigration to search through the test locations, gather up all the tests that, according to their filename, are valid for the database version under test, then runs them to get the timings. It places the result in the project’s Report subdirectory, for the version tested. The report will tell you which part of the file failed, or, if it succeeds, will tell you how long each SQL Expression took. It saves the results in the Reports subdirectory of the current version. You can find an example callback in the Pubs Flyway teamwork project.

If, of course, you just wish to run all your tests without bothering about callbacks, you can just do this, which searches all your test locations in the project for every parent branch:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

#to run all the tests for this project $dbDetails.TestsLocations.Split(',') | foreach { #for each test location $TestLocation = $_ @('sql', 'ps1') | foreach { #for each type (sql or powershell) of test #run the unit and integration tests Run-TestsForMigration -DatabaseDetails $DBDetails -ThePath $TestLocation -type 'T' -script $_ #run the performance tests Run-TestsForMigration -DatabaseDetails $DBDetails -ThePath $TestLocation -type 'P' -script $_ #run the assertion tests Run-TestsForMigration -DatabaseDetails $DBDetails -ThePath $TestLocation -type 'A' -script $_ } } |

If you run a test, the result appears on the screen and is also saved in a file in the tests subdirectory of reports, for the version under test, within the Versions directory. If, for example, you ran this for a particular branch, on version 1.1.12, the report would be saved in Versions\1.1.12\reports\tests\*.

Conclusion

With database development, you are expected to deliver reliable functionality rapidly. There are many techniques and disciplines that contribute to this, such as working cooperatively with others in the team, but it is being able to fail fast and correct code rapidly that seems to me to be vital. At the heart of a DevOps culture is scripting, and scripting is the key to rapid unit, performance, and integration testing.

Without waxing philosophical, I believe that tests should happen routinely, driven as scripts. This means that they should happen after a build, merge, or migration. To do this, you need a ‘hook’ of some sort that initiates the test scripts. Though you can use GitHub for initiating a migration, Flyway shines at providing hooks, especially Flyway Teams, because you can execute test scripts in callbacks before or after an event such as a successful migration.

Although it is disconcerting to occasionally see a sea of red on your computer screen as a result of testing a migration, it is worth it to help you deliver bug-free database code and design that performs well.