Bringing DevOps to the database. Part 2: Continuous delivery

In part 1 of Bringing DevOps to the database, we saw how DevOps thinking is moving from the application to the database. By encouraging collaboration not competition between developers and Database Administrators (DBAs), choosing the right tools, and introducing version control for databases, organizations can open the doors to database DevOps. That’s not the end of the story, it’s just the beginning because with version control for the database in place, other practices like continuous integration can follow.

Version, or source, controlling database changes is the first – and vital – step in bringing DevOps to databases. It ensures database developers communicate their changes with others in the team, always have a version to roll back to if required, and maintain a solid audit trail. With the ability to share code, multiple people and teams can access pieces of code, or a database, at the same time.

However, introducing source control for databases can be problematic if new tools enforce strict, unfamiliar procedures and compel database developers to work in a different way from application developers. Far better to make the most of the infrastructure, knowledge and working practices that already exist inside organizations by integrating source control for databases with application version control systems like Team Foundation Server or Subversion.

It doesn't need to go against the principles of the IT Infrastructure Library or ITIL, either, which advocates IT services are aligned to the needs of the business and support its core IT processes. The most widely adopted framework for IT Service Management in the world, ITIL is often blamed for imposing burdensome processes on the development pipeline.

As Jez Humble explains in 'Continuous Delivery and ITIL: Change Management', source control actually contributes to making effective use of the lightweight mechanisms ITIL provides for change management. He specifically writes: “Every proposed change to your systems, whether to an application, your infrastructure, your database schema, or your build, test and deployment process itself, should be made via source control.”

Continuous delivery may not be the goal – but it can be the prize

With source control in place, application and database development teams work in parallel and coordinate changes through the same processes. It is then much easier for databases to keep pace with updates in the application, increasing the likelihood of releasing more stable, consistent builds.

The opportunity then arises to take the DevOps approach further by aligning the development of database scripts with application code in continuous integration and release management. Many application developers already use continuous integration to automatically test their code, and release management tools to automate application deployment. Database developers can join them.

The result? Continuous delivery for databases. Continuous delivery extends DevOps thinking from software development through to deployment. Rather than regarding the release of software as a separate activity, continuous delivery means software is always ready for release.

Additional data management, migration, and monitoring processes may be required to safeguard data, but the broader benefits of continuous delivery gained by standardizing deployment processes through automation remain the same for the database.

By automating onerous processes so they are quick, reliable, and predictable, DBAs and development teams are freed to concentrate on more important tasks like high availability, replication, downstream analysis, and alerts and backups.

The support continuous delivery provides for the easier, faster release of changes also means development and operations teams no longer need to rely on big bang releases to provide value to customers. Instead, organizations have the processes and mechanisms in place to provide a steadier stream of more frequent releases.

The first step is continuous integration

Continuous integration is the process of ensuring that code and related resources are integrated regularly and tested by an automated build system, allowing teams to detect problems early.

Once source control is in place, continuous integration can be used to trigger an automated build as soon as code changes are checked in, with unit tests and early feedback in the form of errors returned. If a build fails, it can be fixed efficiently and re-tested, so a stable current build is always available.

A typical continuous integration server uses a script to execute a series of commands that build an application. These commands clean directories, run a compiler on source code, and execute unit tests. Where applications rely on a database back-end, those build scripts can be extended to perform the additional tasks of testing and updating the database.

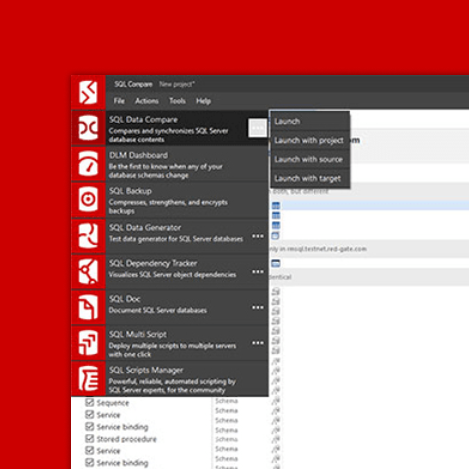

If it sounds like a hard task, it’s not. There are already tools out there that plug into existing build servers like Jenkins or TeamCity and, on each check-in to source control:

- Build and validate the SQL creation script contained in the database package the continuous integration tool needs to deploy the changes

- Run tests against the database package, by generating test data and outputting the results in JUnit XML format

- Sync the existing database with the latest version in source control

- Publish the database package to a feed artifact repository ready for deployment

Any migration scripts that have been checked in for deployment with the database changes are also executed against the target database during this step. The database package, or artifact, that is published will then include the migration scripts alongside a snapshot of a state of the database schema, and any source controlled static data.

This artifact is an important part of the release process because it represents a version validated through testing. It thus becomes a consistent starting point for the release of database changes to subsequent environments.

The second step is release management

Although the continuous integration environment often mirrors the production environment as closely as possible for applications, this is rarely the case for databases.

The artifact published at this stage therefore needs to be deployed against a staging database, which should be an exact copy of the production database, or as near as possible. This will generate an upgrade script for deployment, and the whole artifact can then be reviewed by the DBA to confirm it is production-ready.

Just as there are many strategies for application deployment, there are a variety of ways to handle database deployment. The three most common are:

Fully manual

A comparison tool is used to compare the structure of the staging database against the production environment and generate a script for the differences. DBAs then review this script before running the updates against the target environment. This makes change management simple and is often the preferred method when working with smaller databases or less frequent deployments.

Automated, one-click release using a release management tool

If the release management tool in place uses a NuGet feed as its package repository, there are tools available that can publish the package from the continuous integration server to the release management software. The release management tool can then automate the deployment to production.

Managed deployments using a staging environment

For DBAs who want more control and have a deeper insight into database deployments, tools have been developed specifically for databases that integrate with release management tools like Octopus Deploy and Bamboo to provide the update scripts, change reports, and review steps needed to make database changes to production efficiently. DBAs can review the changes, check the staging and production environments match, and use the same script to deploy to production.

Is it really worth the hassle?

Reading about the advantages of source control, continuous integration, and release management for databases is one thing. Doing something about it is another because it does require change. Adopt software that works with and alongside your existing software and you minimize some of that change, but it still means adapting current processes, encouraging communication and collaboration, and getting buy-in from different teams.

The pay-off, however, is being recognized by more and more companies as worthwhile. The main finding from the latest State of DevOps Report from DORA and Puppet Labs was that high-performing IT organizations experience a 5 times lower change failure rate – and recover from failure 96 times faster than other organizations. The report also found the same organizations deploy 46 times more frequently, and lead times are 440 times faster.

Quite simply, DevOps and continuous delivery practices create the conditions for delivering value faster, sustainably. Include the database and both application and database development become faster and more reliable. Which is good for everyone.

Part 1 of 'Bringing DevOps to the database' talked about why version control is an essential first step. Read more about it.

If you'd like to know more about how database DevOps can help you deliver value faster while keeping your data safe, visit our online resource pages.