3 ways Managed Services Providers can offer more value

For many businesses, using a Managed Services Provider (MSP) makes sense, particularly when it comes to database management, monitoring and security. Businesses can control costs while still having access to expert resources, and can scale the service up or down as required.

It’s perhaps no surprise then that 47% of people surveyed in the Channel Futures 2020 MSP 501 Report identified professional services as a growth area, 51% identified enhanced network monitoring, and 85% identified security services.

For many MSPs, meeting this demand is a challenge because, according to the 2020 Global State of the MSP Report from Datto, 25% of MSPs say hiring good people is one of the top issues that keeps them awake at night. That figure rises to 27% in Europe, where hiring good people is the #1 pain point for MSPs.

That said, there are ways database MSPs can save time, minimize effort and work more efficiently, while at the same time providing more value to their customers.

Manage growing server estates more effectively

Remote monitoring is a common task for MSPs and typically involves keeping an eye on resource usage like CPU, disk space, memory, and I/O capacity to spot trends and understand when more capacity will be needed. The same information also enables baselines to be established so that, for example, it is immediately apparent whether high resource utilization is an abnormal spike, a worrying recent trend, or just normal behavior for the period in question.
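
To make the baseline idea concrete, here is a minimal Python sketch. It assumes you already collect readings (for example CPU %) and can pull the values recorded for the same period on previous weeks. The function name, thresholds and sample figures are hypothetical, and a monitoring tool's own baselining will be more sophisticated. A reading is treated as unusual when it sits more than three standard deviations above the historical mean; an unusual reading counts as a trend, rather than a one-off spike, when the readings before it were already elevated and climbing.

```python
from statistics import mean, stdev


def classify_reading(history, recent, current, z_threshold=3.0, trend_ratio=1.2):
    """Label a resource reading as 'normal', 'spike' or 'trend'.

    history: past readings for the same period (e.g. this hour on previous weeks)
    recent:  the last few readings before `current`, oldest first
    current: the reading being assessed (e.g. CPU %)
    """
    baseline = mean(history)
    spread = stdev(history)  # needs at least two historical readings
    if spread == 0:
        unusual = current > baseline
    else:
        # How many standard deviations above the baseline the reading sits.
        unusual = (current - baseline) / spread > z_threshold
    if not unusual:
        return "normal"  # within what this period usually looks like
    rising = all(b >= a for a, b in zip(recent, recent[1:]))
    if rising and mean(recent) > baseline * trend_ratio:
        return "trend"  # elevated and climbing for a while, not a one-off
    return "spike"


cpu_history = [40, 42, 38, 41, 39]  # hypothetical CPU % for the same hour on past weeks
print(classify_reading(cpu_history, [39, 41, 40], 41))  # normal
print(classify_reading(cpu_history, [39, 41, 40], 90))  # spike
print(classify_reading(cpu_history, [55, 65, 80], 90))  # trend
```

The two thresholds are deliberately simple knobs: `z_threshold` controls how far from normal a reading must be before it is flagged, and `trend_ratio` controls how elevated the preceding readings must be before the pattern is reported as a trend rather than a spike.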

Alongside scheduled monitoring, reactive monitoring also has its place: responding to alerts about a drop in performance or an increase in deadlocks, and drilling down to the cause of a problem before it escalates. Here, the history and timelines that monitoring provides help to identify whether a stress phenomenon coincides with a particular type of processing, such as a weekly aggregation or a scheduled data import.
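
A minimal sketch of that correlation step, assuming the monitoring history records when each scheduled job started and finished. The job names, dates and five-minute margin are hypothetical; the point is simply to check which jobs were running when an alert fired.

```python
from datetime import datetime, timedelta


def coinciding_jobs(alert_time, job_runs, margin=timedelta(minutes=5)):
    """Return the names of jobs running at, or within `margin` of, the alert time.

    job_runs: (name, start, end) tuples taken from the monitoring history.
    """
    return [name for name, start, end in job_runs
            if start - margin <= alert_time <= end + margin]


# Hypothetical job history for one day.
runs = [
    ("weekly aggregation", datetime(2020, 6, 7, 2, 0), datetime(2020, 6, 7, 2, 45)),
    ("scheduled data import", datetime(2020, 6, 7, 6, 0), datetime(2020, 6, 7, 6, 20)),
]

print(coinciding_jobs(datetime(2020, 6, 7, 2, 30), runs))  # ['weekly aggregation']
print(coinciding_jobs(datetime(2020, 6, 7, 12, 0), runs))  # []
```

The margin allows for alerts that fire just after a job finishes, when its effects (for example, a backlog of I/O) are still being felt.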

Many MSPs use their own scripts for monitoring and rely on the built-in tools in platforms like SQL Server and Oracle. With SQL Server, for example, Windows Performance Monitor (PerfMon), Dynamic Management Views (DMVs) and Extended Events, alongside Activity Monitor in SQL Server Management Studio, can provide some of the data required for effective monitoring.

The cracks begin to appear when MSP server estates grow significantly in size and complexity. The typical mix of on-premises, on-client, and public cloud-hosted servers adds another level of difficulty, both in managing the estate and in finding a monitoring solution that works across all of it. This is where a third-party monitoring tool like SQL Monitor comes into play.

It doesn’t replace DMVs, Extended Events and similar built-in tools, which will still be required. Instead, it takes on the heavy lifting of collecting and managing the data, analyzes it, and presents an easy-to-digest picture of activity, issues and alerts across all the monitored servers on a single dashboard.

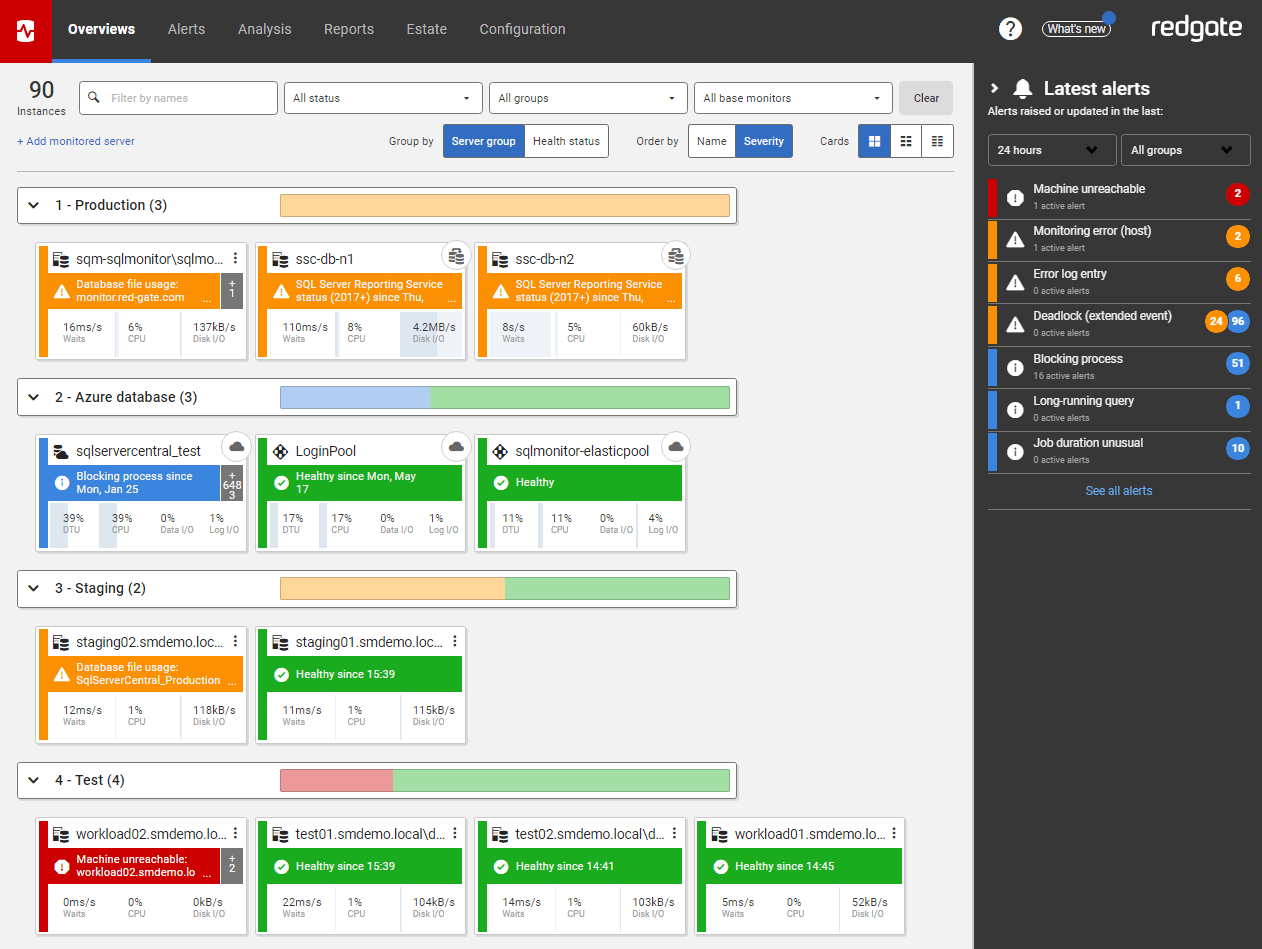

For larger estates where there are likely to be different versions and editions of SQL Server, as well as instances in the cloud, this can be particularly useful because SQL Monitor shows the status and key metrics for every server:

Importantly for MSPs, it also allows databases to be grouped in a number of different ways. In the example above, the databases are grouped by test, staging, cloud and production environments. You might prefer to group them by customer, environment type, or health status.

However the databases are grouped, all SQL Server instances, availability groups, clusters, and virtual machines can be viewed in one central web-based interface, with customizable alerts that can be configured to suit SQL Server estates of any size and complexity.

This removes manual, repetitive daily tasks and enables MSPs to keep pace with expanding estates, discover issues before they have an impact, and diagnose problems to find the root cause in minutes, not hours.

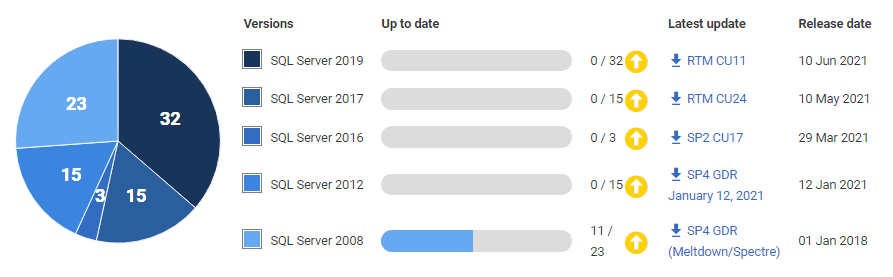

Another feature of particular use with estates where there are different flavors of SQL Server in use is the Estate tab. The summary of installed versions alone is invaluable because it shows, at a glance, the status of each SQL Server version, when the latest update was issued, and where patches are required:

Beyond this, it also offers a wealth of information about issues like SQL Server licensing, disk usage, and backups, which makes managing estates easier, however complex they are.

Monitor for security as well as performance

Alongside monitoring for performance, there is an additional and now pressing need to monitor for security. New data protection regulations, for example, require organizations to monitor and manage access, ensure data is available and identifiable, and report when any breaches occur.

Organizations also need to know which servers are online, whether unscheduled changes are being made inside databases, whether tables or columns are being added or dropped, and whether permissions are being altered.
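
As a rough illustration of detecting unscheduled structural and permission changes, the sketch below compares two snapshots of a database taken at different times. It assumes the snapshots have already been captured as plain Python data (in SQL Server they could be populated from catalog views such as `INFORMATION_SCHEMA.COLUMNS` and `sys.database_permissions`). The function names and sample tables are hypothetical, and a monitoring tool would normally capture and compare this information for you.

```python
def diff_schema(before, after):
    """Compare two schema snapshots, each mapping table name -> set of column names."""
    changes = []
    for table in sorted(after.keys() - before.keys()):
        changes.append(("table added", table))
    for table in sorted(before.keys() - after.keys()):
        changes.append(("table dropped", table))
    # For tables present in both snapshots, compare their columns.
    for table in sorted(before.keys() & after.keys()):
        for column in sorted(after[table] - before[table]):
            changes.append(("column added", f"{table}.{column}"))
        for column in sorted(before[table] - after[table]):
            changes.append(("column dropped", f"{table}.{column}"))
    return changes


def diff_permissions(before, after):
    """Compare two sets of (principal, permission, object) tuples."""
    return {"granted": sorted(after - before), "revoked": sorted(before - after)}


# Hypothetical snapshots taken yesterday and today.
schema_then = {"Customers": {"Id", "Name"}, "Orders": {"Id", "CustomerId"}}
schema_now = {"Customers": {"Id", "Name", "Email"}, "Audit": {"Id"}}
perms_then = {("app_user", "SELECT", "Customers")}
perms_now = {("app_user", "SELECT", "Customers"), ("app_user", "DELETE", "Customers")}

for change in diff_schema(schema_then, schema_now):
    print(change)
print(diff_permissions(perms_then, perms_now))
```

Any reported change that does not match a planned deployment is a candidate for investigation.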

So, beyond traditional expectations, businesses need to know, and keep a record of, which servers and what data are being managed, and be able to discover the reason for any performance issues quickly and accurately.

Should a data breach occur, this record becomes even more crucial, because organizations are obliged to describe the nature of the breach, the categories and number of individuals concerned, the likely consequences, and the measures to address it.

While this adds another element to the workload of MSPs, they can prepare for it with an advanced monitoring solution like SQL Monitor, which can monitor the availability of servers and databases containing personal data and raise alerts about issues that could lead to a data breach.

In his Monitoring SQL Server Security article on the Redgate Hub, Phil Factor looks at what’s required and details how SQL Monitor can be used for everything from detecting SQL injection attacks to identifying changes in permissions, users, roles and logins.

Secure data everywhere

Another important requirement of the new data protection legislation being introduced is to protect personal data all the way through the development process. This is a major concern for MSPs that act as remote DBAs because many developers like to use a copy of production databases to test changes against – the very databases which contain the personal data that needs to be protected.

One solution is to keep a version of the production database with a limited set of anonymous data that is always used for development and testing. This does, though, mean testing changes against a database that is neither realistic nor large enough for the impact on performance to be assessed.

Another solution is to take a copy of the production database and mask the data manually by replacing columns with similar but generic data. This copy can then be used in development and testing but will age very quickly as ongoing changes are deployed to the production database.

This is where data cataloging and masking tools, which identify, pseudonymize and anonymize data, come in. They are now being adopted to provide database copies that are truly representative of the original and retain its referential integrity and distribution characteristics.
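
One common way to preserve referential integrity when masking is deterministic pseudonymization: the same original value always maps to the same replacement, so keys still join across tables. The Python sketch below uses a keyed hash (HMAC-SHA256 from the standard library) to do this. It is an illustrative technique, not a description of how Redgate's tools work internally, and the key, table contents and `user` prefix are hypothetical. Note that this is pseudonymization rather than anonymization: anyone holding the key can re-derive the token for a known value, so the key must never travel with the masked copy.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes, prefix: str) -> str:
    """Map a sensitive value to a stable token: same value and key -> same token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    # 16 hex chars (64 bits) keeps tokens short; collisions are unlikely but possible.
    return f"{prefix}-{digest[:16]}"


def mask_column(rows, column, key, prefix):
    """Return copies of rows with one column replaced by its pseudonym."""
    return [{**row, column: pseudonymize(row[column], key, prefix)} for row in rows]


KEY = b"hypothetical-secret-kept-outside-the-copy"

customers = [
    {"email": "ann@example.com", "country": "UK"},
    {"email": "raj@example.com", "country": "IN"},
]
orders = [
    {"order_id": 1, "email": "ann@example.com", "total": 40},
    {"order_id": 2, "email": "ann@example.com", "total": 15},
    {"order_id": 3, "email": "raj@example.com", "total": 99},
]

masked_customers = mask_column(customers, "email", KEY, "user")
masked_orders = mask_column(orders, "email", KEY, "user")

# The join key still matches across tables, so every order finds its customer.
known = {c["email"] for c in masked_customers}
print(all(o["email"] in known for o in masked_orders))  # True
```

Hashing preserves which rows belong together, but not realistic-looking values or the original data's distribution; dedicated masking tools replace sensitive data with realistic test data to address that.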

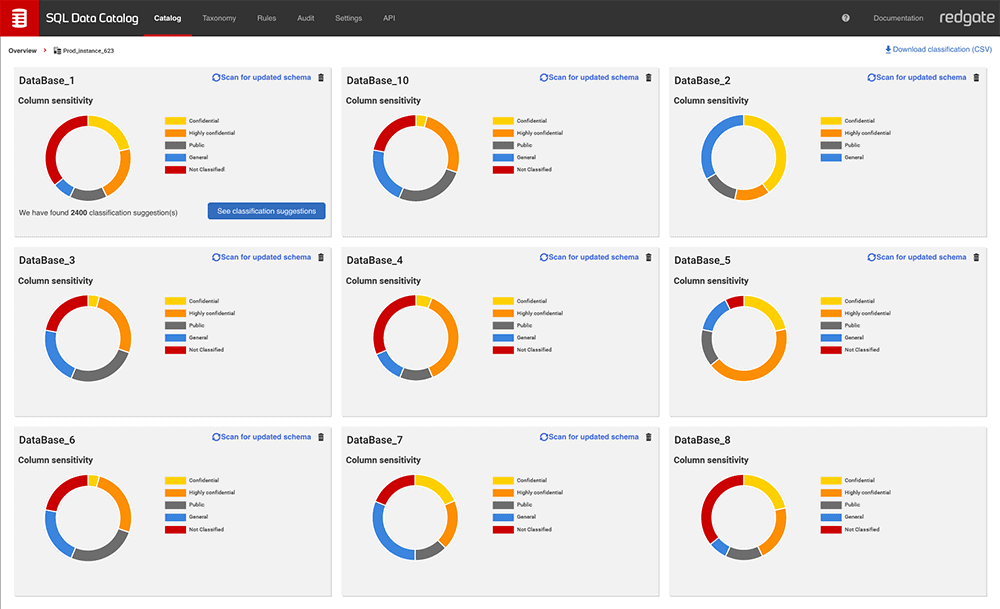

The first step is identify the data being held, so that those columns with sensitive and Personally Identifiable Information (PII) can be classified and ranked. A tool like SQL Data Catalog can rapidly accelerate the process, using intelligent rules and recommendations based on automated data scanning to remove much of the manual effort:
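
To show the basic idea behind rule-based discovery, here is a deliberately naive Python sketch that classifies and ranks columns by matching their names against patterns. The rules, labels and ranks are hypothetical and are not SQL Data Catalog's own; real tools also scan the data itself.

```python
import re

# Hypothetical rules: (pattern on column name, classification, rank); higher rank = more sensitive.
RULES = [
    (re.compile(r"ssn|social_?security|passport|national_?id", re.I), "Highly confidential", 3),
    (re.compile(r"e_?mail|phone|mobile|address|post_?code|zip", re.I), "Confidential PII", 2),
    (re.compile(r"first_?name|last_?name|surname|birth|dob", re.I), "PII", 1),
]


def classify_columns(columns):
    """Return (column, classification, rank) for matching columns, most sensitive first."""
    matches = []
    for col in columns:
        hits = [(label, rank) for pattern, label, rank in RULES if pattern.search(col)]
        if hits:
            # If several rules match, keep the most sensitive classification.
            label, rank = max(hits, key=lambda hit: hit[1])
            matches.append((col, label, rank))
    # sorted() is stable, so columns with equal rank keep their input order.
    return sorted(matches, key=lambda m: m[2], reverse=True)


for col, label, rank in classify_columns(["CustomerEmail", "PassportNo", "OrderTotal", "LastName"]):
    print(rank, label, col)
```

Name matching alone produces false positives: `BusinessName`, for instance, contains `ssn` and would be ranked as highly confidential. This is one reason such results are best treated as recommendations to review rather than final classifications.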

SQL Data Catalog integrates with Redgate Data Masker, which can then be used to provide the masking set needed to protect the sensitive data that has been identified. To make the process easier, SQL Provision combines this masking capability with Microsoft’s proven virtualization technology to create database copies that are a fraction of the size of the original. This enables the copies, or clones, to be created in seconds, with the data in those copies automatically masked.

Where clients have Oracle databases, Redgate has taken the same data masking approach with Data Masker for Oracle, which has been written specifically for that database architecture and replaces sensitive data with realistic, anonymized test data.

The important point here is that clients will often be at different stages of this identification, classification, masking, and provisioning journey. Wherever they are, Redgate tools can be used to make one part or linked parts of that journey easier.

Summary

These are challenging times for MSPs. Clients naturally expect a premium service, as required, often at short notice. MSPs need to deliver that service when talent and time are often in short supply. Fortunately, the introduction and ongoing development of tools which can streamline and improve development practices will enable MSPs to offer additional, value-added services that integrate with a customer’s existing systems and processes.

It often starts with monitoring, but it can extend to other parts of the development process, such as securing data. This will help MSPs differentiate themselves and not only win new business but also retain their existing clients.

For more information about how proactive monitoring can help you accelerate growth without increasing headcount, visit the Redgate MSP page online.

And for more information about how to optimize the performance and ensure the availability of your databases and servers, visit our monitoring resources page.