Five Ways to Simplify Data Masking

How do we protect sensitive data and still get realistic test databases without creating a masking process that is slow, brittle, or impossible to maintain? This article sets out five practical ways that Redgate Test Data Manager helps teams deliver secure, realistic test data reliably.

Data masking has two jobs: to protect sensitive data and to keep it useful. Any solution that fails at either one creates risk: compliance risk if protection is incomplete, or business risk if the masked test data is unusable or unrealistic.

The challenge is sustaining both goals as databases grow, structures evolve, and regulations change. Manual masking scripts or complex synchronization rules may meet a short-term compliance need, but they don’t scale. They’re prone to human error, create inconsistencies, and slow down development.

Redgate Test Data Manager was designed to make data masking automated, version-controlled, and easy to maintain. It gives teams a shared, testable definition of what constitutes anonymized data: which tables and columns must be protected and how that data must be transformed to ensure it cannot be linked back to individuals or reveal sensitive business information. Using simple, configuration-driven automation, it applies this protection consistently, with a clear audit trail to prove compliance, while keeping the test data realistic and fit for purpose.

This article sets out the guiding principles of effective data masking, outlines five best practices that define a scalable and maintainable approach, and explains how Redgate Test Data Manager helps teams put it into practice. The result is a process that keeps data secure, compliant, and useful without accumulating the complexity that so often turns masking into a high-cost maintenance burden.

The principles of effective data masking

When evaluating any data masking solution, IT leaders need confidence that it will deliver protected, useful data, both now and as data, regulations, and systems evolve.

- Data must always be protected.

Behind every record is a customer, patient, or employee who trusts the organization to keep their information safe. Any use of production data in development, testing, analytics, or AI workflows must be fully anonymized to meet legal and moral obligations.

- Masked data must remain usable.

If masking breaks queries, relationships, or reporting logic, its value is lost. The masked data must still behave like real data so it can support reliable development, testing, and analysis.

- The process must stay simple and sustainable.

Even the most secure masking approach will fail if it becomes brittle, slow, or too expensive to maintain. The process needs to adapt as data volumes grow and schemas change, without introducing fragility or excessive overhead.

Five best practices for simple, maintainable data masking

So how do you build a process that achieves all this: one that is fast, sustainable, and consistently delivers safe yet realistic data? The answer lies in simple, configuration-driven masking underpinned by automation and version control, so that every run is predictable, repeatable, and auditable.

Just as Infrastructure as Code turns manual server configuration into a versioned, automated, testable, repeatable, and auditable process, we need a test data management solution that provides “test data provisioning as code.”

The following five best practices show how Redgate Test Data Manager applies these principles to its data masking process, so that it is effective and reliable, and stays that way even as data volumes grow, schemas evolve, teams change, and compliance requirements shift.

1. Start with ‘end-to-end automation’ in mind

Automation is the foundation of every scalable data masking strategy. It ensures consistency, reliability, and a verifiable audit trail as data and regulations evolve.

From the proof-of-concept stage onward, design for full automation. The goal is a process that can run unattended and end-to-end, with transparent reporting and auditability built in.

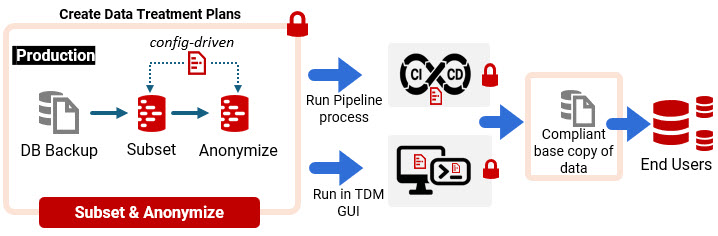

With Redgate Test Data Manager, we can define a repeatable, fully automated process that will:

- Extract a representative subset of data with referential integrity intact.

- Detect and classify sensitive data using PII discovery with a predefined taxonomy and datasets, aligned with NIST’s definition of linked information.

- Map each classification to a corresponding masking rule and dataset stored in simple configuration files.

- Apply masking rules automatically, using straightforward data masking transformations, which are deterministic where required.

- Generate a compliant “base copy” of masked production data that developers then use safely in development, testing, and CI pipelines.
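To make the "detect and classify" step above more concrete, here is a minimal Python sketch. It does not show how Redgate Test Data Manager performs PII discovery. The pattern list, the classification labels, and the `classify_columns` function are all hypothetical, and the sketch looks only at column names, where a real discovery process would need to look further than that.

```python
import re

# Hypothetical name patterns for illustration; not a real product taxonomy.
PATTERNS = [
    ("EmailAddress", re.compile(r"e[-_]?mail", re.IGNORECASE)),
    ("PhoneNumber", re.compile(r"phone|mobile", re.IGNORECASE)),
    ("FullName", re.compile(r"(first|last|full)[-_]?name", re.IGNORECASE)),
]


def classify_columns(columns):
    """Map each column name to the first matching classification, or None."""
    result = {}
    for column in columns:
        result[column] = next(
            (label for label, pattern in PATTERNS if pattern.search(column)),
            None,
        )
    return result


if __name__ == "__main__":
    for name, label in classify_columns(["Email", "LastName", "OrderTotal"]).items():
        print(f"{name}: {label or 'unclassified'}")
```

Columns that come back as `None` are the ones a reviewer would need to look at by hand: either they are genuinely non-sensitive, or the patterns need extending.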

Redgate’s TDM Autopilot project demonstrates this principle in practice. Once every element of the pipeline works reliably, you have a process that’s repeatable, auditable, and maintainable. Ops teams can adapt masking as schemas evolve or regulations change, without introducing fragility. Dev teams gain quick access to realistic, compliant test data, removing one of the biggest delays in the release cycle. Faster and more complete testing leads to more frequent and less fragile software releases.

This is what delivers ROI: the business is protected from the risk and cost of data breaches, and also sees higher-quality software delivered faster, with less disruption.

2. Subset first: don’t mask what you don’t need

Subsetting enforces the principle of least data exposure: protect what must be protected and remove everything that doesn’t add value to testing or analysis. It also makes every copy of the data faster, cheaper, and safer to use.

The safest way to protect data is not to include it in the first place. Teams rarely need every column or every row: often, subsets, summaries, or views provide all that’s required for testing or reporting. By excluding unnecessary data from the start, you not only reduce compliance risk but also speed up provisioning and testing, and lower infrastructure costs.

Redgate Test Data Manager implements this principle using its built-in subsetting utility, which automatically extracts a representative subset of the data while maintaining all key relationships and referential integrity. It will allow you to:

- Eliminate PII tables or rows of data not needed for testing

- Extract only the data paths needed to integration-test a specific business flow (e.g., customer → order → invoice)

- Produce smaller databases that provision faster and cost less to store
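The idea of extracting a single data path while keeping relationships intact can be sketched in a few lines of Python. This is an illustration only, not Redgate's subsetting utility: the `customers`, `orders`, and `invoices` tables, and the functions `make_demo_db`, `rows_where_in`, and `subset_for_customers`, are hypothetical names built on an in-memory SQLite database.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create a tiny in-memory database with a customer -> order -> invoice chain."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY,
                             customer_id INTEGER REFERENCES customers(id));
        CREATE TABLE invoices (id INTEGER PRIMARY KEY,
                               order_id INTEGER REFERENCES orders(id));
        INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben'), (3, 'Cy');
        INSERT INTO orders VALUES (10, 1), (11, 1), (12, 2), (13, 3);
        INSERT INTO invoices VALUES (100, 10), (101, 12), (102, 13);
        """
    )
    return conn


def rows_where_in(conn, table, column, values):
    """Return rows of `table` whose `column` is in `values` (empty input gives no rows)."""
    values = list(values)
    if not values:
        return []
    marks = ",".join("?" for _ in values)
    # Table and column names are fixed by this sketch, never taken from user input.
    sql = f"SELECT * FROM {table} WHERE {column} IN ({marks}) ORDER BY id"
    return conn.execute(sql, values).fetchall()


def subset_for_customers(conn, customer_ids):
    """Walk customer -> order -> invoice so every kept child row keeps its parent."""
    customers = rows_where_in(conn, "customers", "id", customer_ids)
    orders = rows_where_in(conn, "orders", "customer_id", [c[0] for c in customers])
    invoices = rows_where_in(conn, "invoices", "order_id", [o[0] for o in orders])
    return {"customers": customers, "orders": orders, "invoices": invoices}


if __name__ == "__main__":
    for table, rows in subset_for_customers(make_demo_db(), [1]).items():
        print(table, rows)
```

Because each child table is filtered by the keys of the rows already selected from its parent, the subset never contains an order without its customer or an invoice without its order.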

3. Define masking as configuration, not manual scripts

Define masking in code to guarantee consistency and compliance. Manual scripting may work once but isn’t repeatable or auditable and won’t scale.

Manual or script-based masking can’t reliably guarantee compliance. As schemas evolve, gaps appear, and there’s no audit trail to prove that masking was consistently applied across all non-production environments. A masking strategy built on complex scripts and synchronization rules quickly becomes impossible to maintain or automate as requirements and data change.

Redgate Test Data Manager is designed around three principles: simplicity, control, and automation. The goal is to make “test data provisioning as code” a practical reality, where the full process is version-controlled, automatable, and repeatable, just like an application build or deployment pipeline.

Define masking in versioned code

Redgate Test Data Manager defines all classifications, datasets, and masking instructions in modular JSON configuration files, creating a simple, declarative record of what data must be masked and how. When tracked in version control alongside schema migration scripts, these TDM configurations provide a single, auditable source of truth that evolves with your data structures and enables automated, consistent, and repeatable masking runs.
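As a rough illustration of what "masking as a declarative record" means, the Python sketch below loads a small JSON document and resolves each classified column to its masking rule. The JSON layout, the key names (`classifications`, `rules`, `dataset`, `deterministic`), and the `load_masking_plan` function are assumptions made up for this example. They are not Redgate Test Data Manager's actual file format.

```python
import json

# Hypothetical schema for illustration only; not a real product file format.
CONFIG_JSON = """
{
  "classifications": {
    "dbo.Customers.Email": "EmailAddress",
    "dbo.Customers.FullName": "FullName"
  },
  "rules": {
    "EmailAddress": {"dataset": "emails", "deterministic": true},
    "FullName": {"dataset": "names", "deterministic": true}
  }
}
"""


def load_masking_plan(text):
    """Resolve each classified column to its rule; fail if a classification has no rule."""
    config = json.loads(text)
    plan = {}
    for column, classification in config["classifications"].items():
        rule = config["rules"].get(classification)
        if rule is None:
            raise ValueError(
                f"No masking rule for classification {classification!r} on {column}"
            )
        plan[column] = rule
    return plan


if __name__ == "__main__":
    for column, rule in load_masking_plan(CONFIG_JSON).items():
        print(column, "->", rule)
```

The point of the design is that the file says what must be masked and how, and nothing about the order of operations. A reviewer can read a diff of that file in version control and see exactly which columns gained or lost protection.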

Handle complexity through configuration, not scripts

Many data masking solutions fall back on complex, fragile rules or custom scripts as soon as the built-in masking rules and datasets don’t meet requirements. With Redgate Test Data Manager, teams simply adapt the configuration. A single, version-controlled options file acts as the controller for each masking run. It is simple to exclude tables or columns, override default classifications, or define custom masking rules and datasets. If a masking run requires an AI-generated dataset for a specialized data type, or a column-specific generator that respects complex constraints, it’s added as a simple configuration option.

Simple, configuration-driven workflows

With all TDM configuration versioned and auditable, teams can orchestrate the entire masking process end-to-end using a single YAML workflow file. For example, a typical automated workflow might restore a backup, subset the data, and then classify and mask it (or apply existing, version-controlled masking configurations), before saving the masked data to a fresh copy of the database and exporting it as a backup ready for development or testing. These workflows can incorporate automated tests that continuously verify both data coverage and data utility (see best practice 5).

4. Use deterministic masking for consistency

A masking process that scales with the business must stay simple even when the databases aren’t.

Many database systems, especially older or highly customized ones, contain missing keys, denormalized structures, or duplicated data. Even in well-designed and normalized databases, there are often implicit relationships and dependencies: names embedded in email addresses, gender implied in names, or partial identifiers repeated across multiple tables.

In these cases, manual masking often introduces update anomalies, where the same PII value appears in multiple places but is masked differently in each. This leaves the masked data inconsistent and unreliable for testing, analysis, or reporting.

Redgate Test Data Manager supports automated, deterministic masking. It uses a secure, cryptographic salt to ensure that identical inputs always produce identical outputs within a single masking operation. For example, a customer name will appear consistently across every related table, such as orders and support tickets. Determinism, like other masking behaviors, is controlled through a simple configuration option in the masking configuration file. No extra scripts or rules are required.
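The general technique behind deterministic masking can be sketched with Python's standard library. The source does not describe the algorithm Redgate Test Data Manager uses, so this example swaps in a common approach: a keyed hash (HMAC-SHA256) of the original value selects a replacement from a dataset. The `FAKE_NAMES` list and the `mask_name` function are hypothetical.

```python
import hashlib
import hmac

# Hypothetical replacement dataset; a real one would be far larger.
FAKE_NAMES = ["Alex Reed", "Sam Ortiz", "Jo Lindqvist", "Priya Nair", "Lee Moreau"]


def mask_name(value: str, salt: bytes) -> str:
    """Pick a replacement keyed on HMAC(salt, value), so equal inputs map equally."""
    digest = hmac.new(salt, value.encode("utf-8"), hashlib.sha256).digest()
    index = int.from_bytes(digest[:8], "big") % len(FAKE_NAMES)
    return FAKE_NAMES[index]


if __name__ == "__main__":
    salt = b"per-run-secret"  # assumption: one secret salt per masking operation
    orders = ["Jane Doe", "Raj Patel"]
    tickets = ["Raj Patel", "Jane Doe"]
    print([mask_name(n, salt) for n in orders])
    print([mask_name(n, salt) for n in tickets])
```

With the same salt, "Jane Doe" receives the same replacement in both tables, which is what keeps joins and reports consistent. With a dataset this small, two different real names can map to the same replacement, so a realistic dataset needs enough entries to keep such collisions acceptable.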

By maintaining referential integrity even when relationships aren’t explicitly defined, Test Data Manager keeps complex databases consistent and useful without the need for fragile synchronization scripts or manual intervention. The result is lower maintenance effort, predictable masking behavior, and trusted test data across every environment.

5. Continuously verify masking coverage and data utility

Continuous verification is the safeguard that turns good masking into trusted masking. Automated checks ensure every table and column is covered, and the data remains usable for testing.

Effective data masking is an iterative process that continuously improves coverage and strengthens auditability, while ensuring that the masked data remains useful for testing.

Every time a table or column is added or modified, we need to ensure the corresponding change has been applied to the masking configuration. Outdated or incomplete masking rules can leave sensitive data exposed and create inconsistencies that cause test failures or misleading results. If the masking process isn’t provably complete and auditable, you carry the cost of masking every distributed copy without removing the burden of demonstrating compliance, adding overhead without eliminating risk.

To avoid this, masking classifications and rules must be treated as living documents, updated in step with both new regulations and changes to the database schema. Scheduled reviews involving compliance, security, and development teams ensure that masking continues to meet business and regulatory needs.

Teams should also run automated builds from version control that include metadata checks. These checks should confirm that every table and column is classified, and masked where required, and that the masked data remains usable, with relationships intact and queries behaving as expected.
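A coverage check of this kind can be very small. The Python sketch below compares the columns found in a schema against the columns that have a recorded decision, and reports anything left over. The function name `find_unclassified`, the `table.column` naming, and the idea of an explicit exclusion list are assumptions for illustration, not part of any Redgate tool.

```python
def find_unclassified(schema_columns, classifications, excluded=()):
    """Return schema columns with no classification or exclusion decision, sorted."""
    decided = set(classifications) | set(excluded)
    return sorted(set(schema_columns) - decided)


if __name__ == "__main__":
    # Hypothetical inputs: in practice these would come from the database
    # catalog and from the version-controlled masking configuration.
    schema = ["customers.email", "customers.name", "orders.total", "orders.notes"]
    classified = {"customers.email": "EmailAddress", "customers.name": "FullName"}
    not_sensitive = ["orders.total"]

    missing = find_unclassified(schema, classified, not_sensitive)
    if missing:
        raise SystemExit(f"Unclassified columns: {', '.join(missing)}")
    print("All columns have a classification decision")
```

Run as part of a build, a check like this fails as soon as a schema change adds a column that nobody has reviewed, here `orders.notes`, instead of letting it slip into a masked copy unprotected.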

In Redgate Test Data Manager, every masking step can be executed from the GUI, or automatically from the CLI, on demand or on a schedule. This allows teams to integrate secure test data provisioning into their existing operational workflows, data-refresh jobs, or CI/CD pipelines, ensuring each masking run is consistent, logged, and verifiable. The result is a living audit trail of masking coverage and data quality, and the end of stale, unmanaged test copies left lying around for years.

The payback

A sustainable data masking process must run efficiently and be easily adaptable, even on large, complex databases. It should provide only the data that is essential for testing, minimizing overhead, speeding up testing, and fitting cleanly into existing testing and delivery workflows.

To achieve this, the data discovery, classification, and masking of PII must be automated, auditable, and simple to maintain. Only then does the process deliver lasting value across both data security and development:

- Data is always protected when used outside the secure production environment, with verifiable compliance to regulations and internal policies.

- Fast time to value, because automated discovery, classification, and masking replace months of manual setup and configuration.

- Higher ROI, as developers can self-serve realistic test data quickly, enabling better testing, fewer defects, and more reliable deployments.

- Scales smoothly with the business – data masking won’t quickly become a costly and unmaintainable burden as data volumes increase and schemas evolve.

The result is a sustainable balance: data stays protected and provably compliant while remaining fit for purpose across development, testing, and analytics. Development and modernization can then move faster without compromising safety.