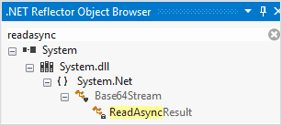

Debug third-party code fast

Using third-party technologies is a great way to get things done fast without reinventing the wheel. But libraries, components, and frameworks you didn't write are hard to debug.

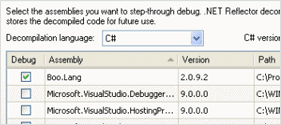

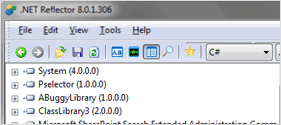

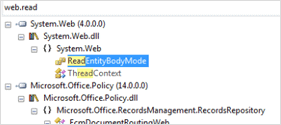

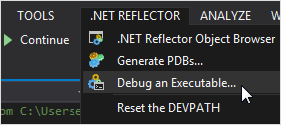

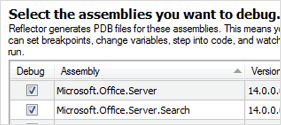

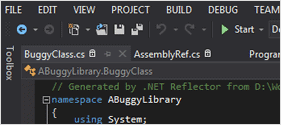

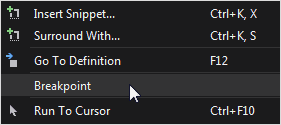

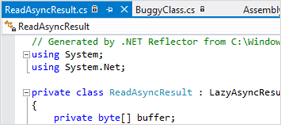

.NET Reflector saves time and simplifies development by letting you see and debug into the source of all the .NET code you work with.

Follow bugs through your own code, third-party components, and any other compiled .NET code you work with. You can view third-party code in Visual Studio and debug into it just like your own.