Data Masking in Practice

This article takes a strategic look at common data masking and anonymization techniques, and the challenges inherent in protecting certain types of sensitive and personal data, while ensuring that it still looks like the real data, and retains its referential integrity, and distribution characteristics. It also explains, briefly, with references, the tools that one can use to mask different types of data and how to provision development and test machines with these 'de-sensitized' databases, or alternatively to produce fake data that looks like the real thing, but in fact is generated randomly.

Original Publication Date: 25/04/2018.

This article is the second in a short series. The previous article described the principles of data masking and when and why we need it. This one describes the practicalities of implementing data masking in complex relational databases. It will cover:

- When does the masking take place?

- Masking techniques

- Practical difficulties with masking

- Tools for masking and anonymizing data

When does the masking take place?

Data Masking software either does its obfuscation in-situ, in passage, or uses the ‘on the fly’ approach, sometimes known as the ‘Server-To-Server’ technique.

On-the-fly data masking

In the ‘on the fly’ technique, the obfuscation is done as part of copying, or cloning, the source database. The masking rules are applied as part of the process of moving the data from the source database to the target. This has the advantage that the data is never present in an un-masked form in the target database, so there is no danger of the original data being recoverable from the target database’s transaction log. If the changes were audited, that part of the database that does the audit can, and should be, withheld.

Although there are great security advantages to the obfuscation being performed on the original, security-fenced server, the process becomes ‘all-or-nothing’, and must be repeated if it proves to be inadequate. Dynamic data masking can sometimes be used to copy versions of the data, via a login for which dynamic masking has been applied, but this type of masking does not produce realistic data and can easily fall foul of constraint checks, when the data is imported into the target.

In-passage data masking

In-passage data masking takes place after the data is exported from the source and before it is imported. This usually works on the securely-held text files of the contents, in comma-separated value (CSV) format, XML, JSON or other transfer format. The process can involve Regex, at its simplest, but is more usually read into some form of document database.

This avoids the problems of artefacts of the data appearing in logs and audit trails. It also side-steps the interference of constraints, rules and triggers. Unfortunately, this will only be a postponement of the consistency checks on the masked data because the masked data will be checked on import and will need to comply with database rules once these checks are re-enabled.

In-situ data-masking

In-situ data-masking takes place after the database is copied, so that the software simply operates on the copied database. The masking rules are executed and controlled by software that then connects to the target and controls the execution of the masking rules. Although this allows incremental application of masking rules over time, the original data will remain in the logs and on the database pages.

Masking techniques

There are several methods for masking data depending on the type of data being masked, and on the masking requirements.

Complete substitution

Data in one or more columns of a table can be randomly substituted with values from an appropriate list, or by using a data generator that can supply credible values that pass check constraints. Credit Card numbers can, for example, be substituted with numbers that obey the validation rules of the provider. This preserves the look and feel of the existing data but requires a library of credible values. The disadvantage of this is that it is bound to alter the way that the data is distributed, unless the data generation technique can check the original distribution and conform to it.

Data shuffling

Data in one or more columns can be shuffled randomly against the key fields, much like a deck of cards. This might require certain rows to be respected or some other condition to be applied. Although this technique gets over the problem of data distribution, the obvious problem is that the individuals with unusual surnames such as Jack O’Diamonds are still there. Shuffling would not have cheered the victims of Ashley Madison if they had unusual names. Also, unless the shuffling is truly random, card-sharp techniques can be employed to unshuffle the pack.

Search and replace masking

This is used to modify text data by means of rules and regular expressions. It is often used to remove names or identifiers from text, substituting random length ‘###’ strings.

Number variance

Each number or date value in a column can be varied by some random percentage, whilst maintaining the original variance, range and distribution. This can useful where it would otherwise be possible to identify individuals by an exact match, such as date of birth, but is otherwise more of a blurring than an obfuscation. It will, for example, give an approximate value for salary information.

XXXing out

A technique commonly used by dynamic data masking within the RDBMS. Certain fields are replaced with a mask character (such as an ‘###’). This removes the actual content while preserving the same formatting. For example, dates such as these:

- 12/03/2012

- 28/10/2017

- 01/08/2015

If accessed by a login for whom masking is appropriate, would appear as:

- XX/XX/20XX

- XX/XX/20XX

- XX/XX/20XX

The masking characters effectively remove much of the sensitive content from the record while still preserving as much as possible of the look and feel. However, dynamic data masking can be defeated, easily, if the user has direct access to the database to execute queries, because the masking rules are held at table-level and the masking software cannot track the data from the original table. However, it is useful where users must be trained on the live system, and can interact with the data only via an application.

Practical difficulties with masking

All this might sound straightforward to achieve, but the detail of data masking is as complicated as the database that is being obfuscated and depends also on the purpose of data masking.

Denormalization

If a database has been denormalized, the sensitive date will be stored in several tables, and isn’t always likely to be in an obvious place. The name of a customer, for example, will appear against addresses, phone numbers, invoice headers, correspondence, JSON documents, XML fragments, references, logs, transcriptions of conversations and so on. To mask even a simple customer name could be nearly impossible. Even a well-normalized database can accidentally reveal personal information if an XML or text field is stored.

In some cases, before changing a value there must exist several arcane rules that specify what else needs to be altered and where.

Row-level data consistency

Column data is very rarely unrelated. An obvious example is that a male customer is unlikely to have the title of ‘Lady’ or ‘Mrs’, or to be called ‘Joyce’. The last names of customers will vary greatly according to where they live. Company names vary considerably with their line of business: The Red Lion, White Hart, Eato Burger Bar or Wheatsheaf are unlikely to be Banks, for example. Context is important.

A single column cannot even be shuffled without harming the verisimilitude of data. To ensure that data is correct within its context, data masking software must relate columns both internally, within a table and externally, table to table. Successful masking requires that you can modify columns in groups of rows, within a table, to contain identical values to the one you’ve changed, or to modify columns in another table to contain identical values to the one you’ve just changed. You sometimes need to substitute different values, according to a value in another column.

Constraints

Databases have many constraints, rules and triggers that are there to ensure that data is consistent and reliable. In other words, they are there to restrict the very activity you are attempting, the direct alteration of data in the database tables.

- A

CHECKconstraint can do basic checks on the value in a column, but can also ensure that there is consistency at the table level. By altering one or more values, theseCHECKconstraint rules can be violated. - You can also run into problems if the column containing the value you are altering is participating in a

PRIMARYKEYorFOREIGNKEYconstraint. This can mean that many masking rules can only by executed in a particular order, or in a particular way.

You can, of course, temporarily disable triggers, unique keys, check constraints or foreign key constraints while you perform the masking. This can be extremely useful, but the disabling of triggers can conceivably result in inconsistency, depending on the operation that the triggers needed to perform. Also, of course, you’ll have to reenable the constraints and keys at some point, and this can be the time you realize that there are a lot of inconsistencies that need mending.

Faced with the difficulties of altering data within a database, you might think that a better approach is to apply in-passage masking to text versions of the base tables, using scripts and Regexes, or by reading the files into a document database and processing it there.

This gets over the problem of log artefacts, which can reveal the original sensitive data, but the problems begin when the sanitized data is imported into the target, the empty copy of the database that is destined to be the masked version. This is because at some point, triggers, rules and constraints will have to be enabled and the chances of the data being gracefully absorbed into the new database can be frighteningly remote.

Distributed databases

Another problem can happen of your extracted data set originates in more than one database or instance. Masking software tends to work only on a single database, and you can get problems with masking the data within several databases in a way that yields consistent data.

Masking data that is part of a primary key

If you attempt to alter the data in a column that participates in a PRIMARY KEY, then you are likely to destroy the referential integrity of the database. To do this effectively, using a substitution strategy in a database, you will need to create a correlation table, which contains copies of the before- and after- values of the column to be masked. The correlation table is then used to relate the masked, or substituted, key values to the original ones, making sure that the new values are unique.

Having temporarily disabled all the relevant UNIQUE and FOREIGN KEY constraints in the target table, the correlation table is then used to alter the values in the PRIMARY KEY of the target table, and then to replace the original key values in the FOREIGN KEY columns of any referencing tables, with the new value. Then, we can re-enable the constraints in the target table. Finally, the temporary correlation table must be dropped for security reasons, since it forms a lookup mechanism between the new values and the old values.

For each referencing table, the FOREIGN KEY columns will generally have an index, which will, to speed the process, need to remain in place during the update. Likewise, the columns containing the original PRIMARY KEY values, in the correlation table, should be indexed.

Free text

Documents, memos, transcripts and disciplinary notes are difficult to sanitize in-situ. Although it is sometimes possible just to use ‘Lorem ipsum dolor sit amet, no pro mundi propriae signiferumque, tale perfecto urbanitas duo et. Ne errem apeirian euripidis nam, an mazim necessitatibus per. Vim salutandi assentior cotidieque et, vim ne ullum putent.. etc.’ this is unlikely to help with training.

The most useful approach is likely to be the use of markov chains to produce meaningless text that mimics the style of the original. Homer can, for example, be obfuscated to:

Nor yet e’en so Achilles let his counsel take:

who should strike the bird?

As less in skill not one was absent;

nor of his lofty vessel’s prow. The dark whirlwind’s force

as this wine we pour their hearts’ best blood

Theirs and their fam’d Allies who freely in thy house

receiv’d, for twenty days, the heav’nly Maid; Daughter of Cisseus.

Structured text (XML/JSON)

Structured text, such as in an XML column, can contain the very values that are being obfuscated, so they cannot be left alone. There is no point altering only the name column in a table, if the names also appear in an XML document that is supposed to hold supplementary information.

This structured text can contain data that is used by the application under test, training or development, so it will need to be parsed and, if necessary, subject to field-by-field masking, just as if it were normal relational data. There is no short-cut to this chore.

Intelligent keys

Some data items such as postal codes, employee numbers, credit card numbers and bank codes, have a structure containing several attributes. Unless the substitution is done with the same rules, they are likely to fail any validation checks performed within the application, and to violate CHECK constraints within the database.

The problem arises in the front-end application data-entry screens, which will check the content of these ‘intelligent keys’ prior to update. If we’ve masked data in these columns in a way that violates the internal rules governing the attributes of this data, then any screen that displays the value will never permit an update because the validity checks fail.

You can, of course, try shuffling the data in these columns, but a postal code, for example, could easily be checked against an address in the same row, so it is possible that the shuffled data will be rejected as inconsistent.

Training and demonstration data

Sometimes we need to anonymize a database for training or demonstration purposes, in such a way that certain data is consistent enough to support a training ‘scenario’. If you need to demonstrate an application process involving a certain user with, for example, certain accounts or other records, then the obfuscation process must take this into account. The easiest approach to this problem is to introduce consistent records into the obfuscated version of the database, after the obfuscation is completed.

Specialist data

There is certainly data that is common to many databases and can therefore be served by a list, such as names of countries, currencies and regions. Likewise, we can perform substitution from lists containing typical forenames or last names, valid credit card numbers from the most popular providers, and so on.

However, substitution of data soon gets complicated when we consider how to mask specialized words, such as the names of manufacturing parts, zoological taxonomies, because now we must deal with the diversities of culture. Even in Europe, lists would need to be in the sixteen most common languages.

There comes a time when lists need a supplement, which in its simplest form might be a reverse Regex expression that can generate random numbers or strings according to a regex expression.

This technique is most effective if the proportion of NULL values introduced into the output can be specified, and the distribution of random values can be altered to fit the distribution of the data. Ideally, there should be a way of scripting random data generators to accommodate any sort of condition constraints or data consistency.

Redgate tools for masking and anonymizing data

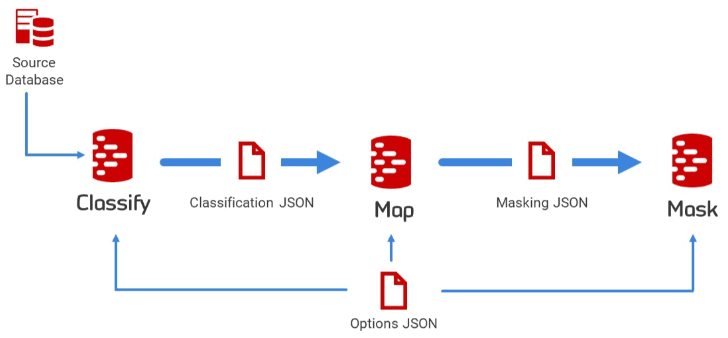

The anonymization component of Redgate Test Data Manager will allow you to sanitize private or sensitive data in your databases (SQL Server, PostgreSQL, MySQL or Oracle), so that this data is then safe to use for other business purposes, such as for development and testing work. The anonymization process will help you discover any PII in your databases, and classify it, and it can sync with existing data catalogs. It will map each identified PII column to a set of masking rules that, when executed, will replace the real data with synthetic, generated data.

It supports a wide range of masking rules, from simple data substitution and data shuffling rules to synchronization rules that will help you mask all your data consistently , even if it is stored in denormalized form. It will even attempt to help in difficult cases, where the databases lack the proper keys and constraints. This allows it to support deterministic masking, making it easier to maintain referential integrity and uniqueness rules, and to ensure that the masked data retains all the correct characteristics, relationships and distribution of the source production data. The result is test data that is not only safe to use, but also yields test results that much more accurately predict the behavior observed in the production database system.

Redgate Test Data Manager provisions copies of the sanitized databases as data containers (clones), allowing you to apply data anonymization as part of an automated database provisioning process that is viable even for enterprise-scale database systems.

For cases where you cannot, or prefer not to, use production data as a starting point, the alternative is to generate realistic but entirely fake data using the data generation component.

Summary

Even data extracts need to be created with great caution. Apparently innocuous data can be combined with other information to expose information about identifiable individuals. Location information, identifiers, or descriptive information, such as racial origins, can be used to unmask data. Fields containing compound identifiers, such as bank codes, can contain location information. XML fields can occasionally compromise a data extract by containing some or all of the original record, in denormalized form.

In cases where an entire database must be delivered in obfuscated form, the problems can get worse. If the log file is included, the obfuscated data can be either partially or completely recovered, and it is also possible to reconstruct some of the original data by examining the data pages unless there has been Residual Information in the database (RIP).

Also, the referential nature of data in the average database means that the apparently simple matter of changing the data in databases can get complicated quickly. Where data has been denormalized, a record can store the same value in several places, and so changing the value is a matter that needs caution and research. Replacing a value in a column that is participating in a primary key can be almost impossible, without a great deal of skill and knowledge.

There are times when development and testing requires data that is as close as legally -possible to the real production data. It is likely to be a regular demand. If, therefore, the creation of a masked or anonymized database is done regularly, it is best to automate the process so the pain of doing so isn’t wasted, and can be reused. Also, the routine can be tested alongside the database as it is extended and developed, to catch the problems that are occasionally introduced to the obfuscation process during development work.